
Talos Linux Guides

- 1: Installation

- 1.1: Bare Metal Platforms

- 1.1.1: Digital Rebar

- 1.1.2: Equinix Metal

- 1.1.3: ISO

- 1.1.4: Matchbox

- 1.1.5: Network Configuration

- 1.1.6: PXE

- 1.1.7: Sidero Metal

- 1.2: Virtualized Platforms

- 1.3: Cloud Platforms

- 1.3.1: AWS

- 1.3.2: Azure

- 1.3.3: DigitalOcean

- 1.3.4: Exoscale

- 1.3.5: GCP

- 1.3.6: Hetzner

- 1.3.7: Nocloud

- 1.3.8: OpenStack

- 1.3.9: Oracle

- 1.3.10: Scaleway

- 1.3.11: UpCloud

- 1.3.12: Vultr

- 1.4: Local Platforms

- 1.4.1: Docker

- 1.4.2: QEMU

- 1.4.3: VirtualBox

- 1.5: Single Board Computers

- 1.5.1: Banana Pi M64

- 1.5.2: Friendlyelec Nano PI R4S

- 1.5.3: Jetson Nano

- 1.5.4: Libre Computer Board ALL-H3-CC

- 1.5.5: Pine64

- 1.5.6: Pine64 Rock64

- 1.5.7: Radxa ROCK PI 4

- 1.5.8: Radxa ROCK PI 4C

- 1.5.9: Raspberry Pi 4 Model B

- 1.5.10: Raspberry Pi Series

- 2: Configuration

- 2.1: Configuration Patches

- 2.2: Containerd

- 2.3: Custom Certificate Authorities

- 2.4: Disk Encryption

- 2.5: Editing Machine Configuration

- 2.6: Logging

- 2.7: Managing Talos PKI

- 2.8: NVIDIA Fabric Manager

- 2.9: NVIDIA GPU (OSS drivers)

- 2.10: NVIDIA GPU (Proprietary drivers)

- 2.11: Pull Through Image Cache

- 2.12: Role-based access control (RBAC)

- 2.13: System Extensions

- 3: How Tos

- 3.1: How to enable workers on your control plane nodes

- 3.2: How to scale down a Talos cluster

- 3.3: How to scale up a Talos cluster

- 4: Network

- 4.1: Corporate Proxies

- 4.2: Network Device Selector

- 4.3: Virtual (shared) IP

- 4.4: Wireguard Network

- 5: Discovery Service

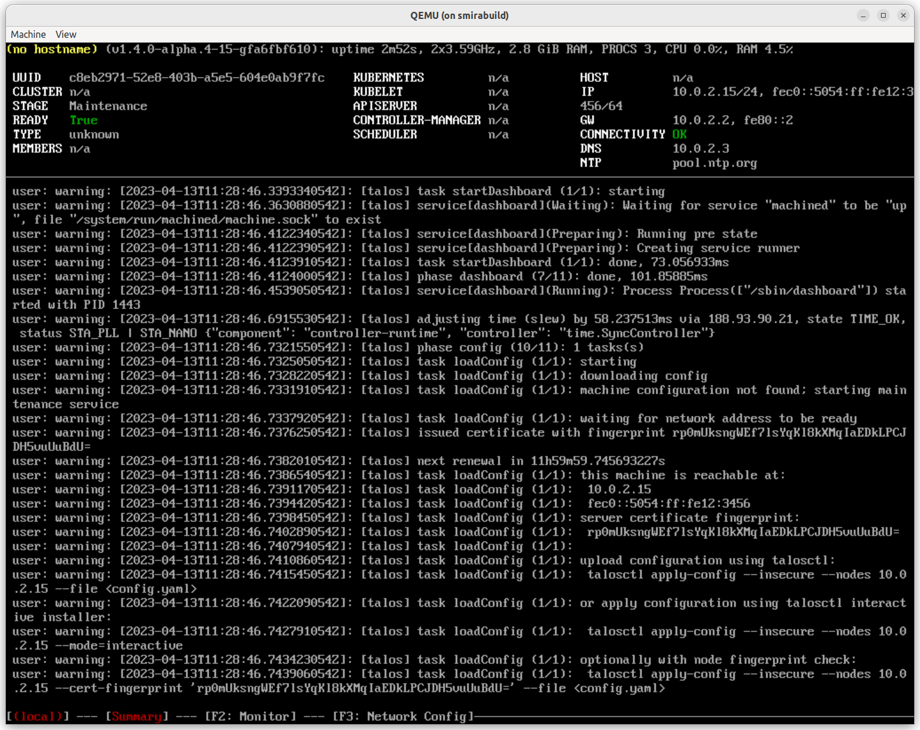

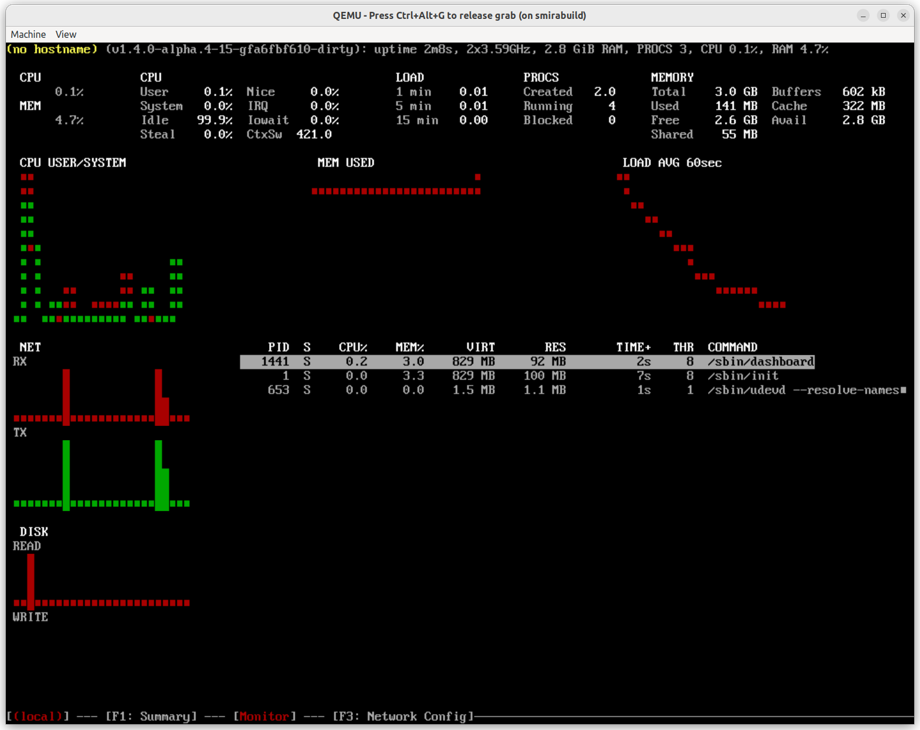

- 6: Interactive Dashboard

- 7: Resetting a Machine

- 8: Upgrading Talos Linux

1 - Installation

1.1 - Bare Metal Platforms

1.1.1 - Digital Rebar

Prerequisites

- 3 nodes (please see hardware requirements)

- Loadbalancer

- Digital Rebar Server

- Talosctl access (see talosctl setup)

Creating a Cluster

In this guide we will create a Kubernetes cluster with 1 worker node and 2 control plane nodes. We assume an existing Digital Rebar deployment and some familiarity with iPXE.

We leave it up to the user to decide if they would like to use static networking, or DHCP. The setup and configuration of DHCP will not be covered.

Create the Machine Configuration Files

Generating Base Configurations

Using the DNS name of the load balancer, generate the base configuration files for the Talos machines:

$ talosctl gen config talos-k8s-metal-tutorial https://<load balancer IP or DNS>:<port>

created controlplane.yaml

created worker.yaml

created talosconfig

The load balancer is used to distribute the load across multiple control plane nodes. This isn’t covered in detail, because we assume some load balancing knowledge beforehand. If you think this should be added to the docs, please create an issue.

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.
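As a rough illustration of what such a patch can look like, the sketch below builds a one-operation RFC 6902 patch and prints the resulting command rather than running it. The patched path (/machine/install/disk) and the value /dev/vda are assumptions chosen for illustration; substitute whatever field you actually need to change.

```shell
#!/usr/bin/env bash
# RFC 6902 JSON patch with a single "replace" operation.
# The path and value are illustrative assumptions; adjust them to your setup.
PATCH='[{"op": "replace", "path": "/machine/install/disk", "value": "/dev/vda"}]'

# Print the command instead of executing it, so the sketch works without talosctl.
printf "talosctl gen config talos-k8s-metal-tutorial https://<load balancer IP or DNS>:<port> --config-patch '%s'\n" "$PATCH"
```

Keeping the patch in a variable (or a file under version control) makes it easier to regenerate the same configuration later.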

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode metal

controlplane.yaml is valid for metal mode

$ talosctl validate --config worker.yaml --mode metal

worker.yaml is valid for metal mode

Publishing the Machine Configuration Files

Digital Rebar has a built-in fileserver, which means we can use this feature to expose the talos configuration files.

We will place controlplane.yaml, and worker.yaml into Digital Rebar file server by using the drpcli tools.

Copy the generated files from the step above into your Digital Rebar installation.

drpcli file upload <file>.yaml as <file>.yaml

Replacing <file> with controlplane or worker.

Download the boot files

Download a recent version of boot.tar.gz from GitHub.

Upload to Digital Rebar:

$ drpcli isos upload boot.tar.gz as talos.tar.gz

{

"Path": "talos.tar.gz",

"Size": 96470072

}

We have some Digital Rebar example files in the Git repo you can use to provision Digital Rebar with drpcli.

To apply these configs you need to create them, and then apply them as follows:

$ drpcli bootenvs create talos

{

"Available": true,

"BootParams": "",

"Bundle": "",

"Description": "",

"Documentation": "",

"Endpoint": "",

"Errors": [],

"Initrds": [],

"Kernel": "",

"Meta": {},

"Name": "talos",

"OS": {

"Codename": "",

"Family": "",

"IsoFile": "",

"IsoSha256": "",

"IsoUrl": "",

"Name": "",

"SupportedArchitectures": {},

"Version": ""

},

"OnlyUnknown": false,

"OptionalParams": [],

"ReadOnly": false,

"RequiredParams": [],

"Templates": [],

"Validated": true

}

drpcli bootenvs update talos - < bootenv.yaml

You need to do this for all files in the example directory. If you don’t have access to the drpcli tools, you can also use the web interface.

It’s important to have a corresponding SHA256 hash matching the boot.tar.gz.
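One way to obtain that hash is sketched below. sha256_of is a hypothetical helper, not part of drpcli or talosctl, and the demo hashes a throwaway file instead of a real boot.tar.gz. The resulting value is presumably what belongs in the bootenv's IsoSha256 field, though that mapping is an assumption here.

```shell
#!/usr/bin/env bash
# Print the SHA256 digest of a file as a bare hex string.
# sha256_of is a hypothetical helper name used only in this sketch.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | awk '{print $1}'      # GNU coreutils
  else
    shasum -a 256 "$1" | awk '{print $1}'  # fallback, e.g. on macOS
  fi
}

# Demo on a temporary file; in practice, pass the path to boot.tar.gz.
sample="$(mktemp)"
printf 'hello' > "$sample"
sha256_of "$sample"
```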

Bootenv BootParams

We’re using some of Digital Rebar’s built-in templating to make sure the machine gets the correct role assigned.

talos.platform=metal talos.config={{ .ProvisionerURL }}/files/{{.Param \"talos/role\"}}.yaml"

This is why we also include a params.yaml in the example directory to make sure the role is set to one of the following:

- controlplane

- worker

The {{.Param \"talos/role\"}} then gets populated with one of the above roles.

Boot the Machines

In the UI of Digital Rebar you need to select the machines you want to provision. Once selected, you need to assign the following:

- Profile

- Workflow

This will provision the Stage and Bootenv with the talos values. Once this is done, you can boot the machine.

Bootstrap Etcd

To configure talosctl we will need the first control plane node’s IP:

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

1.1.2 - Equinix Metal

You can create a Talos Linux cluster on Equinix Metal in a variety of ways, such as through the EM web UI, the metal command line tool, or through PXE booting.

Talos Linux is a supported OS install option on Equinix Metal, so it’s an easy process.

Regardless of the method, the process is:

- Create a DNS entry for your Kubernetes endpoint.

- Generate the configurations using talosctl.

- Provision your machines on Equinix Metal.

- Push the configurations to your servers (if not done as part of the machine provisioning).

- Configure your Kubernetes endpoint to point to the newly created control plane nodes.

- Bootstrap the cluster.

Define the Kubernetes Endpoint

There are a variety of ways to create an HA endpoint for the Kubernetes cluster. Some of the ways are:

- DNS

- Load Balancer

- BGP

Whatever way is chosen, it should result in an IP address/DNS name that routes traffic to all the control plane nodes. We do not know the control plane node IP addresses at this stage, but we should define the endpoint DNS entry so that we can use it in creating the cluster configuration. After the nodes are provisioned, we can use their addresses to create the endpoint A records, or bind them to the load balancer, etc.

Create the Machine Configuration Files

Generating Configurations

Using the DNS name of the loadbalancer defined above, generate the base configuration files for the Talos machines:

$ talosctl gen config talos-k8s-em-tutorial https://<load balancer IP or DNS>:<port>

created controlplane.yaml

created worker.yaml

created talosconfig

The port used above should be 6443, unless your load balancer maps a different port to port 6443 on the control plane nodes.

Validate the Configuration Files

talosctl validate --config controlplane.yaml --mode metal

talosctl validate --config worker.yaml --mode metal

Note: Validation of the install disk could potentially fail as validation is performed on your local machine and the specified disk may not exist.

Passing in the configuration as User Data

You can use the metadata service provided by Equinix Metal to pass in the machine’s configuration. It is required to add a shebang to the top of the configuration file.

The convention we use is #!talos.
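A small sketch of adding that first line without duplicating it on repeated runs is shown below. ensure_talos_shebang is a hypothetical helper name, and the demo operates on a throwaway file standing in for controlplane.yaml.

```shell
#!/usr/bin/env bash
# Prepend "#!talos" to a config file unless it is already the first line.
# ensure_talos_shebang is a hypothetical helper, not part of talosctl.
ensure_talos_shebang() {
  local file="$1" tmp
  # Nothing to do if the marker is already in place.
  if [ "$(head -n 1 "$file")" = '#!talos' ]; then
    return 0
  fi
  tmp="$(mktemp)"
  { printf '#!talos\n'; cat "$file"; } > "$tmp" && mv "$tmp" "$file"
}

# Demo on a temporary file; in practice, pass controlplane.yaml or worker.yaml.
demo="$(mktemp)"
printf 'version: v1alpha1\n' > "$demo"
ensure_talos_shebang "$demo"
ensure_talos_shebang "$demo"   # second call is a no-op
head -n 2 "$demo"
```

Making the helper idempotent means it can safely be part of a provisioning script that runs more than once.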

Provision the machines in Equinix Metal

Using the Equinix Metal UI

Simply select the location and type of machines in the Equinix Metal web interface.

Select Talos as the Operating System, then select the number of servers to create, and name them (in lowercase only).

Under optional settings, you can paste in the contents of the controlplane.yaml generated above (ensuring you add a first line of #!talos).

You can repeat this process to create machines of different types for control plane and worker nodes (although you would pass in worker.yaml for the worker nodes, as user data).

If you did not pass in the machine configuration as User Data, you need to provide it to each machine, with the following command:

talosctl apply-config --insecure --nodes <Node IP> --file ./controlplane.yaml

Creating a Cluster via the Equinix Metal CLI

This guide assumes the user has a working API token, and the Equinix Metal CLI installed.

Because Talos Linux is a supported operating system, Talos Linux machines can be provisioned directly via the CLI, using the -O talos_v1 parameter (for Operating System).

Note: Ensure you have prepended #!talos to the controlplane.yaml file.

metal device create \

--project-id $PROJECT_ID \

--facility $FACILITY \

--operating-system "talos_v1" \

--plan $PLAN \

--hostname $HOSTNAME \

--userdata-file controlplane.yaml

e.g. metal device create -p <projectID> -f da11 -O talos_v1 -P c3.small.x86 -H steve.test.11 --userdata-file ./controlplane.yaml

Repeat this to create each control plane node desired: there should usually be 3 for an HA cluster.

Network Booting via iPXE

You may install Talos over the network using TFTP and iPXE. You would first need a working TFTP and iPXE server.

In general this requires a Talos kernel vmlinuz and initramfs. These assets can be downloaded from a given release.

PXE Boot Kernel Parameters

The following is a list of kernel parameters required by Talos:

- talos.platform: set this to equinixMetal

- init_on_alloc=1: required by KSPP

- slab_nomerge: required by KSPP

- pti=on: required by KSPP

Create the Control Plane Nodes

metal device create \

--project-id $PROJECT_ID \

--facility $FACILITY \

--ipxe-script-url $PXE_SERVER \

--operating-system "custom_ipxe" \

--plan $PLAN \

--hostname $HOSTNAME \

--userdata-file controlplane.yaml

Note: Repeat this to create each control plane node desired: there should usually be 3 for an HA cluster.

Create the Worker Nodes

metal device create \

--project-id $PROJECT_ID \

--facility $FACILITY \

--ipxe-script-url $PXE_SERVER \

--operating-system "custom_ipxe" \

--plan $PLAN \

--hostname $HOSTNAME \

--userdata-file worker.yaml

Update the Kubernetes endpoint

Now that our control plane nodes have been created, and we know their IP addresses, we can associate them with the Kubernetes endpoint.

Configure your load balancer to route traffic to these nodes, or add A records to your DNS entry for the endpoint, for each control plane node.

e.g.

host endpoint.mydomain.com

endpoint.mydomain.com has address 145.40.90.201

endpoint.mydomain.com has address 147.75.109.71

endpoint.mydomain.com has address 145.40.90.177

Bootstrap Etcd

Set the endpoints and nodes for talosctl:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

This only needs to be issued to one control plane node.

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

1.1.3 - ISO

Talos can be installed on a bare-metal machine using an ISO image.

ISO images for amd64 and arm64 architectures are available on the Talos releases page.

Talos doesn’t install itself to disk when booted from an ISO until the machine configuration is applied.

Please follow the getting started guide for the generic steps on how to install Talos.

Note: If there is already a Talos installation on the disk, the machine will boot into that installation when booting from a Talos ISO. The boot order should prefer disk over ISO, or the ISO should be removed after the installation to make Talos boot from disk.

See kernel parameters reference for the list of kernel parameters supported by Talos.

1.1.4 - Matchbox

Creating a Cluster

In this guide we will create an HA Kubernetes cluster with 3 worker nodes. We assume an existing load balancer, matchbox deployment, and some familiarity with iPXE.

We leave it up to the user to decide if they would like to use static networking, or DHCP. The setup and configuration of DHCP will not be covered.

Create the Machine Configuration Files

Generating Base Configurations

Using the DNS name of the load balancer, generate the base configuration files for the Talos machines:

$ talosctl gen config talos-k8s-metal-tutorial https://<load balancer IP or DNS>:<port>

created controlplane.yaml

created worker.yaml

created talosconfig

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode metal

controlplane.yaml is valid for metal mode

$ talosctl validate --config worker.yaml --mode metal

worker.yaml is valid for metal mode

Publishing the Machine Configuration Files

In bare-metal setups it is up to the user to provide the configuration files over HTTP(S).

A special kernel parameter (talos.config) must be used to inform Talos about where it should retrieve its configuration file.

To keep things simple we will place controlplane.yaml, and worker.yaml into Matchbox’s assets directory.

This directory is automatically served by Matchbox.

Create the Matchbox Configuration Files

The profiles we will create will reference vmlinuz, and initramfs.xz.

Download these files from the release of your choice, and place them in /var/lib/matchbox/assets.

Profiles

Control Plane Nodes

{

"id": "control-plane",

"name": "control-plane",

"boot": {

"kernel": "/assets/vmlinuz",

"initrd": ["/assets/initramfs.xz"],

"args": [

"initrd=initramfs.xz",

"init_on_alloc=1",

"slab_nomerge",

"pti=on",

"console=tty0",

"console=ttyS0",

"printk.devkmsg=on",

"talos.platform=metal",

"talos.config=http://matchbox.talos.dev/assets/controlplane.yaml"

]

}

}

Note: Be sure to change http://matchbox.talos.dev to the endpoint of your matchbox server.

Worker Nodes

{

"id": "default",

"name": "default",

"boot": {

"kernel": "/assets/vmlinuz",

"initrd": ["/assets/initramfs.xz"],

"args": [

"initrd=initramfs.xz",

"init_on_alloc=1",

"slab_nomerge",

"pti=on",

"console=tty0",

"console=ttyS0",

"printk.devkmsg=on",

"talos.platform=metal",

"talos.config=http://matchbox.talos.dev/assets/worker.yaml"

]

}

}

Groups

Now, create the following groups, and ensure that the selectors are accurate for your specific setup.

{

"id": "control-plane-1",

"name": "control-plane-1",

"profile": "control-plane",

"selector": {

...

}

}

{

"id": "control-plane-2",

"name": "control-plane-2",

"profile": "control-plane",

"selector": {

...

}

}

{

"id": "control-plane-3",

"name": "control-plane-3",

"profile": "control-plane",

"selector": {

...

}

}

{

"id": "default",

"name": "default",

"profile": "default"

}

Boot the Machines

Now that we have our configuration files in place, boot all the machines. Talos will come up on each machine, grab its configuration file, and bootstrap itself.

Bootstrap Etcd

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

1.1.5 - Network Configuration

By default, Talos will run a DHCP client on all interfaces which have a link, and that might be enough in most cases. If some advanced network configuration is required, it can be done via the machine configuration file.

Sometimes, however, network configuration has to be applied even before the machine configuration can be fetched from the network.

Kernel Command Line

Talos supports some kernel command line parameters to configure network before the machine configuration is fetched.

Note: Kernel command line parameters are not persisted after Talos installation, so proper network configuration should be done via the machine configuration.

Address, default gateway and DNS servers can be configured via ip= kernel command line parameter:

ip=172.20.0.2::172.20.0.1:255.255.255.0::eth0.100:::::

Bonding can be configured via bond= kernel command line parameter:

bond=bond0:eth0,eth1:balance-rr

VLANs can be configured via vlan= kernel command line parameter:

vlan=eth0.100:eth0

See kernel parameters reference for more details.

Platform Network Configuration

Some platforms (e.g. AWS, Google Cloud, etc.) have their own network configuration mechanisms, which can be used to perform the initial network configuration.

There is no such mechanism for bare-metal platforms, so Talos provides a way to use platform network config on the metal platform to submit the initial network configuration.

The platform network configuration is a YAML document which contains resource specifications for various network resources.

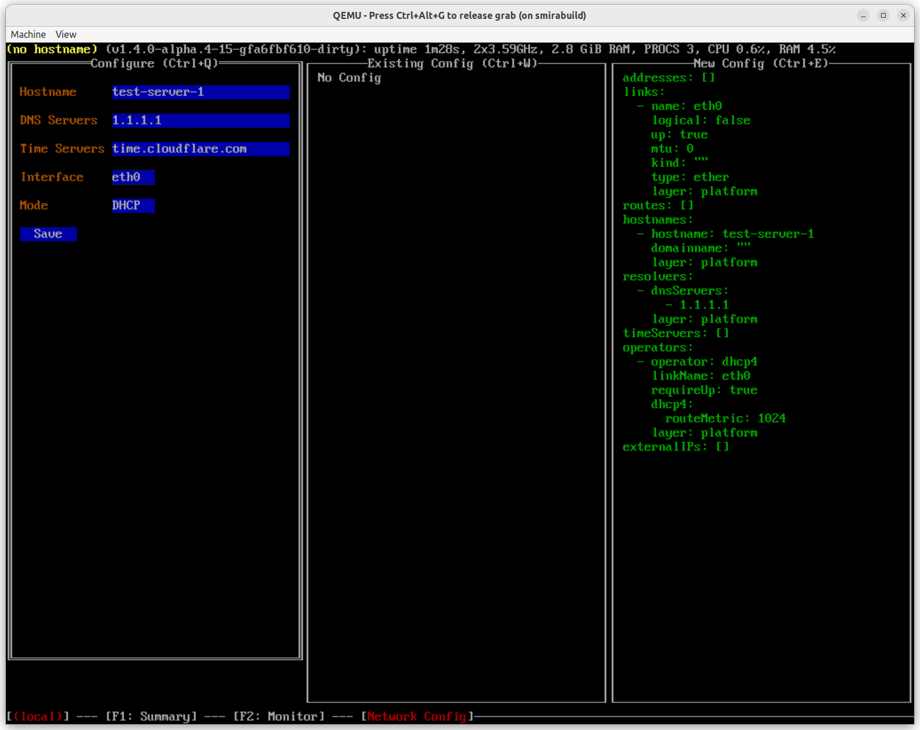

For the metal platform, the interactive dashboard can be used to edit the platform network configuration.

The current value of the platform network configuration can be retrieved using the MetaKeys resource (key 0xa):

talosctl get meta 0xa

The platform network configuration can be updated using the talosctl meta command for the running node:

talosctl meta write 0xa '{"externalIPs": ["1.2.3.4"]}'

talosctl meta delete 0xa

The initial platform network configuration for the metal platform can also be included in the generated Talos image:

docker run --rm -i ghcr.io/siderolabs/imager:v1.4.8 iso --arch amd64 --tar-to-stdout --meta 0xa='{...}' | tar xz

docker run --rm -i --privileged ghcr.io/siderolabs/imager:v1.4.8 image --platform metal --arch amd64 --tar-to-stdout --meta 0xa='{...}' | tar xz

The platform network configuration gets merged with other sources of network configuration; the details can be found in the network resources guide.

1.1.6 - PXE

Talos can be installed on bare-metal using a PXE service. There are two more detailed guides for PXE booting using Matchbox and Digital Rebar.

This guide describes generic steps for PXE booting Talos on bare-metal.

First, download the vmlinuz and initramfs assets from the Talos releases page.

Set up the machines to PXE boot from the network (usually by setting the boot order in the BIOS).

There might be options specific to the hardware being used, booting in BIOS or UEFI mode, using iPXE, etc.

Talos requires the following kernel parameters to be set on the initial boot:

- talos.platform=metal

- slab_nomerge

- pti=on

When booted from the network without machine configuration, Talos will start in maintenance mode.

Please follow the getting started guide for the generic steps on how to install Talos.

See kernel parameters reference for the list of kernel parameters supported by Talos.

Note: If there is already a Talos installation on the disk, the machine will boot into that installation when booting from network. The boot order should prefer disk over network.

Talos can automatically fetch the machine configuration from the network on the initial boot using talos.config kernel parameter.

A metadata service (HTTP service) can be implemented to deliver customized configuration to each node for example by using the MAC address of the node:

talos.config=https://metadata.service/talos/config?mac=${mac}

Note: The talos.config kernel parameter supports other substitution variables, see kernel parameters reference for the full list.

1.1.7 - Sidero Metal

Sidero Metal is a project created by the Talos team that provides a bare metal installer for Cluster API, and that has native support for Talos Linux. It can be easily installed using clusterctl. The best way to get started with Sidero Metal is to visit the website.

1.2 - Virtualized Platforms

1.2.1 - Hyper-V

Prerequisites

- Download the latest talos-amd64.iso ISO from the GitHub releases page

- Create a New-TalosVM folder in any of your PS Module Path folders ($env:PSModulePath -split ';') and save the New-TalosVM.psm1 there

Plan Overview

Here we will create a basic 3 node cluster with a single control-plane node and two worker nodes. The only difference between control plane and worker node is the amount of RAM and an additional storage VHD. This is personal preference and can be configured to your liking.

We are using a VMNamePrefix argument for a VM Name prefix and not the full hostname.

This command will find any existing VM with that prefix and “+1” the highest suffix it finds.

For example, if VMs talos-cp01 and talos-cp02 exist, this will create VMs starting from talos-cp03, depending on NumberOfVMs argument.
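The naming rule described above can be sketched as follows. This is an illustration of the logic only, written in bash rather than PowerShell, and is not the New-TalosVM module's actual implementation. next_vm_name is a hypothetical helper, and the two-digit zero padding is an assumption inferred from the example names.

```shell
#!/usr/bin/env bash
# Given a prefix and a list of existing VM names, print the next name:
# the highest numeric suffix found for that prefix, plus one.
# next_vm_name is a hypothetical helper used only in this sketch.
next_vm_name() {
  local prefix="$1"; shift
  local max=0 name suffix
  for name in "$@"; do
    case "$name" in
      "$prefix"[0-9]*)
        suffix="${name#"$prefix"}"
        # Ignore names whose remainder is not purely numeric.
        case "$suffix" in *[!0-9]*) continue ;; esac
        # Force base 10 so that suffixes like 08 are not read as octal.
        if [ "$((10#$suffix))" -gt "$max" ]; then
          max=$((10#$suffix))
        fi
        ;;
    esac
  done
  printf '%s%02d\n' "$prefix" "$((max + 1))"
}

next_vm_name talos-cp talos-cp01 talos-cp02
```

With NumberOfVMs greater than 1, the same rule would simply continue counting up from that starting name.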

Setup a Control Plane Node

Use the following command to create a single control plane node:

New-TalosVM -VMNamePrefix talos-cp -CPUCount 2 -StartupMemory 4GB -SwitchName LAB -TalosISOPath C:\ISO\talos-amd64.iso -NumberOfVMs 1 -VMDestinationBasePath 'D:\Virtual Machines\Test VMs\Talos'

This will create talos-cp01 VM and power it on.

Setup Worker Nodes

Use the following command to create 2 worker nodes:

New-TalosVM -VMNamePrefix talos-worker -CPUCount 4 -StartupMemory 8GB -SwitchName LAB -TalosISOPath C:\ISO\talos-amd64.iso -NumberOfVMs 2 -VMDestinationBasePath 'D:\Virtual Machines\Test VMs\Talos' -StorageVHDSize 50GB

This will create two VMs: talos-worker01 and talos-worker02 and attach an additional VHD of 50GB for storage (which in my case will be passed to Mayastor).

Pushing Config to the Nodes

Now that our VMs are ready, find their IP addresses from the console of each VM. With that information, push config to the control plane node with:

# set control plane IP variable

$CONTROL_PLANE_IP='10.10.10.x'

# Generate talos config

talosctl gen config talos-cluster https://$($CONTROL_PLANE_IP):6443 --output-dir .

# Apply config to control plane node

talosctl apply-config --insecure --nodes $CONTROL_PLANE_IP --file .\controlplane.yaml

Pushing Config to Worker Nodes

Similarly, for the workers:

talosctl apply-config --insecure --nodes 10.10.10.x --file .\worker.yaml

Apply the config to both nodes.

Bootstrap Cluster

Now that our nodes are ready, we are ready to bootstrap the Kubernetes cluster.

# Use the following commands to set the node and endpoint permanently in the config so you don't have to type them every time

talosctl config endpoint $CONTROL_PLANE_IP

talosctl config node $CONTROL_PLANE_IP

# Bootstrap cluster

talosctl bootstrap

# Generate kubeconfig

talosctl kubeconfig .

This will generate the kubeconfig file, which you can use to connect to the cluster.

1.2.2 - KVM

Talos is known to work on KVM.

We don’t yet have a documented guide specific to KVM; however, you can have a look at our Vagrant & Libvirt guide which uses KVM for virtualization.

If you run into any issues, our community can probably help!

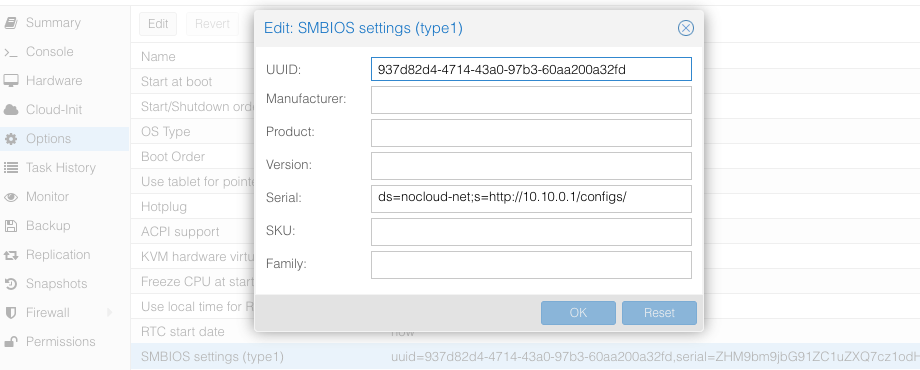

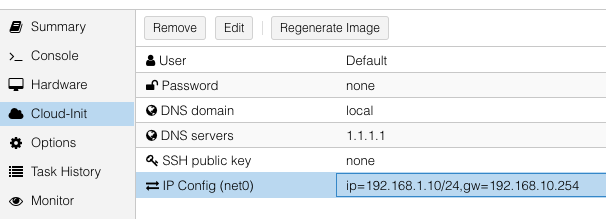

1.2.3 - Proxmox

In this guide we will create a Kubernetes cluster using Proxmox.

Video Walkthrough

To see a live demo of this writeup, visit YouTube here:

Installation

How to Get Proxmox

It is assumed that you have already installed Proxmox onto the server you wish to create Talos VMs on. Visit the Proxmox downloads page if necessary.

Install talosctl

You can download talosctl via

curl -sL https://talos.dev/install | sh

Download ISO Image

In order to install Talos in Proxmox, you will need the ISO image from the Talos release page.

You can download talos-amd64.iso via

github.com/siderolabs/talos/releases

mkdir -p _out/

curl https://github.com/siderolabs/talos/releases/download/<version>/talos-<arch>.iso -L -o _out/talos-<arch>.iso

For example, version v1.4.8 for the linux platform:

mkdir -p _out/

curl https://github.com/siderolabs/talos/releases/download/v1.4.8/talos-amd64.iso -L -o _out/talos-amd64.iso

Upload ISO

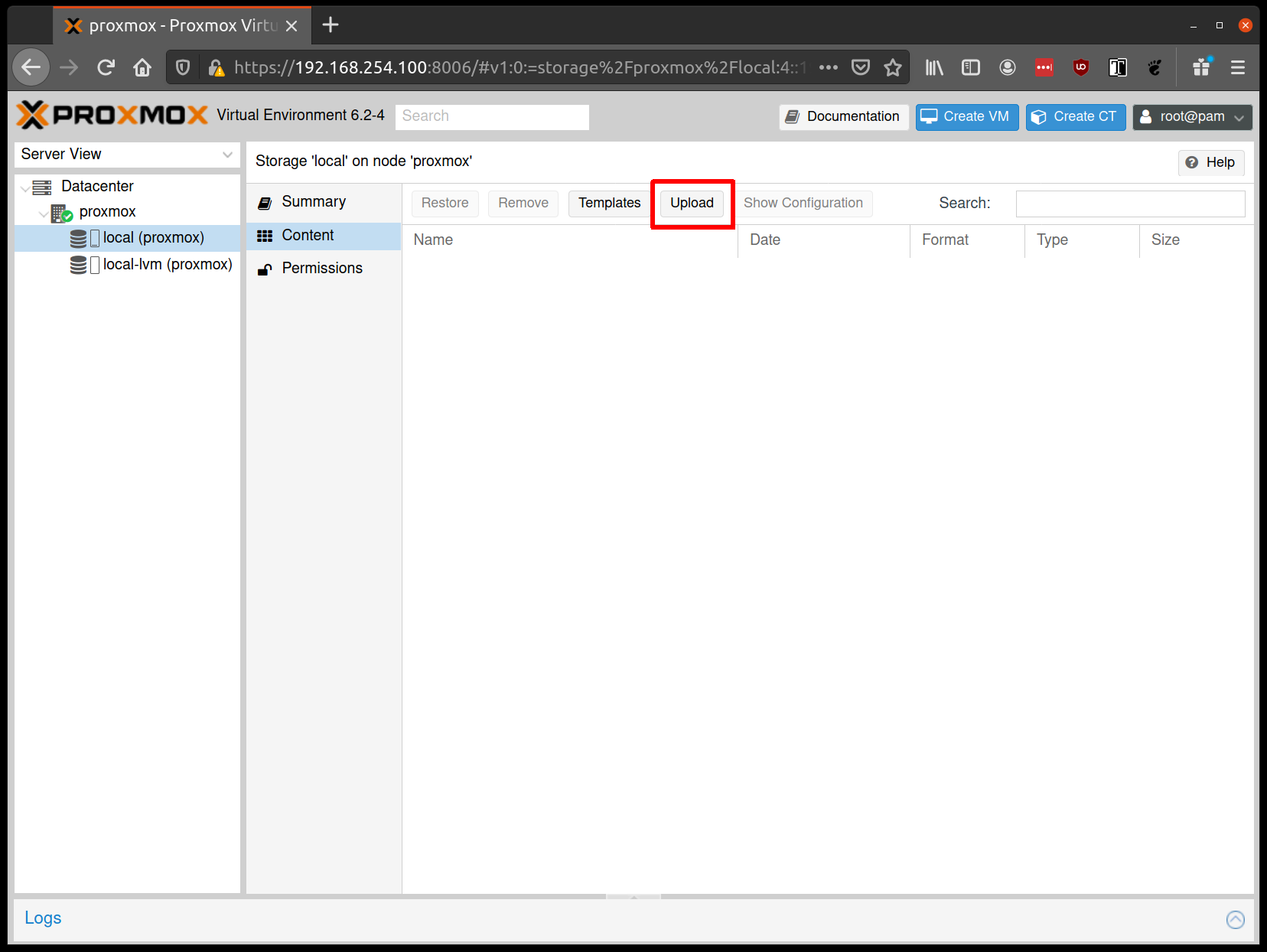

From the Proxmox UI, select the “local” storage and enter the “Content” section. Click the “Upload” button:

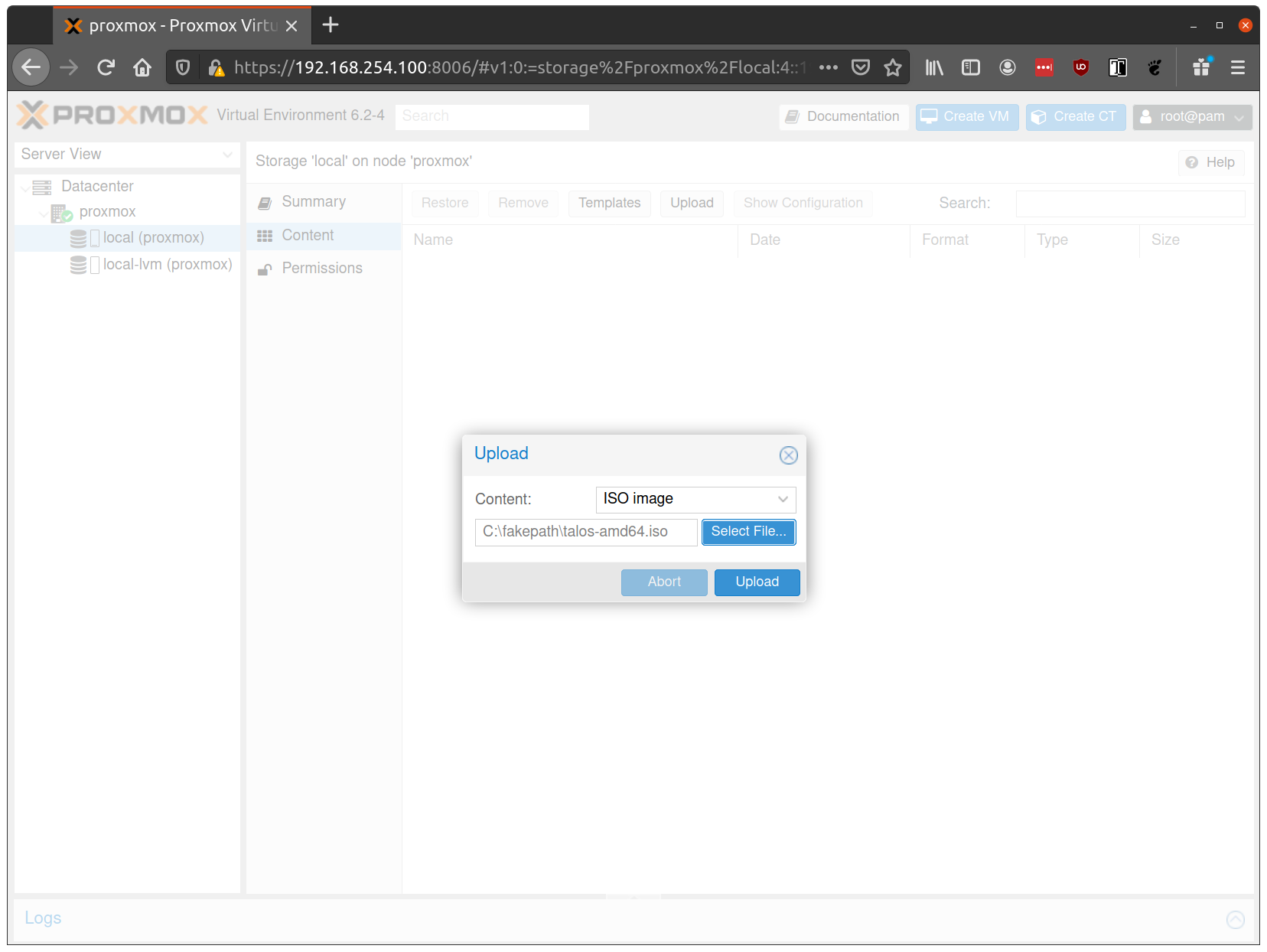

Select the ISO you downloaded previously, then hit “Upload”

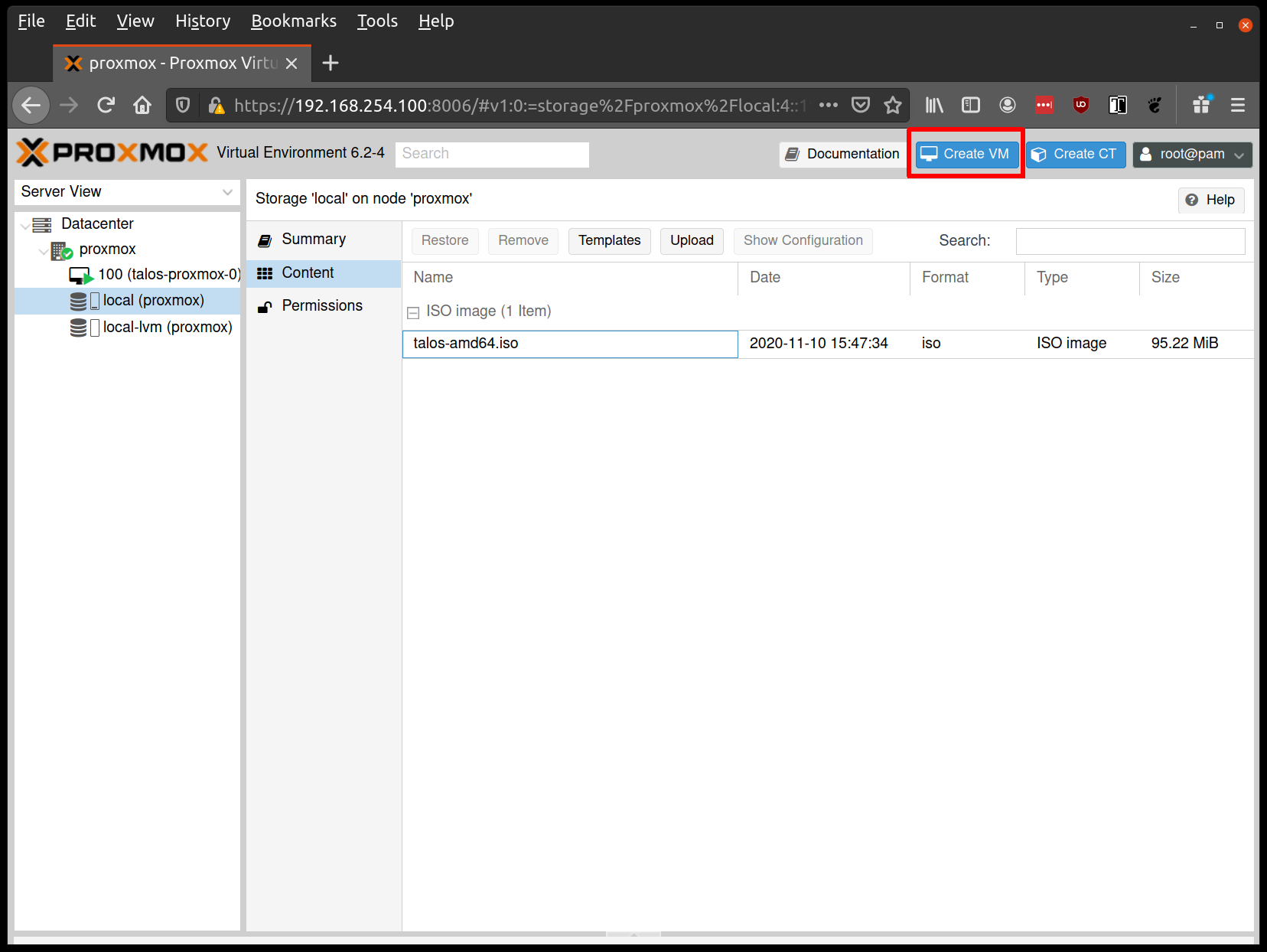

Create VMs

Before starting, familiarise yourself with the system requirements for Talos and assign VM resources accordingly.

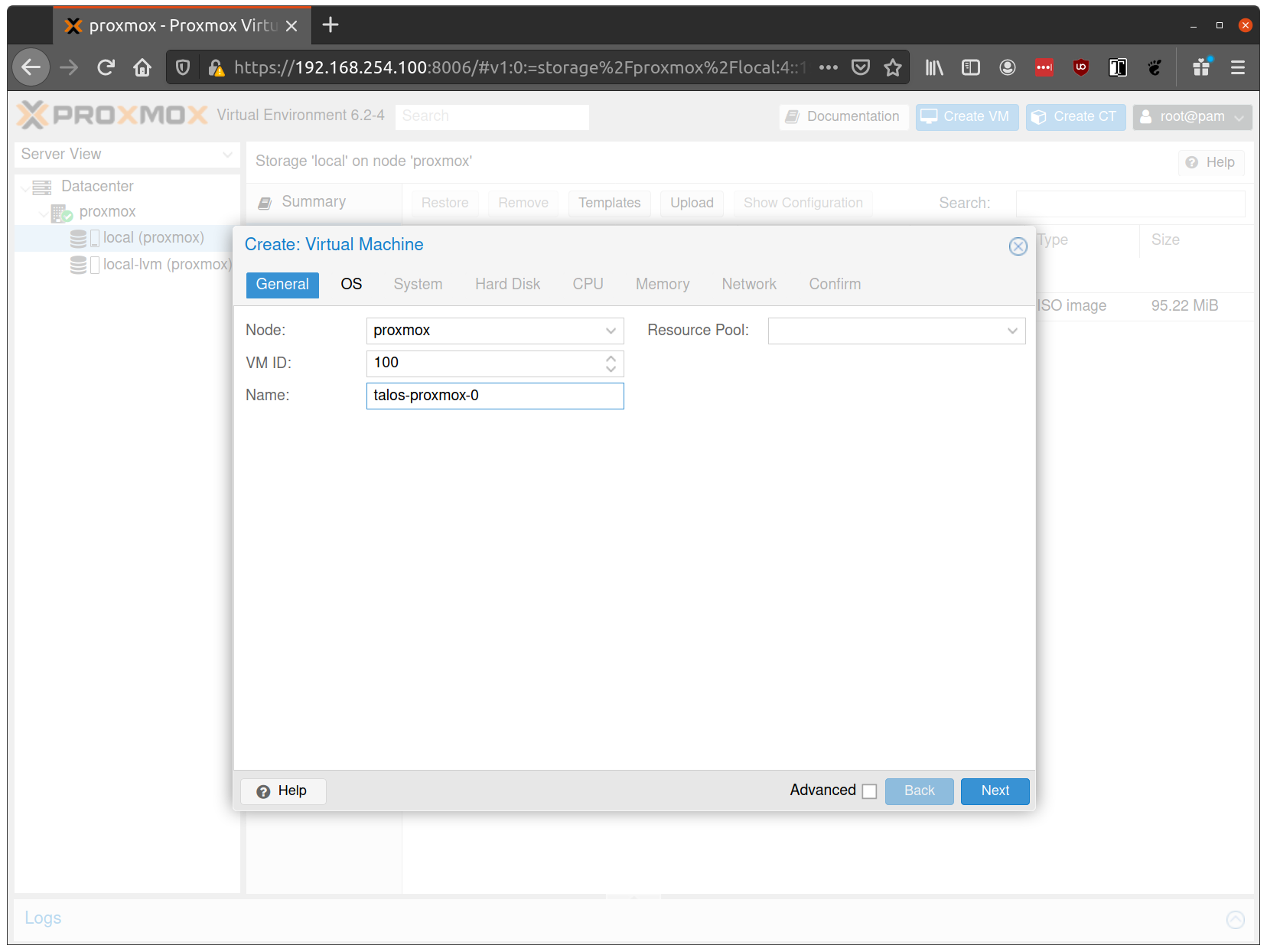

Create a new VM by clicking the “Create VM” button in the Proxmox UI:

Fill out a name for the new VM:

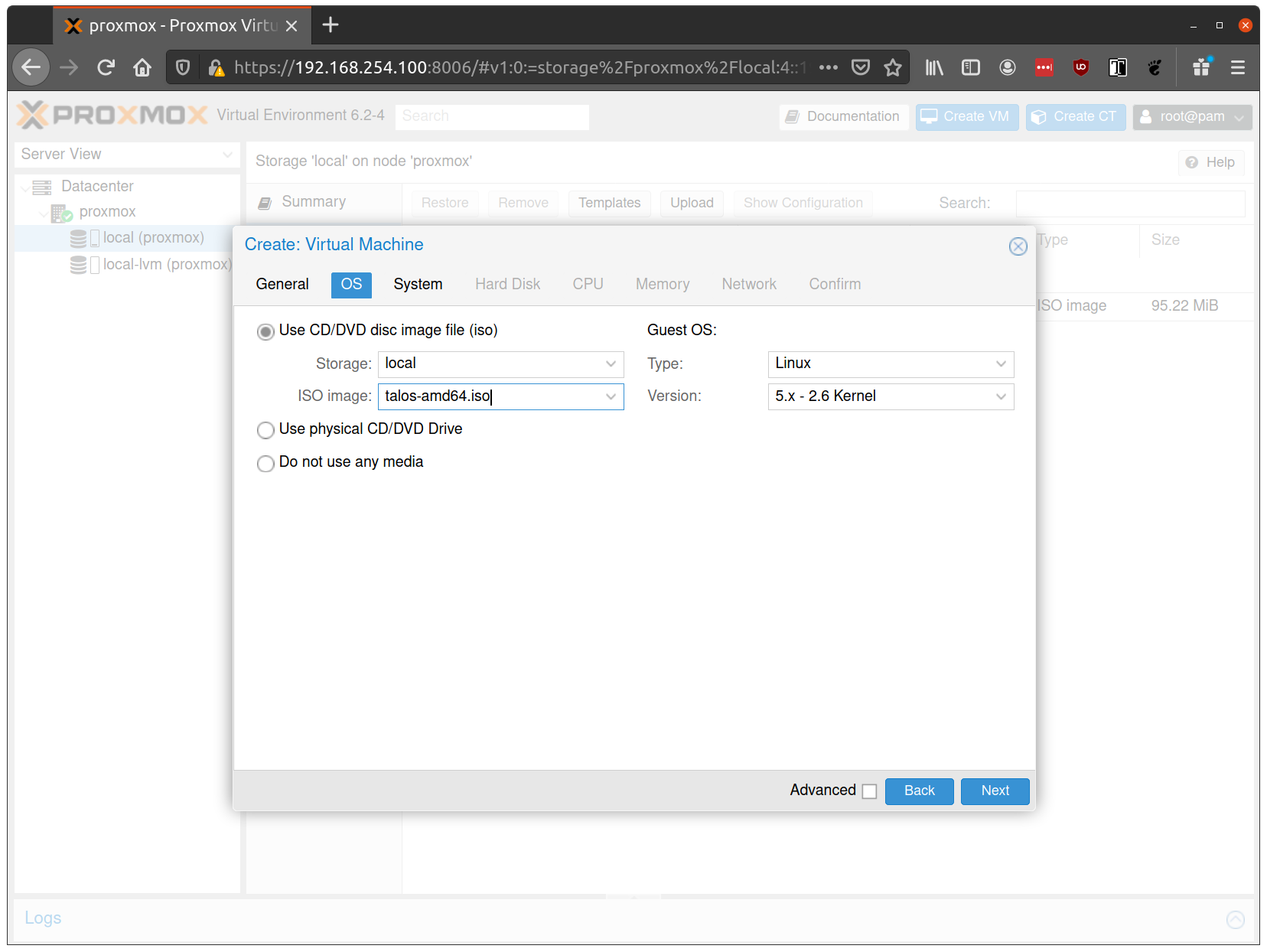

In the OS tab, select the ISO we uploaded earlier:

Keep the defaults set in the “System” tab.

Keep the defaults in the “Hard Disk” tab as well, only changing the size if desired.

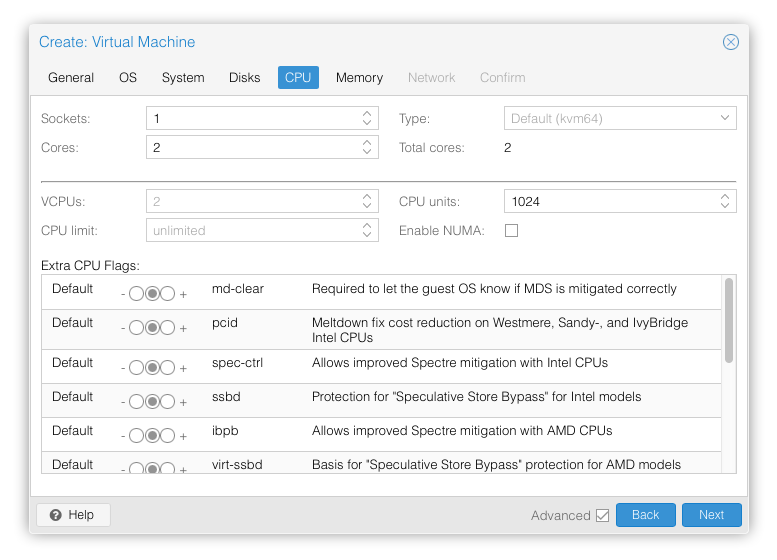

In the “CPU” section, give at least 2 cores to the VM:

Note: As of Talos v1.0 (which requires the x86-64-v2 microarchitecture), prior to Proxmox V8.0, booting with the default Processor Type kvm64 will not work. You can enable the required CPU features after creating the VM by adding the following line in the corresponding /etc/pve/qemu-server/<vmid>.conf file:

args: -cpu kvm64,+cx16,+lahf_lm,+popcnt,+sse3,+ssse3,+sse4.1,+sse4.2

Alternatively, you can set the Processor Type to host if your Proxmox host supports these CPU features; this, however, prevents using live VM migration.

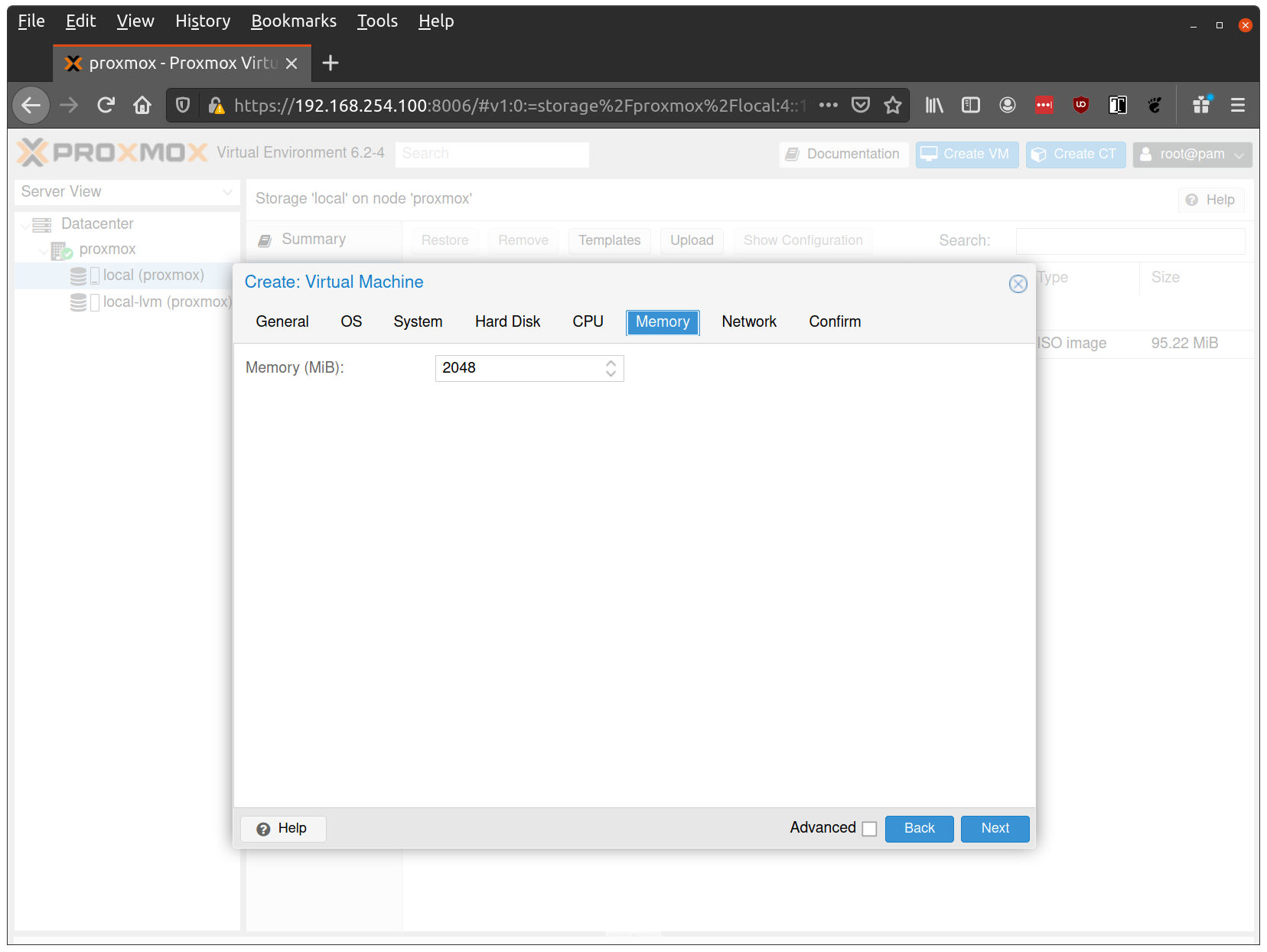

Verify that the RAM is set to at least 2GB:

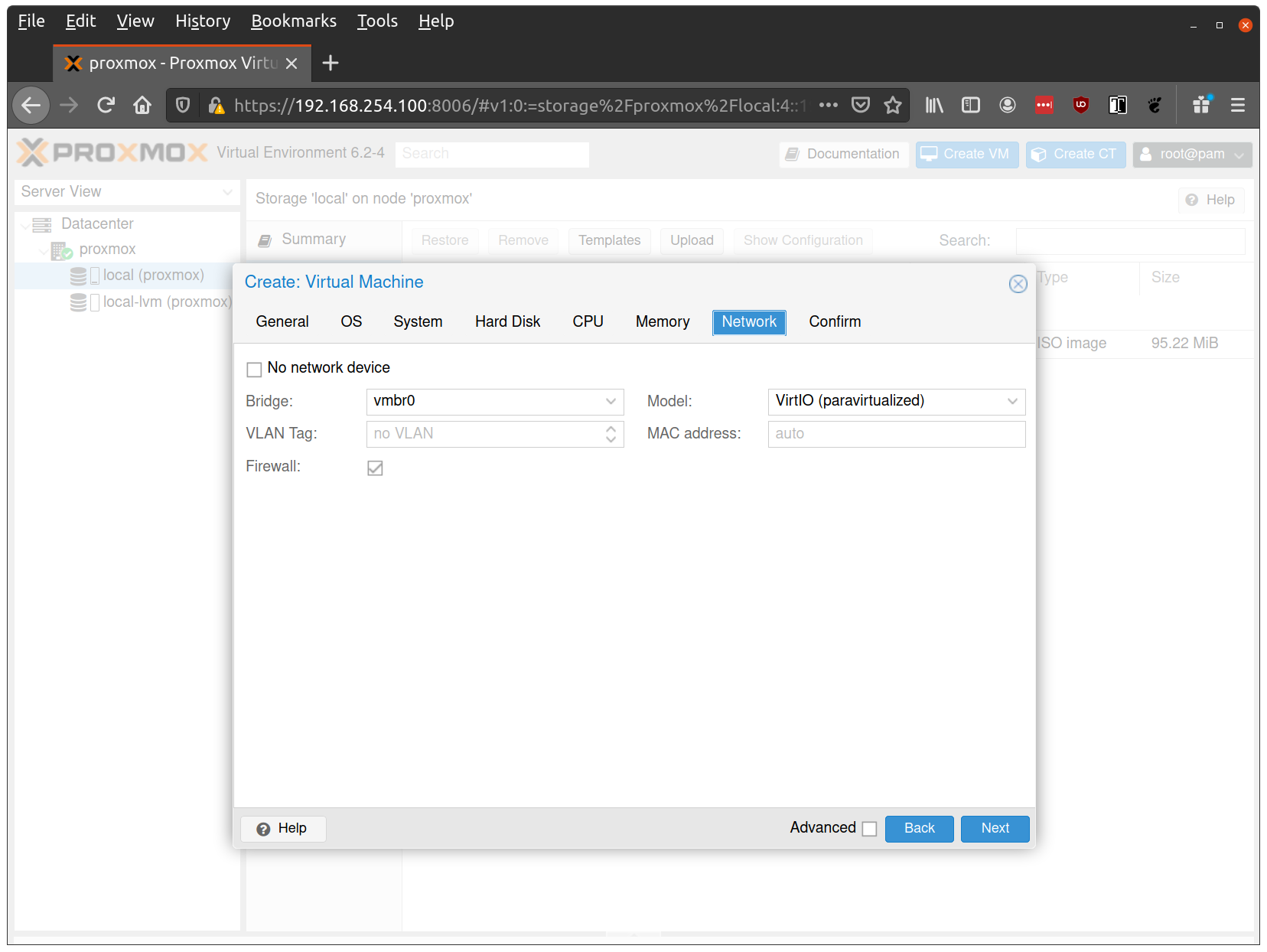

Keep the default values for networking, verifying that the VM is set to come up on the bridge interface:

Finish creating the VM by clicking through the “Confirm” tab and then “Finish”.

Repeat this process for a second VM to use as a worker node. You can also repeat this for additional nodes desired.

Note: Talos doesn’t support memory hot plugging. If creating the VM programmatically, don’t enable memory hotplug on your Talos VMs. Doing so will cause Talos to be unable to see all available memory and have insufficient memory to complete installation of the cluster.

Start Control Plane Node

Once the VMs have been created and updated, start the VM that will be the first control plane node. This VM will boot the ISO image specified earlier and enter “maintenance mode”.

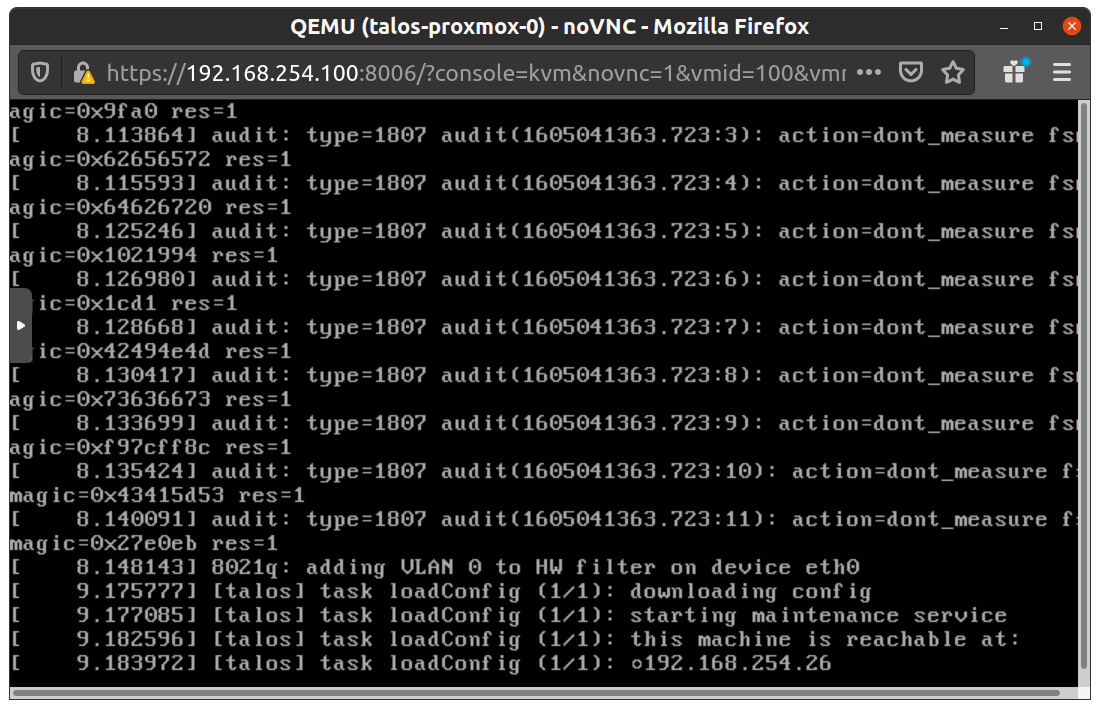

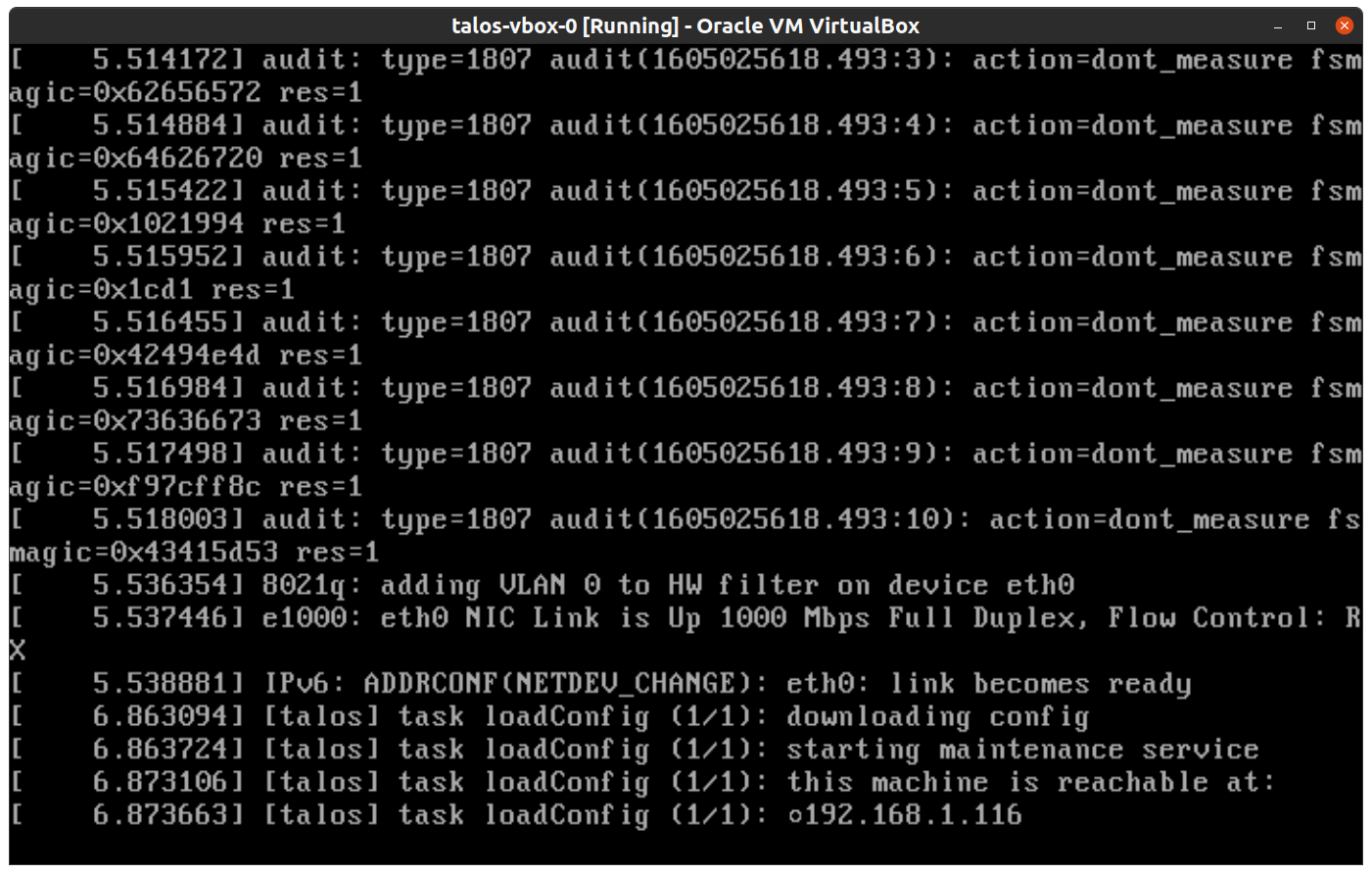

With DHCP server

Once the machine has entered maintenance mode, there will be a console log that details the IP address that the node received.

Take note of this IP address, which will be referred to as $CONTROL_PLANE_IP for the rest of this guide.

If you wish to export this IP as a bash variable, simply issue a command like export CONTROL_PLANE_IP=1.2.3.4.

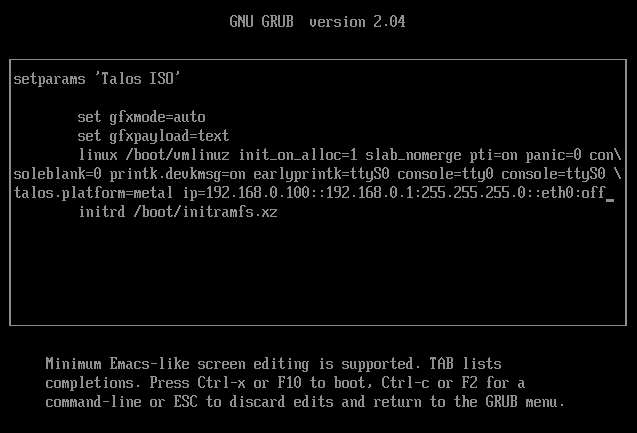

Without DHCP server

To apply the machine configurations in maintenance mode, the VM must have an IP address on the network. Without a DHCP server, you can set it manually at boot time.

Press e at boot time.

And set the IP parameters for the VM.

Format is:

ip=<client-ip>:<srv-ip>:<gw-ip>:<netmask>:<host>:<device>:<autoconf>

For example $CONTROL_PLANE_IP will be 192.168.0.100 and gateway 192.168.0.1

linux /boot/vmlinuz init_on_alloc=1 slab_nomerge pti=on panic=0 consoleblank=0 printk.devkmsg=on earlyprintk=ttyS0 console=tty0 console=ttyS0 talos.platform=metal ip=192.168.0.100::192.168.0.1:255.255.255.0::eth0:off

Then press Ctrl-x or F10
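To avoid miscounting colons when typing the parameter by hand, the ip= value can be assembled from its fields first. The sketch below follows the format given above and leaves the server IP and hostname fields empty, as the example does. build_ip_param is a hypothetical helper, not part of Talos or talosctl.

```shell
#!/usr/bin/env bash
# Assemble the ip= kernel argument in the form
#   ip=<client-ip>:<srv-ip>:<gw-ip>:<netmask>:<host>:<device>:<autoconf>
# build_ip_param is a hypothetical helper used only in this sketch.
build_ip_param() {
  local client_ip="$1" gateway="$2" netmask="$3" device="$4"
  local autoconf="${5:-off}"   # default to "off", i.e. a static address
  # <srv-ip> and <host> are intentionally left empty.
  printf 'ip=%s::%s:%s::%s:%s\n' \
    "$client_ip" "$gateway" "$netmask" "$device" "$autoconf"
}

build_ip_param 192.168.0.100 192.168.0.1 255.255.255.0 eth0
```

The printed value can then be appended to the linux line in the boot editor.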

Generate Machine Configurations

With the IP address above, you can now generate the machine configurations to use for installing Talos and Kubernetes. Issue the following command, updating the output directory, cluster name, and control plane IP as you see fit:

talosctl gen config talos-vbox-cluster https://$CONTROL_PLANE_IP:6443 --output-dir _out

This will create several files in the _out directory: controlplane.yaml, worker.yaml, and talosconfig.

Note: The Talos config by default will install to /dev/sda. Depending on your setup the virtual disk may be mounted differently, e.g. /dev/vda. You can check for disks by running the following command:

talosctl disks --insecure --nodes $CONTROL_PLANE_IP

Update controlplane.yaml and worker.yaml config files to point to the correct disk location.
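If the install disk needs changing, the edit can be scripted along these lines. This is a minimal sketch that assumes the generated files contain an uncommented line of the form "disk: /dev/sda" under machine.install; set_install_disk is a hypothetical helper, and the demo runs against a small stand-in file rather than a real controlplane.yaml.

```shell
#!/usr/bin/env bash
# Rewrite the value of uncommented "disk: /dev/..." lines in a config file.
# set_install_disk is a hypothetical helper, not part of talosctl.
# A backup of the original is kept next to the file with a .bak suffix.
set_install_disk() {
  local file="$1" disk="$2"
  sed -i.bak -E \
    "s|^([[:space:]]*disk:[[:space:]]*)/dev/[^[:space:]#]+|\1${disk}|" \
    "$file"
}

# Demo on a stand-in file; in practice, pass _out/controlplane.yaml
# and _out/worker.yaml.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
machine:
  install:
    disk: /dev/sda # The disk used for installations.
EOF
set_install_disk "$cfg" /dev/vda
grep 'disk:' "$cfg"
```

Review the result before applying it, since any other uncommented disk: line holding a /dev path would be rewritten as well.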

Create Control Plane Node

Using the controlplane.yaml generated above, you can now apply this config using talosctl.

Issue:

talosctl apply-config --insecure --nodes $CONTROL_PLANE_IP --file _out/controlplane.yaml

You should now see some action in the Proxmox console for this VM. Talos will be installed to disk, the VM will reboot, and then Talos will configure the Kubernetes control plane on this VM.

Note: This process can be repeated multiple times to create an HA control plane.

Create Worker Node

Create at least a single worker node using a process similar to the control plane creation above.

Start the worker node VM and wait for it to enter “maintenance mode”.

Take note of the worker node’s IP address, which will be referred to as $WORKER_IP.

Issue:

talosctl apply-config --insecure --nodes $WORKER_IP --file _out/worker.yaml

Note: This process can be repeated multiple times to add additional workers.

Using the Cluster

Once the cluster is available, you can make use of talosctl and kubectl to interact with the cluster.

For example, to view current running containers, run talosctl containers for a list of containers in the system namespace, or talosctl containers -k for the k8s.io namespace.

To view the logs of a container, use talosctl logs <container> or talosctl logs -k <container>.

First, configure talosctl to talk to your control plane node by issuing the following, updating paths and IPs as necessary:

export TALOSCONFIG="_out/talosconfig"

talosctl config endpoint $CONTROL_PLANE_IP

talosctl config node $CONTROL_PLANE_IP

Bootstrap Etcd

talosctl bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl kubeconfig .

Cleaning Up

To clean up, simply stop and delete the virtual machines from the Proxmox UI.

1.2.4 - Vagrant & Libvirt

Prerequisites

- Linux OS

- Vagrant installed

- vagrant-libvirt plugin installed

- talosctl installed

- kubectl installed

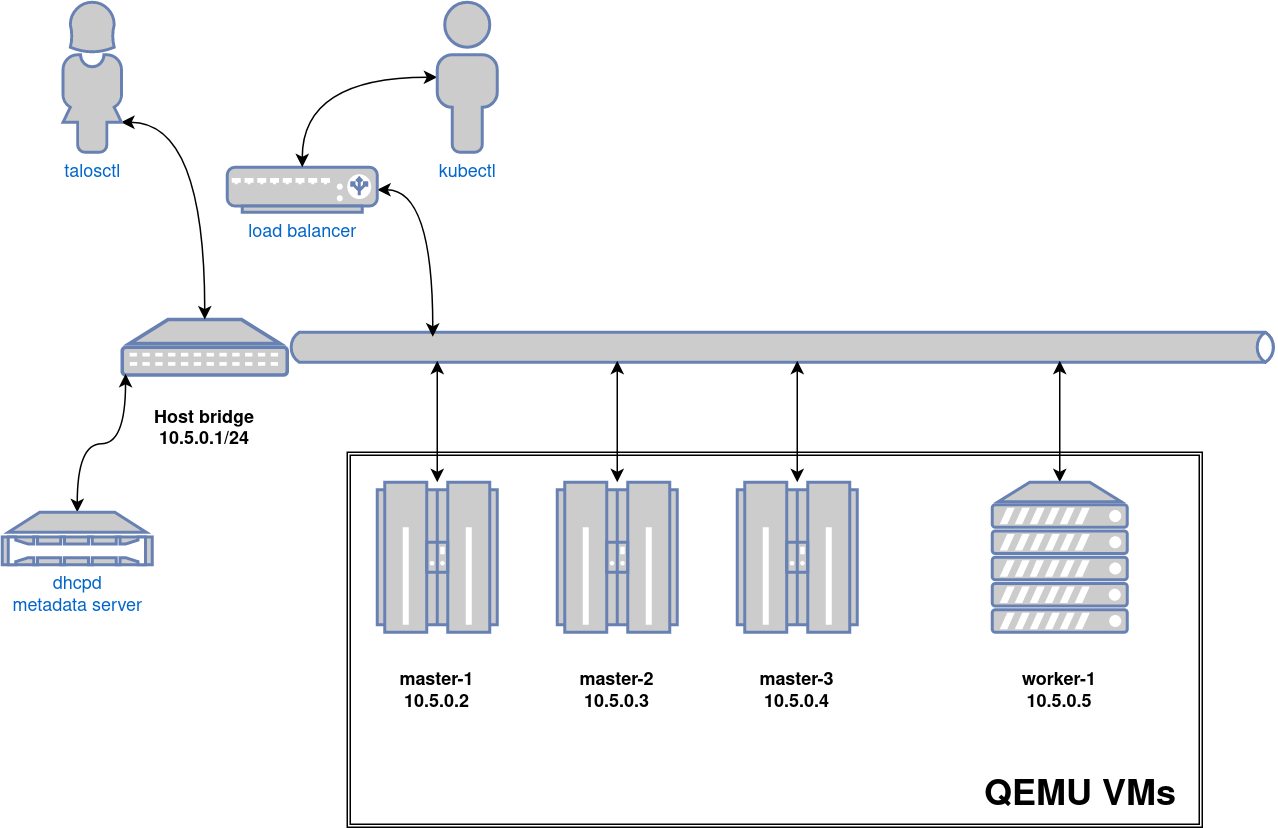

Overview

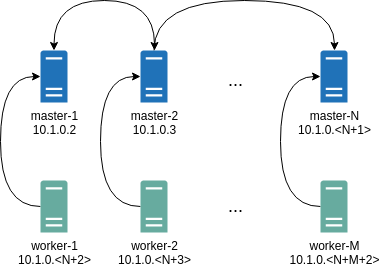

We will use Vagrant and its libvirt plugin to create a KVM-based cluster with 3 control plane nodes and 1 worker node.

For this, we will mount the Talos ISO into the VMs using a virtual CD-ROM, and configure the VMs to attempt to boot from the disk first, falling back to the CD-ROM.

We will also configure a virtual IP address on Talos to achieve high-availability on kube-apiserver.

Preparing the environment

First, we download the latest talos-amd64.iso ISO from GitHub releases into the /tmp directory.

wget --timestamping https://github.com/siderolabs/talos/releases/download/v1.4.8/talos-amd64.iso -O /tmp/talos-amd64.iso

Create a Vagrantfile with the following contents:

Vagrant.configure("2") do |config|

config.vm.define "control-plane-node-1" do |vm|

vm.vm.provider :libvirt do |domain|

domain.cpus = 2

domain.memory = 2048

domain.serial :type => "file", :source => {:path => "/tmp/control-plane-node-1.log"}

domain.storage :file, :device => :cdrom, :path => "/tmp/talos-amd64.iso"

domain.storage :file, :size => '4G', :type => 'raw'

domain.boot 'hd'

domain.boot 'cdrom'

end

end

config.vm.define "control-plane-node-2" do |vm|

vm.vm.provider :libvirt do |domain|

domain.cpus = 2

domain.memory = 2048

domain.serial :type => "file", :source => {:path => "/tmp/control-plane-node-2.log"}

domain.storage :file, :device => :cdrom, :path => "/tmp/talos-amd64.iso"

domain.storage :file, :size => '4G', :type => 'raw'

domain.boot 'hd'

domain.boot 'cdrom'

end

end

config.vm.define "control-plane-node-3" do |vm|

vm.vm.provider :libvirt do |domain|

domain.cpus = 2

domain.memory = 2048

domain.serial :type => "file", :source => {:path => "/tmp/control-plane-node-3.log"}

domain.storage :file, :device => :cdrom, :path => "/tmp/talos-amd64.iso"

domain.storage :file, :size => '4G', :type => 'raw'

domain.boot 'hd'

domain.boot 'cdrom'

end

end

config.vm.define "worker-node-1" do |vm|

vm.vm.provider :libvirt do |domain|

domain.cpus = 1

domain.memory = 1024

domain.serial :type => "file", :source => {:path => "/tmp/worker-node-1.log"}

domain.storage :file, :device => :cdrom, :path => "/tmp/talos-amd64.iso"

domain.storage :file, :size => '4G', :type => 'raw'

domain.boot 'hd'

domain.boot 'cdrom'

end

end

end

Bring up the nodes

Check the status of vagrant VMs:

vagrant status

You should see the VMs in “not created” state:

Current machine states:

control-plane-node-1 not created (libvirt)

control-plane-node-2 not created (libvirt)

control-plane-node-3 not created (libvirt)

worker-node-1 not created (libvirt)

Bring up the vagrant environment:

vagrant up --provider=libvirt

Check the status again:

vagrant status

Now you should see the VMs in “running” state:

Current machine states:

control-plane-node-1 running (libvirt)

control-plane-node-2 running (libvirt)

control-plane-node-3 running (libvirt)

worker-node-1 running (libvirt)

Find out the IP addresses assigned by the libvirt DHCP by running:

virsh list | grep vagrant | awk '{print $2}' | xargs -t -L1 virsh domifaddr

Output will look like the following:

virsh domifaddr vagrant_control-plane-node-2

Name MAC address Protocol Address

-------------------------------------------------------------------------------

vnet0 52:54:00:f9:10:e5 ipv4 192.168.121.119/24

virsh domifaddr vagrant_control-plane-node-1

Name MAC address Protocol Address

-------------------------------------------------------------------------------

vnet1 52:54:00:0f:ae:59 ipv4 192.168.121.203/24

virsh domifaddr vagrant_worker-node-1

Name MAC address Protocol Address

-------------------------------------------------------------------------------

vnet2 52:54:00:6f:28:95 ipv4 192.168.121.69/24

virsh domifaddr vagrant_control-plane-node-3

Name MAC address Protocol Address

-------------------------------------------------------------------------------

vnet3 52:54:00:03:45:10 ipv4 192.168.121.125/24

Our control plane nodes have the IPs: 192.168.121.203, 192.168.121.119, 192.168.121.125 and the worker node has the IP 192.168.121.69.
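The addresses can also be pulled out of the virsh domifaddr output with a small filter instead of being read off by eye. This is a minimal sketch that assumes the four-column layout shown above; extract_ipv4 is a hypothetical helper name, not part of libvirt or Talos.

```shell
#!/bin/sh
# Print the bare IPv4 addresses found in `virsh domifaddr` output read from stdin.
# Hypothetical helper: assumes data rows have "ipv4" in column 3 and
# ADDRESS/PREFIX in column 4, as in the sample output above.
extract_ipv4() {
  awk '$3 == "ipv4" { split($4, parts, "/"); print parts[1] }'
}

# Example (needs libvirt, so it is left commented out):
# virsh domifaddr vagrant_control-plane-node-1 | extract_ipv4
```

Header and separator rows are skipped because their third column is not ipv4.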

Now you should be able to interact with Talos nodes that are in maintenance mode:

talosctl -n 192.168.121.203 disks --insecure

Sample output:

DEV MODEL SERIAL TYPE UUID WWID MODALIAS NAME SIZE BUS_PATH

/dev/vda - - HDD - - virtio:d00000002v00001AF4 - 8.6 GB /pci0000:00/0000:00:03.0/virtio0/

Installing Talos

Pick an endpoint IP that is in the vagrant-libvirt subnet but not used by any node, for example 192.168.121.100.

Generate a machine configuration:

talosctl gen config my-cluster https://192.168.121.100:6443 --install-disk /dev/vda

Edit controlplane.yaml to add the virtual IP you picked to a network interface under .machine.network.interfaces, for example:

machine:

network:

interfaces:

- interface: eth0

dhcp: true

vip:

ip: 192.168.121.100
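Before applying the configuration, it may be worth confirming that the chosen virtual IP does not collide with an address libvirt already handed out. The sketch below only compares the candidate against a list of known node IPs (here, the ones from the sample output); vip_in_use is a hypothetical helper, and the check says nothing about other hosts on the subnet.

```shell
#!/bin/sh
# Succeeds (exit 0) if the candidate VIP ($1) equals one of the node IPs that follow it.
# Hypothetical helper; the function body runs in a subshell so its variables stay local.
vip_in_use() (
  vip=$1
  shift
  for ip in "$@"; do
    [ "$ip" = "$vip" ] && exit 0
  done
  exit 1
)

if vip_in_use 192.168.121.100 192.168.121.203 192.168.121.119 192.168.121.125 192.168.121.69; then
  echo "VIP collides with a node address" >&2
else
  echo "VIP is not assigned to any listed node"
fi
```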

Apply the configuration to the initial control plane node:

talosctl -n 192.168.121.203 apply-config --insecure --file controlplane.yaml

You can tail the logs of the node:

sudo tail -f /tmp/control-plane-node-1.log

Set up your shell to use the generated talosconfig and configure its endpoints (use the IPs of the control plane nodes):

export TALOSCONFIG=$(realpath ./talosconfig)

talosctl config endpoint 192.168.121.203 192.168.121.119 192.168.121.125

Bootstrap the Kubernetes cluster from the initial control plane node:

talosctl -n 192.168.121.203 bootstrap

Finally, apply the machine configurations to the remaining nodes:

talosctl -n 192.168.121.119 apply-config --insecure --file controlplane.yaml

talosctl -n 192.168.121.125 apply-config --insecure --file controlplane.yaml

talosctl -n 192.168.121.69 apply-config --insecure --file worker.yaml

After a while, you should see that all the members have joined:

talosctl -n 192.168.121.203 get members

The output will be like the following:

NODE NAMESPACE TYPE ID VERSION HOSTNAME MACHINE TYPE OS ADDRESSES

192.168.121.203 cluster Member talos-192-168-121-119 1 talos-192-168-121-119 controlplane Talos (v1.1.0) ["192.168.121.119"]

192.168.121.203 cluster Member talos-192-168-121-69 1 talos-192-168-121-69 worker Talos (v1.1.0) ["192.168.121.69"]

192.168.121.203 cluster Member talos-192-168-121-203 6 talos-192-168-121-203 controlplane Talos (v1.1.0) ["192.168.121.100","192.168.121.203"]

192.168.121.203 cluster Member talos-192-168-121-125 1 talos-192-168-121-125 controlplane Talos (v1.1.0) ["192.168.121.125"]

Interacting with Kubernetes cluster

Retrieve the kubeconfig from the cluster:

talosctl -n 192.168.121.203 kubeconfig ./kubeconfig

List the nodes in the cluster:

kubectl --kubeconfig ./kubeconfig get node -owide

You will see an output similar to:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

talos-192-168-121-203 Ready control-plane,master 3m10s v1.24.2 192.168.121.203 <none> Talos (v1.1.0) 5.15.48-talos containerd://1.6.6

talos-192-168-121-69 Ready <none> 2m25s v1.24.2 192.168.121.69 <none> Talos (v1.1.0) 5.15.48-talos containerd://1.6.6

talos-192-168-121-119 Ready control-plane,master 8m46s v1.24.2 192.168.121.119 <none> Talos (v1.1.0) 5.15.48-talos containerd://1.6.6

talos-192-168-121-125 Ready control-plane,master 3m11s v1.24.2 192.168.121.125 <none> Talos (v1.1.0) 5.15.48-talos containerd://1.6.6

Congratulations, you have a highly-available Talos cluster running!

Cleanup

You can destroy the vagrant environment by running:

vagrant destroy -f

And remove the ISO image you downloaded:

sudo rm -f /tmp/talos-amd64.iso

1.2.5 - VMware

Creating a Cluster via the govc CLI

In this guide we will create an HA Kubernetes cluster with 2 worker nodes.

We will use the govc cli which can be downloaded here.

Prereqs/Assumptions

This guide will use the virtual IP (“VIP”) functionality that is built into Talos in order to provide a stable, known IP for the Kubernetes control plane. This simply means the user should pick an IP on their “VM Network” to designate for this purpose and keep it handy for future steps.

Create the Machine Configuration Files

Generating Base Configurations

Using the VIP chosen in the prereq steps, we will now generate the base configuration files for the Talos machines.

This can be done with the talosctl gen config ... command.

Take note that we will also use a JSON6902 patch when creating the configs so that the control plane nodes get some special information about the VIP we chose earlier, as well as a daemonset to install VMware tools on Talos nodes.

First, download cp.patch.yaml to your local machine and edit the VIP to match your chosen IP.

You can do this by issuing: curl -fsSLO https://raw.githubusercontent.com/siderolabs/talos/master/website/content/v1.4/talos-guides/install/virtualized-platforms/vmware/cp.patch.yaml.

Its contents should look like the following:

- op: add

path: /machine/network

value:

interfaces:

- interface: eth0

dhcp: true

vip:

ip: <VIP>

- op: replace

path: /cluster/extraManifests

value:

- "https://raw.githubusercontent.com/mologie/talos-vmtoolsd/master/deploy/unstable.yaml"

With the patch in hand, generate machine configs with:

$ talosctl gen config vmware-test https://<VIP>:<port> --config-patch-control-plane @cp.patch.yaml

created controlplane.yaml

created worker.yaml

created talosconfig

At this point, you can modify the generated configs to your liking if needed.

Optionally, you can specify additional patches by adding to the cp.patch.yaml file downloaded earlier, or create your own patch files.

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode cloud

controlplane.yaml is valid for cloud mode

$ talosctl validate --config worker.yaml --mode cloud

worker.yaml is valid for cloud mode

Set Environment Variables

govc makes use of the following environment variables

export GOVC_URL=<vCenter url>

export GOVC_USERNAME=<vCenter username>

export GOVC_PASSWORD=<vCenter password>

Note: If your vCenter installation makes use of self-signed certificates, you’ll want to export GOVC_INSECURE=true.

There are some additional variables that you may need to set:

export GOVC_DATACENTER=<vCenter datacenter>

export GOVC_RESOURCE_POOL=<vCenter resource pool>

export GOVC_DATASTORE=<vCenter datastore>

export GOVC_NETWORK=<vCenter network>

Choose Install Approach

As part of this guide, we have a more automated install script that handles some of the complexity of importing OVAs and creating VMs. If you wish to use this script, we will detail that next. If you wish to carry out the manual approach, simply skip ahead to the “Manual Approach” section.

Scripted Install

Download the vmware.sh script to your local machine.

You can do this by issuing curl -fsSLO "https://raw.githubusercontent.com/siderolabs/talos/master/website/content/v1.4/talos-guides/install/virtualized-platforms/vmware/vmware.sh".

This script has default variables for things like Talos version and cluster name that may be interesting to tweak before deploying.

Import OVA

To create a content library and import the Talos OVA corresponding to the mentioned Talos version, simply issue:

./vmware.sh upload_ova

Create Cluster

With the OVA uploaded to the content library, you can create a 5 node (by default) cluster with 3 control plane and 2 worker nodes:

./vmware.sh create

This step will create a VM from the OVA, edit the settings based on the env variables used for VM size/specs, then power on the VMs.

You may now skip past the “Manual Approach” section down to “Bootstrap Cluster”.

Manual Approach

Import the OVA into vCenter

A talos.ova asset is published with each release.

We will refer to the version of the release as $TALOS_VERSION below.

It can be easily exported with export TALOS_VERSION="v0.3.0-alpha.10" or similar.

curl -LO https://github.com/siderolabs/talos/releases/download/$TALOS_VERSION/talos.ova

Create a content library (if needed) with:

govc library.create <library name>

Import the OVA to the library with:

govc library.import -n talos-${TALOS_VERSION} <library name> /path/to/downloaded/talos.ova

Create the Bootstrap Node

We’ll clone the OVA to create the bootstrap node (our first control plane node).

govc library.deploy <library name>/talos-${TALOS_VERSION} control-plane-1

Talos makes use of the guestinfo facility of VMware to provide the machine/cluster configuration.

This can be set using the govc vm.change command.

To facilitate persistent storage using the vSphere cloud provider integration with Kubernetes, disk.enableUUID=1 is used.

govc vm.change \

-e "guestinfo.talos.config=$(cat controlplane.yaml | base64)" \

-e "disk.enableUUID=1" \

-vm control-plane-1
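GNU base64 wraps its output at 76 columns by default, so the command substitution above can yield a multi-line value on Linux. Whether that matters depends on the tooling involved; the sketch below sidesteps the question by producing a single line. encode_config is a hypothetical helper name.

```shell
#!/bin/sh
# Encode a file as base64 on a single line, regardless of the platform's
# default line wrapping. Hypothetical helper, not part of govc or talosctl.
encode_config() {
  base64 < "$1" | tr -d '\n'
}

# Example (needs govc and a vCenter, so it is left commented out):
# govc vm.change \
#   -e "guestinfo.talos.config=$(encode_config controlplane.yaml)" \
#   -e "disk.enableUUID=1" \
#   -vm control-plane-1
```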

Update Hardware Resources for the Bootstrap Node

-c is used to configure the number of CPUs

-m is used to configure the amount of memory (in MB)

govc vm.change \

-c 2 \

-m 4096 \

-vm control-plane-1

The following can be used to adjust the EPHEMERAL disk size.

govc vm.disk.change -vm control-plane-1 -disk.name disk-1000-0 -size 10G

govc vm.power -on control-plane-1

Create the Remaining Control Plane Nodes

govc library.deploy <library name>/talos-${TALOS_VERSION} control-plane-2

govc vm.change \

-e "guestinfo.talos.config=$(base64 controlplane.yaml)" \

-e "disk.enableUUID=1" \

-vm control-plane-2

govc library.deploy <library name>/talos-${TALOS_VERSION} control-plane-3

govc vm.change \

-e "guestinfo.talos.config=$(base64 controlplane.yaml)" \

-e "disk.enableUUID=1" \

-vm control-plane-3

govc vm.change \

-c 2 \

-m 4096 \

-vm control-plane-2

govc vm.change \

-c 2 \

-m 4096 \

-vm control-plane-3

govc vm.disk.change -vm control-plane-2 -disk.name disk-1000-0 -size 10G

govc vm.disk.change -vm control-plane-3 -disk.name disk-1000-0 -size 10G

govc vm.power -on control-plane-2

govc vm.power -on control-plane-3

Update Settings for the Worker Nodes

govc library.deploy <library name>/talos-${TALOS_VERSION} worker-1

govc vm.change \

-e "guestinfo.talos.config=$(base64 worker.yaml)" \

-e "disk.enableUUID=1" \

-vm worker-1

govc library.deploy <library name>/talos-${TALOS_VERSION} worker-2

govc vm.change \

-e "guestinfo.talos.config=$(base64 worker.yaml)" \

-e "disk.enableUUID=1" \

-vm worker-2

govc vm.change \

-c 4 \

-m 8192 \

-vm worker-1

govc vm.change \

-c 4 \

-m 8192 \

-vm worker-2

govc vm.disk.change -vm worker-1 -disk.name disk-1000-0 -size 10G

govc vm.disk.change -vm worker-2 -disk.name disk-1000-0 -size 10G

govc vm.power -on worker-1

govc vm.power -on worker-2
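The per-node commands above follow one pattern, so they can be generated from a short list of names and sizes. The sketch below only prints the commands for review rather than running them; plan_node is a hypothetical helper, and the LIBRARY and TALOS_VERSION values are placeholders to replace with your own.

```shell
#!/bin/sh
# Placeholders (assumptions): substitute your content library name and Talos release.
LIBRARY="my-library"
TALOS_VERSION="v1.4.8"

# Print (not run) the govc commands used in this guide for one node.
# $1 = VM name, $2 = machine config file, $3 = CPUs, $4 = memory in MB
plan_node() {
  echo "govc library.deploy $LIBRARY/talos-$TALOS_VERSION $1"
  echo "govc vm.change -e \"guestinfo.talos.config=\$(base64 $2)\" -e \"disk.enableUUID=1\" -vm $1"
  echo "govc vm.change -c $3 -m $4 -vm $1"
  echo "govc vm.disk.change -vm $1 -disk.name disk-1000-0 -size 10G"
  echo "govc vm.power -on $1"
}

for n in 2 3; do plan_node "control-plane-$n" controlplane.yaml 2 4096; done
for n in 1 2; do plan_node "worker-$n" worker.yaml 4 8192; done
```

Printing first makes it easy to inspect the plan; piping the output to sh would execute it.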

Bootstrap Cluster

In the vSphere UI, open a console to one of the control plane nodes. You should see some output stating that etcd should be bootstrapped. This text should look like:

"etcd is waiting to join the cluster, if this node is the first node in the cluster, please run `talosctl bootstrap` against one of the following IPs:

Take note of the IP mentioned here and issue:

talosctl --talosconfig talosconfig bootstrap -e <control plane IP> -n <control plane IP>

Keep this IP handy for the following steps as well.

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig config endpoint <control plane IP>

talosctl --talosconfig talosconfig config node <control plane IP>

talosctl --talosconfig talosconfig kubeconfig .

Configure talos-vmtoolsd

The talos-vmtoolsd application was deployed as a daemonset as part of the cluster creation; however, we must now provide a talos credentials file for it to use.

Create a new talosconfig with:

talosctl --talosconfig talosconfig -n <control plane IP> config new vmtoolsd-secret.yaml --roles os:admin

Create a secret from the talosconfig:

kubectl -n kube-system create secret generic talos-vmtoolsd-config \

--from-file=talosconfig=./vmtoolsd-secret.yaml

Clean up the generated file from local system:

rm vmtoolsd-secret.yaml

Once configured, you should see these daemonset pods go into the “Running” state, and vCenter will show IPs and other information from the Talos nodes in its UI.

1.2.6 - Xen

Talos is known to work on Xen. We don’t yet have a documented guide specific to Xen; however, you can follow the General Getting Started Guide. If you run into any issues, our community can probably help!

1.3 - Cloud Platforms

1.3.1 - AWS

Creating a Cluster via the AWS CLI

In this guide we will create an HA Kubernetes cluster with 3 worker nodes. We assume an existing VPC, and some familiarity with AWS. If you need more information on AWS specifics, please see the official AWS documentation.

Set the needed info

Change to your desired region:

REGION="us-west-2"

aws ec2 describe-vpcs --region $REGION

VPC="(the VpcId from the above command)"

Create the Subnet

Use a CIDR block that is present on the VPC specified above.

aws ec2 create-subnet \

--region $REGION \

--vpc-id $VPC \

--cidr-block ${CIDR_BLOCK}

Note the subnet ID that was returned, and assign it to a variable for ease of later use:

SUBNET="(the subnet ID of the created subnet)"
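Instead of copying the ID out of the JSON response by hand, the AWS CLI's global --query and --output options can return just the field of interest. A minimal sketch, assuming REGION, VPC and CIDR_BLOCK are already set as above; create_subnet_id is a hypothetical wrapper name.

```shell
#!/bin/sh
# Create the subnet and print only its ID.
# --query takes a JMESPath expression; the create-subnet response nests the
# subnet under a top-level "Subnet" key.
create_subnet_id() {
  aws ec2 create-subnet \
    --region "$REGION" \
    --vpc-id "$VPC" \
    --cidr-block "$CIDR_BLOCK" \
    --query 'Subnet.SubnetId' \
    --output text
}

# Usage (calls AWS, so it is left commented out):
# SUBNET=$(create_subnet_id)
```

The same pattern applies to the other IDs and ARNs this guide asks you to note.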

Official AMI Images

Official AMI image ID can be found in the cloud-images.json file attached to the Talos release:

AMI=`curl -sL https://github.com/siderolabs/talos/releases/download/v1.4.8/cloud-images.json | \

jq -r '.[] | select(.region == "'$REGION'") | select (.arch == "amd64") | .id'`

echo $AMI

Replace amd64 in the line above with the desired architecture.

Note that the returned AMI ID is assigned to the AMI variable; it will be used later when booting instances.

If using the official AMIs, you can skip to “Create a Security Group”.

Create your own AMIs

The use of the official Talos AMIs is recommended, but if you wish to build your own AMIs, follow the procedure below.

Create the S3 Bucket

aws s3api create-bucket \

--bucket $BUCKET \

--create-bucket-configuration LocationConstraint=$REGION \

--acl private

Create the vmimport Role

In order to create an AMI, ensure that the vmimport role exists as described in the official AWS documentation.

Note that the role should be associated with the S3 bucket we created above.

Create the Image Snapshot

First, download the AWS image from a Talos release:

curl -L https://github.com/siderolabs/talos/releases/download/v1.4.8/aws-amd64.tar.gz | tar -xv

Copy the RAW disk to S3 and import it as a snapshot:

aws s3 cp disk.raw s3://$BUCKET/talos-aws-tutorial.raw

aws ec2 import-snapshot \

--region $REGION \

--description "Talos kubernetes tutorial" \

--disk-container "Format=raw,UserBucket={S3Bucket=$BUCKET,S3Key=talos-aws-tutorial.raw}"

Save the SnapshotId, as we will need it once the import is done.

To check on the status of the import, run:

aws ec2 describe-import-snapshot-tasks \

--region $REGION \

--import-task-ids

Once the SnapshotTaskDetail.Status indicates completed, we can register the image.
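Checking the import status can be wrapped in a polling loop. This is a sketch under stated assumptions: wait_for_snapshot is a hypothetical helper, the import task ID is whatever import-snapshot returned, and the interval and attempt defaults are arbitrary.

```shell
#!/bin/sh
# Poll an import-snapshot task until its status is "completed".
# $1 = import task ID, $2 = seconds between polls (default 15),
# $3 = maximum number of polls (default 80). Exits non-zero on timeout.
wait_for_snapshot() (
  task=$1
  interval=${2:-15}
  attempts=${3:-80}
  while [ "$attempts" -gt 0 ]; do
    status=$(aws ec2 describe-import-snapshot-tasks \
      --region "$REGION" \
      --import-task-ids "$task" \
      --query 'ImportSnapshotTasks[0].SnapshotTaskDetail.Status' \
      --output text)
    [ "$status" = "completed" ] && exit 0
    attempts=$((attempts - 1))
    sleep "$interval"
  done
  exit 1
)

# Usage (calls AWS, so it is left commented out):
# wait_for_snapshot "<import task ID>" && echo "snapshot ready"
```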

Register the Image

aws ec2 register-image \

--region $REGION \

--block-device-mappings "DeviceName=/dev/xvda,VirtualName=talos,Ebs={DeleteOnTermination=true,SnapshotId=$SNAPSHOT,VolumeSize=4,VolumeType=gp2}" \

--root-device-name /dev/xvda \

--virtualization-type hvm \

--architecture x86_64 \

--ena-support \

--name talos-aws-tutorial-ami

We now have an AMI we can use to create our cluster. Save the AMI ID, as we will need it when we create EC2 instances.

AMI="(AMI ID of the register image command)"

Create a Security Group

aws ec2 create-security-group \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--description "Security Group for EC2 instances to allow ports required by Talos"

SECURITY_GROUP="(security group id that is returned)"

Using the security group from above, allow all internal traffic within the same security group:

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol all \

--port 0 \

--source-group talos-aws-tutorial-sg

and expose the Talos and Kubernetes APIs:

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol tcp \

--port 6443 \

--cidr 0.0.0.0/0

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol tcp \

--port 50000-50001 \

--cidr 0.0.0.0/0

Create a Load Balancer

aws elbv2 create-load-balancer \

--region $REGION \

--name talos-aws-tutorial-lb \

--type network --subnets $SUBNET

Take note of the DNS name and ARN. We will need these soon.

LOAD_BALANCER_ARN="(arn of the load balancer)"

aws elbv2 create-target-group \

--region $REGION \

--name talos-aws-tutorial-tg \

--protocol TCP \

--port 6443 \

--target-type ip \

--vpc-id $VPC

Also note the TargetGroupArn that is returned.

TARGET_GROUP_ARN="(target group arn)"

Create the Machine Configuration Files

Using the DNS name of the loadbalancer created earlier, generate the base configuration files for the Talos machines.

Note that the port used here is the externally accessible port configured on the load balancer - 443 - not the internal port of 6443:

$ talosctl gen config talos-k8s-aws-tutorial https://<load balancer DNS>:<port> --with-examples=false --with-docs=false

created controlplane.yaml

created worker.yaml

created talosconfig

Note that the generated configs are too long for the AWS userdata field unless the --with-examples and --with-docs flags are set to false, as in the command above.
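EC2 limits user data to 16 KB in raw form, so the size of the generated files can be checked before launching instances. A minimal sketch; fits_userdata is a hypothetical helper name.

```shell
#!/bin/sh
# Succeeds if the file is no larger than 16384 bytes (the EC2 user data limit,
# measured before base64 encoding).
fits_userdata() {
  size=$(($(wc -c < "$1")))
  [ "$size" -le 16384 ]
}

# Usage, once controlplane.yaml and worker.yaml exist (commented out here):
# for f in controlplane.yaml worker.yaml; do
#   fits_userdata "$f" || echo "$f is too large for EC2 user data" >&2
# done
```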

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode cloud

controlplane.yaml is valid for cloud mode

$ talosctl validate --config worker.yaml --mode cloud

worker.yaml is valid for cloud mode

Create the EC2 Instances

Change the instance type if desired. Note: There is a known issue that prevents Talos from running on T2 instance types. Please use T3 if you need burstable instance types.

Create the Control Plane Nodes

CP_COUNT=1

while [[ "$CP_COUNT" -lt 4 ]]; do

aws ec2 run-instances \

--region $REGION \

--image-id $AMI \

--count 1 \

--instance-type t3.small \

--user-data file://controlplane.yaml \

--subnet-id $SUBNET \

--security-group-ids $SECURITY_GROUP \

--associate-public-ip-address \

--tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=talos-aws-tutorial-cp-$CP_COUNT}]"

((CP_COUNT++))

done

Make a note of the resulting PrivateIpAddress from the controlplane nodes for later use.

Create the Worker Nodes

aws ec2 run-instances \

--region $REGION \

--image-id $AMI \

--count 3 \

--instance-type t3.small \

--user-data file://worker.yaml \

--subnet-id $SUBNET \

--security-group-ids $SECURITY_GROUP \

--tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=talos-aws-tutorial-worker}]"

Configure the Load Balancer

Now, using the load balancer target group’s ARN and the PrivateIpAddress of each controlplane instance that you created, register the targets:

aws elbv2 register-targets \

--region $REGION \

--target-group-arn $TARGET_GROUP_ARN \

--targets Id=$CP_NODE_1_IP Id=$CP_NODE_2_IP Id=$CP_NODE_3_IP
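The Id=... arguments can be built from a list of addresses rather than typed out one by one. A sketch, assuming the control plane instances carry the Name tags set above; targets_from_ips is a hypothetical helper name.

```shell
#!/bin/sh
# Turn a list of private IPs into the space-separated Id=<ip> arguments
# that `aws elbv2 register-targets --targets` expects.
targets_from_ips() (
  sep=""
  for ip in "$@"; do
    printf '%sId=%s' "$sep" "$ip"
    sep=" "
  done
  printf '\n'
)

# Usage (calls AWS, so it is left commented out). The unquoted expansions are
# deliberate so that each address and each Id=... becomes its own argument:
# CP_IPS=$(aws ec2 describe-instances \
#   --region "$REGION" \
#   --filters "Name=tag:Name,Values=talos-aws-tutorial-cp-*" "Name=instance-state-name,Values=running" \
#   --query 'Reservations[].Instances[].PrivateIpAddress' \
#   --output text)
# aws elbv2 register-targets \
#   --region "$REGION" \
#   --target-group-arn "$TARGET_GROUP_ARN" \
#   --targets $(targets_from_ips $CP_IPS)
```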

Using the ARNs of the load balancer and target group from previous steps, create the listener:

aws elbv2 create-listener \

--region $REGION \

--load-balancer-arn $LOAD_BALANCER_ARN \

--protocol TCP \

--port 443 \

--default-actions Type=forward,TargetGroupArn=$TARGET_GROUP_ARN

Bootstrap Etcd

Set the endpoints (the control plane node to which talosctl commands are sent) and nodes (the nodes that the command operates on):

talosctl --talosconfig talosconfig config endpoint <control plane 1 PUBLIC IP>

talosctl --talosconfig talosconfig config node <control plane 1 PUBLIC IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

The control plane nodes should send and receive traffic via the load balancer. Note that one of the control plane nodes has initiated the etcd cluster, and the others should join it. You can now watch your cluster bootstrap by running:

talosctl --talosconfig talosconfig health

You can also watch the performance of a node, via:

talosctl --talosconfig talosconfig dashboard

And use standard kubectl commands.

1.3.2 - Azure

Creating a Cluster via the CLI

In this guide we will create an HA Kubernetes cluster with 1 worker node. We assume existing Blob Storage, and some familiarity with Azure. If you need more information on Azure specifics, please see the official Azure documentation.

Environment Setup

We’ll make use of the following environment variables throughout the setup. Edit the variables below with your correct information.

# Storage account to use

export STORAGE_ACCOUNT="StorageAccountName"

# Storage container to upload to

export STORAGE_CONTAINER="StorageContainerName"

# Resource group name

export GROUP="ResourceGroupName"

# Location

export LOCATION="centralus"

# Get storage account connection string based on info above

export CONNECTION=$(az storage account show-connection-string \

-n $STORAGE_ACCOUNT \

-g $GROUP \

-o tsv)

Create the Image

First, download the Azure image from a Talos release.

Once downloaded, untar with tar -xvf /path/to/azure-amd64.tar.gz

Upload the VHD

Once you have pulled down the image, you can upload it to blob storage with:

az storage blob upload \

--connection-string $CONNECTION \

--container-name $STORAGE_CONTAINER \

-f /path/to/extracted/talos-azure.vhd \

-n talos-azure.vhd

Register the Image

Now that the image is present in our blob storage, we’ll register it.

az image create \

--name talos \

--source https://$STORAGE_ACCOUNT.blob.core.windows.net/$STORAGE_CONTAINER/talos-azure.vhd \

--os-type linux \

-g $GROUP

Network Infrastructure

Virtual Networks and Security Groups

Once the image is prepared, we’ll want to work through setting up the network. Issue the following to create a network security group and add rules to it.

# Create vnet

az network vnet create \

--resource-group $GROUP \

--location $LOCATION \

--name talos-vnet \

--subnet-name talos-subnet

# Create network security group

az network nsg create -g $GROUP -n talos-sg

# Client -> apid

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n apid \

--priority 1001 \

--destination-port-ranges 50000 \

--direction inbound

# Trustd

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n trustd \

--priority 1002 \

--destination-port-ranges 50001 \

--direction inbound

# etcd

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n etcd \

--priority 1003 \

--destination-port-ranges 2379-2380 \

--direction inbound

# Kubernetes API Server

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n kube \

--priority 1004 \

--destination-port-ranges 6443 \

--direction inbound
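The four rules above differ only in name, priority, and port range, so they can be expressed as a small table. The sketch below prints the equivalent commands for review rather than running them; plan_nsg_rules is a hypothetical helper name.

```shell
#!/bin/sh
# Print (not run) one `az network nsg rule create` command per table row.
# Columns: rule name, priority, destination port range (values from this guide).
plan_nsg_rules() {
  while read -r name priority ports; do
    echo "az network nsg rule create -g \$GROUP --nsg-name talos-sg -n $name --priority $priority --destination-port-ranges $ports --direction inbound"
  done <<EOF
apid 1001 50000
trustd 1002 50001
etcd 1003 2379-2380
kube 1004 6443
EOF
}

plan_nsg_rules
```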

Load Balancer

We will create a public ip, load balancer, and a health check that we will use for our control plane.

# Create public ip

az network public-ip create \

--resource-group $GROUP \

--name talos-public-ip \

--allocation-method static

# Create lb

az network lb create \

--resource-group $GROUP \

--name talos-lb \

--public-ip-address talos-public-ip \

--frontend-ip-name talos-fe \

--backend-pool-name talos-be-pool

# Create health check

az network lb probe create \

--resource-group $GROUP \

--lb-name talos-lb \

--name talos-lb-health \

--protocol tcp \

--port 6443

# Create lb rule for 6443

az network lb rule create \

--resource-group $GROUP \

--lb-name talos-lb \

--name talos-6443 \

--protocol tcp \

--frontend-ip-name talos-fe \

--frontend-port 6443 \

--backend-pool-name talos-be-pool \

--backend-port 6443 \

--probe-name talos-lb-health

Network Interfaces

In Azure, we have to pre-create the NICs for our control plane so that they can be associated with our load balancer.

for i in $( seq 0 1 2 ); do

# Create public IP for each nic

az network public-ip create \

--resource-group $GROUP \

--name talos-controlplane-public-ip-$i \

--allocation-method static

# Create nic

az network nic create \

--resource-group $GROUP \

--name talos-controlplane-nic-$i \

--vnet-name talos-vnet \

--subnet talos-subnet \

--network-security-group talos-sg \

--public-ip-address talos-controlplane-public-ip-$i \

--lb-name talos-lb \

--lb-address-pools talos-be-pool

done

# NOTES:

# Talos can detect PublicIPs automatically if PublicIP SKU is Basic.

# Use `--sku Basic` to set SKU to Basic.

Cluster Configuration

With our networking bits set up, we’ll fetch the IP for our load balancer and create our configuration files.

LB_PUBLIC_IP=$(az network public-ip show \

--resource-group $GROUP \

--name talos-public-ip \

--query "[ipAddress]" \

--output tsv)

talosctl gen config talos-k8s-azure-tutorial https://${LB_PUBLIC_IP}:6443

Compute Creation

We are now ready to create our Azure nodes.

Azure allows you to pass Talos machine configuration to the virtual machine at bootstrap time via user-data or custom-data methods.

Talos supports only the custom-data method, and the machine configuration is available to the VM only on the first boot.

# Create availability set

az vm availability-set create \

--name talos-controlplane-av-set \

-g $GROUP

# Create the controlplane nodes

for i in $( seq 0 1 2 ); do

az vm create \

--name talos-controlplane-$i \

--image talos \

--custom-data ./controlplane.yaml \

-g $GROUP \

--admin-username talos \

--generate-ssh-keys \

--verbose \

--boot-diagnostics-storage $STORAGE_ACCOUNT \

--os-disk-size-gb 20 \

--nics talos-controlplane-nic-$i \

--availability-set talos-controlplane-av-set \

--no-wait

done

# Create worker node

az vm create \

--name talos-worker-0 \

--image talos \

--vnet-name talos-vnet \

--subnet talos-subnet \

--custom-data ./worker.yaml \

-g $GROUP \

--admin-username talos \

--generate-ssh-keys \

--verbose \

--boot-diagnostics-storage $STORAGE_ACCOUNT \

--nsg talos-sg \

--os-disk-size-gb 20 \

--no-wait

# NOTES:

# `--admin-username` and `--generate-ssh-keys` are required by the az cli,

# but are not actually used by talos

# `--os-disk-size-gb` is the backing disk for Kubernetes and any workload containers

# `--boot-diagnostics-storage` is to enable console output which may be necessary

# for troubleshooting

Bootstrap Etcd

You should now be able to interact with your cluster with talosctl.

First, we need to discover the public IP of our first control plane node.

CONTROL_PLANE_0_IP=$(az network public-ip show \

--resource-group $GROUP \

--name talos-controlplane-public-ip-0 \

--query "[ipAddress]" \

--output tsv)
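This guide looks up public IPs more than once, so the call can be wrapped in a function. A minimal sketch, assuming GROUP is set as in the environment setup; public_ip_of is a hypothetical helper name, and it queries the scalar ipAddress field directly.

```shell
#!/bin/sh
# Print the address of a named public IP resource in resource group $GROUP.
# --query takes a JMESPath expression; with --output tsv a scalar result is
# printed as a bare value.
public_ip_of() {
  az network public-ip show \
    --resource-group "$GROUP" \
    --name "$1" \
    --query ipAddress \
    --output tsv
}

# Usage (calls Azure, so it is left commented out):
# LB_PUBLIC_IP=$(public_ip_of talos-public-ip)
# CONTROL_PLANE_0_IP=$(public_ip_of talos-controlplane-public-ip-0)
```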

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint $CONTROL_PLANE_0_IP

talosctl --talosconfig talosconfig config node $CONTROL_PLANE_0_IP

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

1.3.3 - DigitalOcean

Creating a Talos Linux Cluster on DigitalOcean via the CLI

In this guide we will create an HA Kubernetes cluster with 1 worker node, in the NYC region. We assume an existing Space, and some familiarity with DigitalOcean. If you need more information on DigitalOcean specifics, please see the official DigitalOcean documentation.

Create the Image

Download the DigitalOcean image digital-ocean-amd64.raw.gz from the latest Talos release.

Note: the minimum version of Talos required to support DigitalOcean is v1.3.3.

Using an upload method of your choice (doctl does not have Spaces support), upload the image to a space.

(It’s easy to drag the image file to the space using DigitalOcean’s web console.)

Note: Make sure you upload the file as public.

Now, create an image using the URL of the uploaded image:

export REGION=nyc3

doctl compute image create \

--region $REGION \

--image-description talos-digital-ocean-tutorial \

--image-url https://$SPACENAME.$REGION.digitaloceanspaces.com/digital-ocean-amd64.raw.gz \

Talos

Save the image ID. We will need it when creating droplets.

Create a Load Balancer

doctl compute load-balancer create \

--region $REGION \

--name talos-digital-ocean-tutorial-lb \

--tag-name talos-digital-ocean-tutorial-control-plane \

--health-check protocol:tcp,port:6443,check_interval_seconds:10,response_timeout_seconds:5,healthy_threshold:5,unhealthy_threshold:3 \

--forwarding-rules entry_protocol:tcp,entry_port:443,target_protocol:tcp,target_port:6443

Note the returned ID of the load balancer.

We will need the IP of the load balancer. Using the ID of the load balancer, run:

doctl compute load-balancer get --format IP <load balancer ID>

Note that it may take a few minutes before the load balancer is provisioned, so repeat this command until it returns with the IP address.
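Repeating the command by hand can be replaced with a small polling loop. This is a sketch under assumptions: wait_for_lb_ip is a hypothetical helper, doctl's --no-header option is used to suppress the column header, and the loop assumes the IP column is empty until provisioning finishes.

```shell
#!/bin/sh
# Poll the load balancer until it reports an IP, then print that IP.
# $1 = load balancer ID, $2 = seconds between polls (default 10),
# $3 = maximum number of polls (default 30). Exits non-zero on timeout.
wait_for_lb_ip() (
  lb_id=$1
  interval=${2:-10}
  attempts=${3:-30}
  while [ "$attempts" -gt 0 ]; do
    ip=$(doctl compute load-balancer get --format IP --no-header "$lb_id")
    if [ -n "$ip" ]; then
      echo "$ip"
      exit 0
    fi
    attempts=$((attempts - 1))
    sleep "$interval"
  done
  exit 1
)

# Usage (calls DigitalOcean, so it is left commented out):
# LB_IP=$(wait_for_lb_ip "<load balancer ID>")
```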

Create the Machine Configuration Files

Using the IP address (or DNS name, if you have created one) of the loadbalancer, generate the base configuration files for the Talos machines. Also note that the load balancer forwards port 443 to port 6443 on the associated nodes, so we should use 443 as the port in the config definition:

$ talosctl gen config talos-k8s-digital-ocean-tutorial https://<load balancer IP or DNS>:443

created controlplane.yaml

created worker.yaml

created talosconfig
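Before passing the generated files to droplets as user data, it can help to validate them. A minimal sketch, assuming talosctl is on the PATH and that the cloud validation mode is the right one for DigitalOcean droplets; the function name validate_machine_configs is hypothetical:

```shell
# Validate each generated machine config and stop at the first failure.
# usage: validate_machine_configs <file> [file...]
validate_machine_configs() {
  local f
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "missing config: $f" >&2
      return 1
    fi
    talosctl validate --config "$f" --mode cloud || return 1
  done
}
```

Run it as `validate_machine_configs controlplane.yaml worker.yaml` from the directory that holds the generated files.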

Create the Droplets

Create a dummy SSH key

Although SSH is not used by Talos, DigitalOcean requires that an SSH key be associated with a droplet during creation. We will create a dummy key that can be used to satisfy this requirement.

doctl compute ssh-key create --public-key "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDbl0I1s/yOETIKjFr7mDLp8LmJn6OIZ68ILjVCkoN6lzKmvZEqEm1YYeWoI0xgb80hQ1fKkl0usW6MkSqwrijoUENhGFd6L16WFL53va4aeJjj2pxrjOr3uBFm/4ATvIfFTNVs+VUzFZ0eGzTgu1yXydX8lZMWnT4JpsMraHD3/qPP+pgyNuI51LjOCG0gVCzjl8NoGaQuKnl8KqbSCARIpETg1mMw+tuYgaKcbqYCMbxggaEKA0ixJ2MpFC/kwm3PcksTGqVBzp3+iE5AlRe1tnbr6GhgT839KLhOB03j7lFl1K9j1bMTOEj5Io8z7xo/XeF2ZQKHFWygAJiAhmKJ dummy@dummy.local" dummy

Note the SSH key ID that is returned; we will use it when creating the droplets.

Create the Control Plane Nodes

Run the following commands to create three control plane nodes:

doctl compute droplet create \
    --region $REGION \
    --image <image ID> \
    --size s-2vcpu-4gb \
    --enable-private-networking \
    --tag-names talos-digital-ocean-tutorial-control-plane \
    --user-data-file controlplane.yaml \
    --ssh-keys <ssh key ID> \
    talos-control-plane-1

doctl compute droplet create \
    --region $REGION \
    --image <image ID> \
    --size s-2vcpu-4gb \
    --enable-private-networking \
    --tag-names talos-digital-ocean-tutorial-control-plane \
    --user-data-file controlplane.yaml \
    --ssh-keys <ssh key ID> \
    talos-control-plane-2

doctl compute droplet create \
    --region $REGION \
    --image <image ID> \
    --size s-2vcpu-4gb \
    --enable-private-networking \
    --tag-names talos-digital-ocean-tutorial-control-plane \
    --user-data-file controlplane.yaml \
    --ssh-keys <ssh key ID> \
    talos-control-plane-3

Note the droplet ID returned for the first control plane node.
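The three control plane commands differ only in the droplet name, so they can be written as a loop. This is a sketch that reuses the flags from this guide; the function name create_control_planes and its arguments are hypothetical, and it assumes controlplane.yaml is in the current directory.

```shell
# Create control plane droplets that differ only in their name.
# usage: create_control_planes <image ID> <ssh key ID> [count]
create_control_planes() {
  local image_id=$1 ssh_key_id=$2 count=${3:-3} i=1
  while [ "$i" -le "$count" ]; do
    doctl compute droplet create \
      --region "${REGION:-nyc3}" \
      --image "$image_id" \
      --size s-2vcpu-4gb \
      --enable-private-networking \
      --tag-names talos-digital-ocean-tutorial-control-plane \
      --user-data-file controlplane.yaml \
      --ssh-keys "$ssh_key_id" \
      "talos-control-plane-$i" || return 1
    i=$((i + 1))
  done
}
```

Calling `create_control_planes <image ID> <ssh key ID>` creates talos-control-plane-1 through talos-control-plane-3. The shared tag is what attaches the droplets to the load balancer created earlier.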

Create the Worker Nodes

Run the following to create a worker node:

doctl compute droplet create \
    --region $REGION \
    --image <image ID> \
    --size s-2vcpu-4gb \
    --enable-private-networking \
    --user-data-file worker.yaml \
    --ssh-keys <ssh key ID> \
    talos-worker-1

Bootstrap Etcd

To configure talosctl we will need the first control plane node’s IP:

doctl compute droplet get --format PublicIPv4 <droplet ID>

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap
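The bootstrap command should be issued only once, against a single control plane node. The three steps can be grouped into one function so they always run in order and stop on the first failure. This is a sketch under the assumption that talosconfig sits in the current directory; bootstrap_cluster is a hypothetical name.

```shell
# Point talosctl at the first control plane node, then bootstrap etcd.
# usage: bootstrap_cluster <control plane 1 IP> [talosconfig path]
bootstrap_cluster() {
  local ip=$1 cfg=${2:-talosconfig}
  talosctl --talosconfig "$cfg" config endpoint "$ip" &&
    talosctl --talosconfig "$cfg" config node "$ip" &&
    talosctl --talosconfig "$cfg" bootstrap
}
```

Usage: `bootstrap_cluster <control plane 1 IP>`.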

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

We can also watch the cluster bootstrap via:

talosctl --talosconfig talosconfig health

1.3.4 - Exoscale

Talos is known to work on exoscale.com; however, it is currently undocumented.

1.3.5 - GCP

Creating a Cluster via the CLI

In this guide, we will create an HA Kubernetes cluster in GCP with 1 worker node. We will assume an existing Cloud Storage bucket, and some familiarity with Google Cloud. If you need more information on Google Cloud specifics, please see the official Google documentation.

jq and talosctl also need to be installed.

Manual Setup

Environment Setup

We’ll make use of the following environment variables throughout the setup. Edit the variables below with your correct information.

# Storage bucket to use

export STORAGE_BUCKET="StorageBucketName"

# Region

export REGION="us-central1"

Create the Image

First, download the Google Cloud image from a Talos release.

These images are called gcp-$ARCH.tar.gz.
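As an illustration of the naming scheme, the helper below builds a download URL for a given release and architecture. It is a sketch: it assumes release assets are published on GitHub under siderolabs/talos, and that both amd64 and arm64 images exist for the release you choose. The function name talos_gcp_image_url is hypothetical.

```shell
# Print the assumed download URL of the GCP image for a release and architecture.
# usage: talos_gcp_image_url <version> [arch]   (arch defaults to amd64)
talos_gcp_image_url() {
  local version=$1 arch=${2:-amd64}
  case "$arch" in
    amd64 | arm64) ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
  printf 'https://github.com/siderolabs/talos/releases/download/%s/gcp-%s.tar.gz\n' "$version" "$arch"
}
```

The output can be passed to a download tool of your choice, for example `curl -LO "$(talos_gcp_image_url <version>)"`.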

Upload the Image

Once you have downloaded the image, you can upload it to your storage bucket with:

gsutil cp /path/to/gcp-amd64.tar.gz gs://$STORAGE_BUCKET

Register the image

Now that the image is present in our bucket, we’ll register it.

gcloud compute images create talos \
  --source-uri=gs://$STORAGE_BUCKET/gcp-amd64.tar.gz \
  --guest-os-features=VIRTIO_SCSI_MULTIQUEUE

Network Infrastructure

Load Balancers and Firewalls

Once the image is prepared, we’ll want to work through setting up the network. Issue the following to create a firewall, load balancer, and their required components.

130.211.0.0/22 and 35.191.0.0/16 are the GCP load balancer IP ranges. The health-check firewall rule below must allow them.

# Create Instance Group
gcloud compute instance-groups unmanaged create talos-ig \
  --zone $REGION-b

# Create port for IG
gcloud compute instance-groups set-named-ports talos-ig \
  --named-ports tcp6443:6443 \
  --zone $REGION-b

# Create health check
gcloud compute health-checks create tcp talos-health-check --port 6443

# Create backend
gcloud compute backend-services create talos-be \
  --global \
  --protocol TCP \
  --health-checks talos-health-check \
  --timeout 5m \
  --port-name tcp6443

# Add instance group to backend
gcloud compute backend-services add-backend talos-be \
  --global \
  --instance-group talos-ig \
  --instance-group-zone $REGION-b

# Create tcp proxy
gcloud compute target-tcp-proxies create talos-tcp-proxy \
  --backend-service talos-be \
  --proxy-header NONE

# Create LB IP
gcloud compute addresses create talos-lb-ip --global

# Forward 443 from LB IP to tcp proxy
gcloud compute forwarding-rules create talos-fwd-rule \
  --global \
  --ports 443 \
  --address talos-lb-ip \
  --target-tcp-proxy talos-tcp-proxy

# Create firewall rule for health checks
gcloud compute firewall-rules create talos-controlplane-firewall \
  --source-ranges 130.211.0.0/22,35.191.0.0/16 \
  --target-tags talos-controlplane \
  --allow tcp:6443

# Create firewall rule to allow talosctl access
gcloud compute firewall-rules create talos-controlplane-talosctl \
  --source-ranges 0.0.0.0/0 \
  --target-tags talos-controlplane \
  --allow tcp:50000

Cluster Configuration

With our networking bits set up, we’ll fetch the IP for our load balancer and create our configuration files.

LB_PUBLIC_IP=$(gcloud compute forwarding-rules describe talos-fwd-rule \
  --global \
  --format json \
  | jq -r .IPAddress)

talosctl gen config talos-k8s-gcp-tutorial https://${LB_PUBLIC_IP}:443

Additionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.
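As a sketch of what such a patch might look like, the helper below writes a one-operation RFC 6902 document to a file. The patched field, /cluster/allowSchedulingOnControlPlanes, is only an illustration and is an assumption about your Talos version's configuration schema; the function name write_config_patch is hypothetical.

```shell
# Write an example RFC 6902 JSON patch to the given file.
# The field being patched is illustrative; choose fields that suit your cluster.
write_config_patch() {
  cat > "$1" <<'EOF'
[
  {
    "op": "add",
    "path": "/cluster/allowSchedulingOnControlPlanes",
    "value": true
  }
]
EOF
}
```

After `write_config_patch patch.json`, the patch could be supplied as `talosctl gen config talos-k8s-gcp-tutorial https://${LB_PUBLIC_IP}:443 --config-patch "$(cat patch.json)"`.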

Compute Creation

We are now ready to create our GCP nodes.

# Create the control plane nodes.
# Nodes are numbered 0-2 to match the names used in the bootstrap and cleanup steps.
for i in $( seq 0 2 ); do
  gcloud compute instances create talos-controlplane-$i \
    --image talos \
    --zone $REGION-b \
    --tags talos-controlplane,talos-controlplane-$i \
    --boot-disk-size 20GB \
    --metadata-from-file=user-data=./controlplane.yaml
done

# Add control plane nodes to instance group
for i in $( seq 0 2 ); do
  gcloud compute instance-groups unmanaged add-instances talos-ig \
    --zone $REGION-b \
    --instances talos-controlplane-$i
done

# Create worker
gcloud compute instances create talos-worker-0 \
  --image talos \
  --zone $REGION-b \
  --boot-disk-size 20GB \
  --metadata-from-file=user-data=./worker.yaml \
  --tags talos-worker-0

Bootstrap Etcd

You should now be able to interact with your cluster with talosctl.

First, we need to discover the public IP of our first control plane node.

CONTROL_PLANE_0_IP=$(gcloud compute instances describe talos-controlplane-0 \
  --zone $REGION-b \
  --format json \
  | jq -r '.networkInterfaces[0].accessConfigs[0].natIP')

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint $CONTROL_PLANE_0_IP

talosctl --talosconfig talosconfig config node $CONTROL_PLANE_0_IP

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

Cleanup

# cleanup VMs
gcloud compute instances delete \
  talos-worker-0 \
  talos-controlplane-0 \
  talos-controlplane-1 \
  talos-controlplane-2 \
  --zone $REGION-b

# cleanup firewall rules
gcloud compute firewall-rules delete \
  talos-controlplane-talosctl \
  talos-controlplane-firewall

# cleanup forwarding rules
gcloud compute forwarding-rules delete \
  talos-fwd-rule \
  --global

# cleanup addresses
gcloud compute addresses delete \
  talos-lb-ip \
  --global

# cleanup proxies
gcloud compute target-tcp-proxies delete \
  talos-tcp-proxy

# cleanup backend services
gcloud compute backend-services delete \
  talos-be \
  --global

# cleanup health checks
gcloud compute health-checks delete \
  talos-health-check
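The cleanup commands above do not remove the unmanaged instance group or the registered image. A minimal sketch for removing them as well, assuming REGION is still exported and the resource names match those created earlier in this guide; cleanup_remaining is a hypothetical name, and --quiet skips the confirmation prompts:

```shell
# Delete the instance group and image left behind by the other cleanup steps.
# Run this after the backend service has been deleted, since the backend
# service references the instance group.
cleanup_remaining() {
  gcloud compute instance-groups unmanaged delete talos-ig \
    --zone "$REGION-b" --quiet
  gcloud compute images delete talos --quiet
}
```

Usage: `cleanup_remaining`.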