Learn More

- 1: Philosophy

- 2: Concepts

- 3: Architecture

- 4: Components

- 5: Upgrades

- 6: FAQs

- 7: talosctl

1 - Philosophy

Distributed

Talos is intended to be operated in a distributed manner.

That is, it is built for a high-availability dataplane first.

Its etcd cluster is built in an ad-hoc manner, with each appointed node joining on its own directive (with proper security validations enforced, of course).

Like Kubernetes itself, workloads are intended to be distributed across any number of compute nodes.

There should be no single points of failure, and the level of required coordination is as low as each platform allows.

Immutable

Talos takes immutability very seriously. Talos itself, even when installed on a disk, always runs from a SquashFS image, meaning that even if a directory is mounted to be writable, the image itself is never modified. All images are signed and delivered as single, versioned files. We can always run integrity checks on our image to verify that it has not been modified.

While Talos does allow a few, highly-controlled write points to the filesystem, we strive to make them as non-unique and non-critical as possible. In fact, we call the writable partition the “ephemeral” partition precisely because we want to make sure none of us ever uses it for unique, non-replicated, non-recreatable data. Thus, if all else fails, we can always wipe the disk and get back up and running.

Minimal

We are always trying to reduce Talos’ footprint and keep it small. Because nearly the entire OS is built from scratch in Go, we are already starting out in a good position. We have no shell. We have no SSH. We have none of the GNU utilities, not even a rollup tool such as busybox. Everything included in Talos is there because it is necessary, and nothing is included that isn’t.

As a result, the OS right now produces a SquashFS image size of less than 80 MB.

Ephemeral

Everything Talos writes to its disk is either replicated or reconstructable. Since the controlplane is high availability, the loss of any node will cause neither service disruption nor loss of data. No writes are even allowed to the vast majority of the filesystem. We even call the writable partition “ephemeral” to keep this idea always in focus.

Secure

Talos has always been designed with security in mind. With its immutability, its minimalism, its signing, and its componentized design, we are able to simply bypass huge classes of vulnerabilities. Moreover, because of the way we have designed Talos, we are able to take advantage of a number of additional settings, such as the recommendations of the Kernel Self Protection Project (KSPP) and the complete disablement of dynamic modules.

There are no passwords in Talos. All networked communication is encrypted and key-authenticated. The Talos certificates are short-lived and automatically-rotating. Kubernetes is always constructed with its own separate PKI structure which is enforced.

Declarative

Everything that can be configured in Talos is configured through a single YAML manifest. There is no scripting and there are no procedural steps. Everything is defined by the one declarative YAML file. This configuration covers both Talos itself and the Kubernetes cluster it forms.

This is achievable because Talos is tightly focused on doing one thing: running Kubernetes in the easiest, most secure, most reliable way it can.

2 - Concepts

Platform

Mode

Endpoint

Node

3 - Architecture

Talos is designed to be atomic in deployment and modular in composition.

It is atomic in the sense that the entirety of Talos is distributed as a single, self-contained image, which is versioned, signed, and immutable.

It is modular in the sense that it is composed of many separate components which have clearly defined gRPC interfaces which facilitate internal flexibility and external operational guarantees.

There are a number of components which comprise Talos. All of the main Talos components communicate with each other by gRPC, through a socket on the local machine. This imposes a clear separation of concerns and ensures that changes over time which affect the interoperation of components are a part of the public git record. The benefit is that each component may be iterated and changed as its needs dictate, so long as the external API is controlled. This is a key component in reducing coupling and maintaining modularity.

4 - Components

In this section we will discuss the various components of which Talos is comprised.

Components

| Component | Description |
|---|---|
| apid | When interacting with Talos, the gRPC API endpoint you interact with directly is provided by apid. apid acts as the gateway for all component interactions and forwards the requests to routerd. |
| containerd | An industry-standard container runtime with an emphasis on simplicity, robustness and portability. To learn more see the containerd website. |
| machined | Talos replacement for the traditional Linux init-process. Specially designed to run Kubernetes and does not allow starting arbitrary user services. |
| networkd | Handles all of the host level network configuration. Configuration is defined under the networking key. |
| timed | Handles the host time synchronization by acting as an NTP client. |
| kernel | The Linux kernel included with Talos is configured according to the recommendations outlined in the Kernel Self Protection Project. |
| routerd | Responsible for routing an incoming API request from apid to the appropriate backend (e.g. networkd, machined and timed). |
| trustd | To run and operate a Kubernetes cluster a certain level of trust is required. Based on the concept of a ‘Root of Trust’, trustd is a simple daemon responsible for establishing trust within the system. |
| udevd | Implementation of eudev into machined. eudev is Gentoo’s fork of udev, systemd’s device file manager for the Linux kernel. It manages device nodes in /dev and handles all user space actions when adding or removing devices. To learn more see the Gentoo Wiki. |

apid

When interacting with Talos, the gRPC API endpoint you will interact with directly is apid.

Apid acts as the gateway for all component interactions.

Apid provides a mechanism to route requests to the appropriate destination when running on a control plane node.

We’ll use some examples below to illustrate what apid is doing.

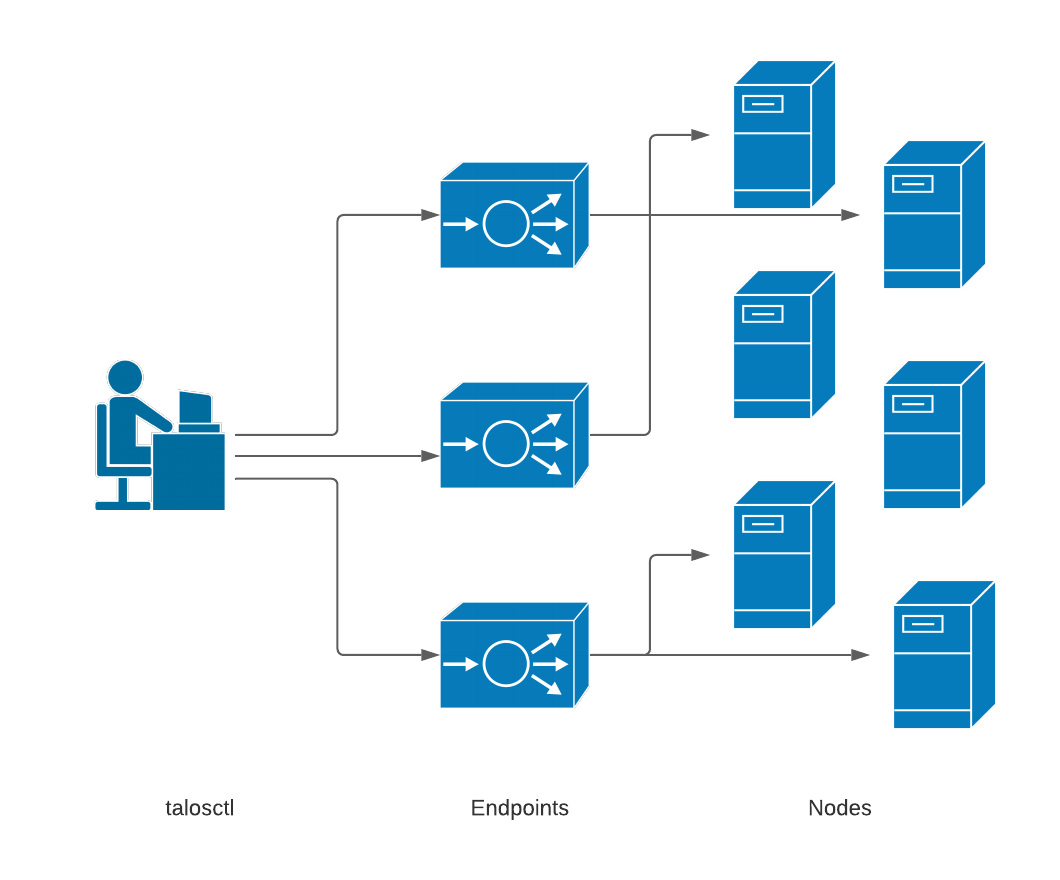

When a user wants to interact with a Talos component via talosctl, there are two flags that control the interaction with apid.

The -e | --endpoints flag is used to denote which Talos node (via apid) should handle the connection.

Typically this is a public facing server.

The -n | --nodes flag is used to denote which Talos node(s) should respond to the request.

If --nodes is not specified, the first endpoint will be used.

Note: Typically there will be an endpoint already defined in the Talos config file. Optionally, nodes can be included here as well.
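The fallback rule for --nodes can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not talosctl's actual code; the function name `resolve_nodes` and its parameters are hypothetical.

```python
def resolve_nodes(endpoints, node_flags=()):
    """Return the list of nodes that should answer a request.

    endpoints: hosts given with -e / --endpoints (or from the config file).
    node_flags: raw values of every -n / --nodes flag on the command line.
    """
    nodes = []
    for value in node_flags:
        # Each -n value may itself be a comma-separated list of nodes.
        nodes.extend(part.strip() for part in value.split(",") if part.strip())
    if nodes:
        return nodes
    if not endpoints:
        raise ValueError("no endpoint configured")
    # Fallback described in the text: the first endpoint answers the request.
    return [endpoints[0]]
```

For example, `resolve_nodes(["cluster.talos.dev"])` yields only the endpoint itself, while passing `["node01,node02", "node03"]` as the second argument yields all three nodes.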

For example, if a user wants to interact with machined, a command like talosctl -e cluster.talos.dev memory may be used.

$ talosctl -e cluster.talos.dev memory

NODE TOTAL USED FREE SHARED BUFFERS CACHE AVAILABLE

cluster.talos.dev 7938 1768 2390 145 53 3724 6571

In this case, talosctl is interacting with apid running on cluster.talos.dev, which forwards the request to the machined API.

If we wanted to extend our example to retrieve memory from another node in our cluster, we could use the command talosctl -e cluster.talos.dev -n node02 memory.

$ talosctl -e cluster.talos.dev -n node02 memory

NODE TOTAL USED FREE SHARED BUFFERS CACHE AVAILABLE

node02 7938 1768 2390 145 53 3724 6571

The apid instance on cluster.talos.dev receives the request and forwards it to apid running on node02, which forwards the request to the machined API.

We can further extend our example to retrieve memory for all nodes in our cluster by appending additional -n node flags or using a comma-separated list of nodes (-n node01,node02,node03):

$ talosctl -e cluster.talos.dev -n node01 -n node02 -n node03 memory

NODE TOTAL USED FREE SHARED BUFFERS CACHE AVAILABLE

node01 7938 871 4071 137 49 2945 7042

node02 257844 14408 190796 18138 49 52589 227492

node03 257844 1830 255186 125 49 777 254556

The apid instance on cluster.talos.dev receives the request and forwards it to node01, node02, and node03, which then forward the request to their local machined API.
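The forwarding pattern in these examples can be simulated as follows. This is a toy model under stated assumptions: the real components speak gRPC, while here each node's machined API is stood in for by a plain Python callable, and `handle_request` and the `backends` mapping are hypothetical names.

```python
def handle_request(endpoint, nodes, backends, method):
    """Simulate apid on `endpoint` serving `method` for each node in `nodes`.

    backends maps a node name to a dict of callables that stand in for
    that node's local machined API.
    """
    replies = {}
    for node in nodes:
        if node == endpoint:
            hops = [endpoint]          # served locally, nothing to forward
        else:
            hops = [endpoint, node]    # endpoint apid -> apid on the target node
        replies[node] = {"hops": hops, "data": backends[node][method]()}
    return replies


# Hypothetical two-node cluster reusing numbers from the sample output above.
backends = {
    "cluster.talos.dev": {"memory": lambda: {"total": 7938}},
    "node02": {"memory": lambda: {"total": 257844}},
}
replies = handle_request(
    "cluster.talos.dev", ["cluster.talos.dev", "node02"], backends, "memory"
)
```

Each reply is keyed by the node that produced it, which mirrors the NODE column in the talosctl output.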

containerd

Containerd provides the container runtime to launch workloads on Talos as well as Kubernetes.

Talos services are namespaced under the system namespace in containerd whereas the Kubernetes services are namespaced under the k8s.io namespace.

machined

A common theme throughout the design of Talos is minimalism.

We believe strongly in the UNIX philosophy that each program should do one job well.

The init included in Talos is one example of this, and we are calling it “machined”.

We wanted to create a focused init that had one job - run Kubernetes.

To that end, machined is relatively static in that it does not allow for arbitrary user-defined services.

Only the services necessary to run Kubernetes and manage the node are available.

This includes:

networkd

Networkd handles all of the host level network configuration.

Configuration is defined under the networking key.

By default, we attempt to issue a DHCP request for every interface on the server. This can be overridden by supplying one of the following kernel arguments:

- talos.network.interface.ignore - specify a list of interfaces to skip discovery on

- ip - ip=<client-ip>:<server-ip>:<gw-ip>:<netmask>:<hostname>:<device>:<autoconf>:<dns0-ip>:<dns1-ip>:<ntp0-ip> as documented in the kernel here

  - ex, ip=10.0.0.99:::255.0.0.0:control-1:eth0:off:10.0.0.1
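To make the field order of the ip= argument concrete, here is a small Python sketch that splits such a value into named fields. It is not how networkd parses the argument; `parse_ip_arg` and `IP_FIELDS` are hypothetical names, and the sketch assumes IPv4 addresses, since it splits on every colon.

```python
# Field order taken from the ip= template shown above.
IP_FIELDS = (
    "client_ip", "server_ip", "gw_ip", "netmask", "hostname",
    "device", "autoconf", "dns0_ip", "dns1_ip", "ntp0_ip",
)


def parse_ip_arg(arg):
    """Split an `ip=...` kernel argument into named fields.

    Empty fields and omitted trailing fields are returned as None.
    """
    prefix = "ip="
    if not arg.startswith(prefix):
        raise ValueError("expected an argument starting with 'ip='")
    values = arg[len(prefix):].split(":")
    if len(values) > len(IP_FIELDS):
        raise ValueError("too many fields in ip= argument")
    # Pad omitted trailing fields so every name gets a value.
    values += [""] * (len(IP_FIELDS) - len(values))
    return {name: (value or None) for name, value in zip(IP_FIELDS, values)}


parsed = parse_ip_arg("ip=10.0.0.99:::255.0.0.0:control-1:eth0:off:10.0.0.1")
```

In the example from the text, the server and gateway fields are empty, autoconf is off, and the final value lands in the first DNS field.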

timed

Timed handles the host time synchronization.

kernel

The Linux kernel included with Talos is configured according to the recommendations outlined in the Kernel Self Protection Project (KSPP).

trustd

Security is one of the highest priorities within Talos. Running and operating a Kubernetes cluster requires a certain level of trust. For example, orchestrating the bootstrap of a highly available control plane requires the distribution of sensitive PKI data.

To that end, we created trustd.

Based on the concept of a Root of Trust, trustd is a simple daemon responsible for establishing trust within the system.

Once trust is established, various methods become available to the trustee.

It can, for example, accept a write request from another node to place a file on disk.

Additional methods and capability will be added to the trustd component in support of new functionality in the rest of the Talos environment.

udevd

Udevd handles the kernel device notifications and sets up the necessary links in /dev.

5 - Upgrades

Talos

The upgrade process for Talos, like everything else, begins with an API call. This call tells a node the installer image to use to perform the upgrade. Each Talos version corresponds to an installer with the same version, such that the version of the installer is the version of Talos which will be installed.

Because Talos is image based, even at run-time, upgrading Talos is almost exactly the same set of operations as installing Talos, with the difference that the system has already been initialized with a configuration.

An upgrade makes use of an A-B image scheme in order to facilitate rollbacks.

This scheme retains the one previous Talos kernel and OS image following each upgrade.

If an upgrade fails to boot, Talos will roll back to the previous version.

Likewise, Talos may be manually rolled back via API (or talosctl rollback).

This will simply update the boot reference and reboot.
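The A-B scheme can be pictured as two slots, one booted and one kept for rollback. The Python model below is a rough sketch under assumptions: the class `BootSlots`, its methods, and the version strings are hypothetical, and modelling a manual rollback as a swap of the two slots is a guess at what "update the boot reference" amounts to.

```python
class BootSlots:
    """Two image slots: the one currently booted and one kept for rollback."""

    def __init__(self, version):
        self.current = version
        self.previous = None

    def upgrade(self, new_version, boots_ok):
        """Install new_version; keep the old image if the new one fails to boot."""
        if not boots_ok:
            # Failed boot: the boot reference stays on the old image.
            return False
        # Only the one previous image is retained after a successful upgrade.
        self.previous, self.current = self.current, new_version
        return True

    def rollback(self):
        """Manual rollback: point the boot reference at the previous image."""
        if self.previous is None:
            raise RuntimeError("no previous image to roll back to")
        self.current, self.previous = self.previous, self.current


slots = BootSlots("v1")
slots.upgrade("v2", boots_ok=True)    # current is v2, previous is v1
slots.upgrade("v3", boots_ok=False)   # fails to boot, current stays v2
slots.upgrade("v3", boots_ok=True)    # current is v3, previous is v2
slots.rollback()                      # current is v2 again
```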

An upgrade can preserve data or not.

If Talos is told to NOT preserve data, it will wipe its ephemeral partition, remove itself from the etcd cluster (if it is a control node), and generally make itself as pristine as is possible.

The default for this option is likely to change over time, so if your setup has a preference one way or the other, it is better to specify it explicitly. We do try to always be “safe” with this setting.

Sequence

When a Talos node receives the upgrade command, the first thing it does is cordon itself in Kubernetes, to avoid receiving any new workload. It then starts to drain away its existing workload.

NOTE: If any of your workloads is sensitive to being shut down ungracefully, be sure to use the lifecycle.preStop Pod spec.

Once all of the workload Pods are drained, Talos will start shutting down its internal processes.

If it is a control node, this will include etcd.

If preserve is not enabled, Talos will even leave etcd membership.

(Don’t worry about this; we make sure the etcd cluster is healthy and that it will remain healthy after our node departs, before we allow this to occur.)

Once all the processes are stopped and the services are shut down, all of the filesystems will be unmounted. This allows Talos to produce a very clean upgrade, as close as possible to a pristine system. We verify the disk and then perform the actual image upgrade.

Finally, we tell the bootloader to boot once with the new kernel and OS image. Then we reboot.

After the node comes back up and Talos verifies itself, it will make permanent the bootloader change, rejoin the cluster, and finally uncordon itself to receive new workloads.
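The sequence above can be summarised as an ordered plan. The Python sketch below only restates the prose as a list: the step names and the function `plan_upgrade` are hypothetical, and the exact position of the etcd steps relative to the other internal processes is an assumption.

```python
# Steps every node goes through, in the order given in the text.
BASE_STEPS = [
    "cordon node",
    "drain workloads",
    "stop internal processes",
    "unmount filesystems",
    "verify disk",
    "write new image",
    "set bootloader to boot once with new image",
    "reboot",
    "verify new system",
    "make bootloader change permanent",
    "rejoin cluster",
    "uncordon node",
]


def plan_upgrade(control_plane, preserve):
    """Return the ordered list of steps for one node's upgrade."""
    steps = list(BASE_STEPS)
    if control_plane:
        etcd_steps = ["stop etcd"]
        if not preserve:
            # Without preserve, the node also gives up its etcd membership.
            etcd_steps.insert(0, "leave etcd membership")
        # Assumed placement: etcd is handled before filesystems are unmounted.
        at = steps.index("unmount filesystems")
        steps[at:at] = etcd_steps
    return steps
```

Calling `plan_upgrade(control_plane=True, preserve=False)` gives the longest plan, with both etcd steps placed before the unmount.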

FAQs

Q. What happens if an upgrade fails?

A. There are many potential ways an upgrade can fail, but we always try to do the safe thing.

The most common first failure is an invalid installer image reference. In this case, Talos will fail to download the upgraded image and will abort the upgrade.

Sometimes, Talos is unable to successfully kill off all of the disk access points, in which case it cannot safely unmount all filesystems to effect the upgrade. In this case, it will abort the upgrade and reboot.

It is possible (especially with test builds) that the upgraded Talos system will fail to start. In this case, the node will be rebooted, and the bootloader will automatically use the previous Talos kernel and image, thus effectively aborting the upgrade.

Lastly, it is possible that Talos itself will upgrade successfully, start up, and rejoin the cluster but your workload will fail to run on it, for whatever reason.

This is when you would use the talosctl rollback command to revert to the previous Talos version.

Q. Can upgrades be scheduled?

A. We provide the Talos Controller Manager to coordinate upgrades of a cluster. Additionally, because the upgrade sequence is API-driven, you can easily tie this in to your own business logic to schedule and coordinate your upgrades.

Q. Can the upgrade process be observed?

A. The Talos Controller Manager does this internally, watching the logs of the node being upgraded, using the streaming log API of Talos.

You can do the same thing using the talosctl logs --follow machined command.

Q. Are worker node upgrades handled differently from control plane node upgrades?

A. Short answer: no.

Long answer: Both node types follow the same set procedure. However, since control plane nodes run additional services, such as etcd, there are some extra steps and checks performed on them. From the user’s standpoint, however, the processes are identical.

There are also additional restrictions on upgrading control plane nodes.

For instance, Talos will refuse to upgrade a control plane node if that upgrade will cause a loss of quorum for etcd.

This can generally be worked around by setting preserve to true.

Q. Will an upgrade try to do the whole cluster at once? Can I break my cluster by upgrading everything?

A. No.

Nothing prevents the user from sending any number of near-simultaneous upgrades to each node of the cluster. While most people would not attempt to do this, it may be the desired behaviour in certain situations.

If, however, multiple control plane nodes are asked to upgrade at the same time, Talos will protect itself by making sure only one control plane node upgrades at any time, through its checking of etcd quorum. A lease is taken out by the winning control plane node, and no other control plane node is allowed to execute the upgrade until the lease is released and the etcd cluster is healthy and will be healthy when the next node performs its upgrade.
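As a rough illustration of the lease and quorum idea, consider the following Python sketch. It is a deliberately simplified model, not the check Talos performs: `may_upgrade` and its parameters are hypothetical, and it assumes the upgrading node is simply unavailable for the duration while keeping its membership. The majority formula itself is the standard one for Raft-based stores such as etcd.

```python
def quorum(member_count):
    """Majority size for a Raft-based store such as etcd."""
    return member_count // 2 + 1


def may_upgrade(node, members, healthy, lease_holder=None):
    """Decide whether control plane `node` may start upgrading (hypothetical rule)."""
    if lease_holder not in (None, node):
        return False  # another control plane node holds the upgrade lease
    if set(healthy) != set(members):
        return False  # the etcd cluster is not fully healthy right now
    # Assumption: the node is unavailable while it upgrades, and the
    # remaining healthy members must still form a majority.
    remaining = len(set(healthy) - {node})
    return remaining >= quorum(len(members))


members = ["cp1", "cp2", "cp3"]  # hypothetical control plane node names
```

With three healthy members and no lease taken, `may_upgrade("cp1", members, members)` is allowed; it is refused while another node holds the lease or while any member is unhealthy.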

Q. Is there an operator or controller which will keep my nodes updated automatically?

A. Yes.

We provide the Talos Controller Manager to perform this maintenance in a simple, controllable fashion.

Kubernetes

Kubernetes upgrades with Talos also start with an API call.

6 - FAQs

How is Talos different from other container optimized Linux distros?

Talos shares a lot of attributes with other distros, but there are some important differences. Talos integrates tightly with Kubernetes, and is not meant to be a general-purpose operating system. The most important difference is that Talos is fully controlled by an API via a gRPC interface, instead of an ordinary shell. We don’t ship SSH, and there is no console access. Removing components such as these has allowed us to dramatically reduce the footprint of Talos, and in turn, improve a number of other areas like security, predictability, reliability, and consistency across platforms. It’s a big change from how operating systems have been managed in the past, but we believe that API-driven OSes are the future.

Why no shell or SSH?

Since Talos is fully API-driven, all maintenance and debugging operations should be possible via the OS API.

We would like for Talos users to start thinking about what a “machine” is in the context of a Kubernetes cluster.

That is, that a Kubernetes cluster can be thought of as one massive machine, and the nodes are merely additional, undifferentiated resources.

We don’t want humans to focus on the nodes, but rather on the machine that is the Kubernetes cluster.

Should an issue arise at the node level, talosctl should provide the necessary tooling to assist in the identification, debugging, and remediation of the issue.

However, the API is based on the Principle of Least Privilege, and exposes only a limited set of methods.

We envision Talos being a great place for the application of control theory in order to provide a self-healing platform.

Why the name “Talos”?

Talos was an automaton created by the Greek god of the forge to protect the island of Crete. He would patrol the coast and enforce laws throughout the land. We felt it was a fitting name for a security-focused operating system designed to run Kubernetes.

7 - talosctl

The talosctl tool packs a lot of power into a small package.

It acts as a reference implementation for the Talos API, but it also handles a lot of conveniences for the use of Talos and its clusters.

Client Configuration

Talosctl configuration is located in $XDG_CONFIG_HOME/talos/config.yaml if $XDG_CONFIG_HOME is defined.

Otherwise it is in $HOME/.talos/config.

The location can always be overridden by the TALOSCONFIG environment variable or the --talosconfig parameter.
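The lookup order can be sketched in Python as follows. The function `talosconfig_path` is hypothetical and only restates the rules above; in particular, the text does not say whether --talosconfig or TALOSCONFIG wins when both are set, so giving the flag priority is an assumption.

```python
import posixpath


def talosconfig_path(flag=None, env=None):
    """Return the client configuration path for the given flag and environment.

    flag: value of --talosconfig, if given.
    env: mapping of environment variables (a plain dict here).
    """
    env = env or {}
    if flag:
        return flag  # assumption: the command-line flag takes priority
    if env.get("TALOSCONFIG"):
        return env["TALOSCONFIG"]
    xdg = env.get("XDG_CONFIG_HOME")
    if xdg:
        return posixpath.join(xdg, "talos", "config.yaml")
    return posixpath.join(env.get("HOME", ""), ".talos", "config")
```

For instance, with only HOME set to /home/alice (a made-up user), the result is /home/alice/.talos/config.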

Like kubectl, talosctl uses the concept of configuration contexts, so any number of Talos clusters can be managed with a single configuration file.

Unlike kubectl, it also comes with some intelligent tooling to manage the merging of new contexts into the config.

The default operation is a non-destructive merge, where if a context of the same name already exists in the file, the context to be added is renamed by appending an index number.

You can easily overwrite instead, as well.

See the talosctl config help for more information.
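The non-destructive merge can be illustrated with a short Python sketch. The function `merge_context` and its `overwrite` parameter are hypothetical, and the `name-1`, `name-2` naming format is an assumption: the text only says an index number is appended.

```python
def merge_context(contexts, name, context, overwrite=False):
    """Add `context` to `contexts` and return the name it was stored under."""
    if overwrite or name not in contexts:
        contexts[name] = context
        return name
    # Name clash: append the first free index instead of overwriting.
    index = 1
    while f"{name}-{index}" in contexts:
        index += 1
    new_name = f"{name}-{index}"
    contexts[new_name] = context
    return new_name


contexts = {"prod": {"endpoints": ["10.0.0.2"]}}          # made-up data
stored_as = merge_context(contexts, "prod", {"endpoints": ["10.0.1.2"]})
```

Here the existing prod context is left untouched and the new one is stored under a renamed key.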

Endpoints and Nodes

The endpoints are the communication endpoints to which the client directly talks.

These can be load balancers, DNS hostnames, a list of IPs, etc.

Further, if multiple endpoints are specified, the client will automatically load balance and fail over between them.

In general, it is recommended that these point to the set of control plane nodes, either directly or through a reverse proxy or load balancer.

Each endpoint will automatically proxy requests destined to another node through it, so it is not necessary to change the endpoint configuration just because you wish to talk to a different node within the cluster.

Endpoints do, however, need to be members of the same Talos cluster as the target node, because these proxied connections rely on certificate-based authentication.
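The failover half of this behaviour can be sketched as a simple loop. This is not the client's real implementation, which works at the gRPC level and also load balances; `call_with_failover`, `fake_send`, and the endpoint names are hypothetical.

```python
def call_with_failover(endpoints, send):
    """Try each endpoint in order and return (endpoint, reply) for the first success."""
    errors = []
    for endpoint in endpoints:
        try:
            return endpoint, send(endpoint)
        except ConnectionError as exc:
            # Remember the failure and move on to the next endpoint.
            errors.append((endpoint, str(exc)))
    raise ConnectionError(f"all endpoints failed: {errors}")


def fake_send(endpoint):
    """Stand-in transport: cp1 is down, every other endpoint answers."""
    if endpoint == "cp1":
        raise ConnectionError("connection refused")
    return "ok"


result = call_with_failover(["cp1", "cp2", "cp3"], fake_send)
```

Because the target node is chosen separately, failing over to another endpoint does not change which node answers the request.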

The node is the target node on which you wish to perform the API call.

While you can configure the target node (or even set of target nodes) inside the talosctl configuration file, it is often useful to simply and explicitly declare the target node(s) using the -n or --nodes command-line parameter.

Keep in mind when specifying nodes that their IPs and/or hostnames are as seen by the endpoint servers, not as seen from the client. This is because all connections are proxied first through the endpoints.

Kubeconfig

The configuration for accessing a Talos Kubernetes cluster is obtained with talosctl.

By default, talosctl will safely merge the cluster into the default kubeconfig.

Like talosctl itself, in the event of a naming conflict, the new context name will be index-appended before insertion.

The --force option can be used to overwrite instead.

You can also specify an alternate path by supplying it as a positional parameter.

Thus, like Talos clusters themselves, talosctl makes it easy to manage any number of Kubernetes clusters from the same workstation.

Commands

Please see the CLI reference for the entire list of commands which are available from talosctl.