
Guides

- 1: Adding a proprietary kernel module to Talos Linux

- 2: Advanced Networking

- 3: Air-gapped Environments

- 4: Configuring Ceph with Rook

- 5: Configuring Certificate Authorities

- 6: Configuring Containerd

- 7: Configuring Corporate Proxies

- 8: Configuring Network Connectivity

- 9: Configuring Pull Through Cache

- 10: Configuring the Cluster Endpoint

- 11: Configuring Wireguard Network

- 12: Customizing the Kernel

- 13: Customizing the Root Filesystem

- 14: Deploying Cilium CNI

- 15: Deploying Metrics Server

- 16: Disaster Recovery

- 17: Discovery

- 18: Disk Encryption

- 19: Editing Machine Configuration

- 20: KubeSpan

- 21: Logging

- 22: Managing PKI

- 23: Resetting a Machine

- 24: Role-based access control (RBAC)

- 25: Storage

- 26: Troubleshooting Control Plane

- 27: Upgrading Kubernetes

- 28: Upgrading Talos

- 29: Virtual (shared) IP

1 - Adding a proprietary kernel module to Talos Linux

Patching and building the kernel image

- Clone the pkgs repository from GitHub and check out the revision corresponding to your version of Talos Linux:

git clone https://github.com/talos-systems/pkgs pkgs && cd pkgs
git checkout v0.8.0

- Clone the Linux kernel and check out the revision that pkgs uses (this can be found in kernel/kernel-prepare/pkg.yaml and it will be something like the following: https://cdn.kernel.org/pub/linux/kernel/v5.x/linux-x.xx.x.tar.xz):

git clone https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git && cd linux
git checkout v5.15

- Your module will need to be converted to be in-tree. The steps for this are different depending on the complexity of the module to port, but generally it would involve moving the module source code into the drivers tree and creating a new Makefile and Kconfig.

- Stage your changes in Git with git add -A.

- Run git diff --cached --no-prefix > foobar.patch to generate a patch from your changes.

- Copy this patch to kernel/kernel/patches in the pkgs repo.

- Add a patch line in the prepare segment of kernel/kernel/pkg.yaml:

patch -p0 < /pkg/patches/foobar.patch

- Build the kernel image. Make sure you are logged in to ghcr.io before running this command, and you can change or omit PLATFORM depending on what you want to target.

make kernel PLATFORM=linux/amd64 USERNAME=your-username PUSH=true

- Make a note of the image name the make command outputs.
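The staging and patch-export steps can be tried in a throwaway tree before touching a real kernel checkout. This is a minimal sketch, assuming git is installed; the drivers/foobar directory and its Makefile line are hypothetical placeholders, not part of any real module.

```shell
#!/bin/sh
# Sketch: build a throwaway tree, stage a new in-tree "module" directory,
# and export the staged changes as a patch without a/ b/ path prefixes.
# drivers/foobar and its Makefile content are hypothetical placeholders.
make_demo_patch() (
  set -e
  work="$(mktemp -d)"
  cd "$work"
  git init -q tree
  cd tree
  mkdir -p drivers/foobar
  printf 'obj-m += foobar.o\n' > drivers/foobar/Makefile
  git add -A
  git diff --cached --no-prefix > "$work/foobar.patch"
  echo "$work/foobar.patch"
)

patch_file="$(make_demo_patch)"
# With --no-prefix the paths in the patch are relative to the tree root,
# which is why the patch is applied with -p0.
grep '^+++ ' "$patch_file"
```

The header line printed at the end shows the path exactly as patch -p0 will see it, which is a quick way to confirm that the patch and the -p level agree.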

Building the installer image

- Copy the following into a new Dockerfile:

FROM scratch AS customization
COPY --from=ghcr.io/your-username/kernel:<kernel version> /lib/modules /lib/modules

FROM ghcr.io/talos-systems/installer:<talos version>
COPY --from=ghcr.io/your-username/kernel:<kernel version> /boot/vmlinuz /usr/install/${TARGETARCH}/vmlinuz

- Run the following to build and push the installer:

INSTALLER_VERSION=<talos version>
IMAGE_NAME="ghcr.io/your-username/talos-installer:$INSTALLER_VERSION"
DOCKER_BUILDKIT=0 docker build --build-arg RM="/lib/modules" -t "$IMAGE_NAME" . && docker push "$IMAGE_NAME"

Deploying to your cluster

talosctl upgrade --image ghcr.io/your-username/talos-installer:<talos version> --preserve=true

2 - Advanced Networking

Static Addressing

Static addressing consists of specifying addresses, routes (remember to add your default gateway), and interface.

Most likely you’ll also want to define the nameservers so you have properly functioning DNS.

machine:
  network:
    hostname: talos
    nameservers:
      - 10.0.0.1
    interfaces:
      - interface: eth0
        addresses:
          - 10.0.0.201/8
        mtu: 8765
        routes:
          - network: 0.0.0.0/0
            gateway: 10.0.0.1
      - interface: eth1
        ignore: true
  time:
    servers:
      - time.cloudflare.com

Additional Addresses for an Interface

In some environments you may need to set additional addresses on an interface. In the following example, we set two additional addresses on the loopback interface.

machine:
  network:
    interfaces:
      - interface: lo
        addresses:
          - 192.168.0.21/24
          - 10.2.2.2/24

Bonding

The following example shows how to create a bonded interface.

machine:
  network:
    interfaces:
      - interface: bond0
        dhcp: true
        bond:
          mode: 802.3ad
          lacpRate: fast
          xmitHashPolicy: layer3+4
          miimon: 100
          updelay: 200
          downdelay: 200
          interfaces:
            - eth0
            - eth1

VLANs

To set up VLANs on a specific device, specify an array of VLANs to add. The master device may be configured without addressing by setting dhcp to false.

machine:
  network:
    interfaces:
      - interface: eth0
        dhcp: false
        vlans:
          - vlanId: 100
            addresses:
              - "192.168.2.10/28"
            routes:
              - network: 0.0.0.0/0
                gateway: 192.168.2.1

3 - Air-gapped Environments

In this guide we will create a Talos cluster running in an air-gapped environment with all the required images being pulled from an internal registry.

We will use the QEMU provisioner available in talosctl to create a local cluster, but the same approach could be used to deploy Talos in bigger air-gapped networks.

Requirements

The following are requirements for this guide:

- Docker 18.03 or greater

- Requirements for the Talos QEMU cluster

Identifying Images

In air-gapped environments, access to the public Internet is restricted, so Talos can’t pull images from public Docker registries (docker.io, ghcr.io, etc.)

We need to identify the images required to install and run Talos.

The same strategy can be used for images required by custom workloads running on the cluster.

The talosctl images command provides a list of default images used by the Talos cluster (with default configuration settings).

To print the list of images, run:

talosctl images

This list contains images required by a default deployment of Talos. There might be additional images required for the workloads running on this cluster, and those should be added to this list.

Preparing the Internal Registry

As access to the public registries is restricted, we have to run an internal Docker registry. In this guide, we will launch the registry on the same machine using Docker:

$ docker run -d -p 6000:5000 --restart always --name registry-airgapped registry:2

1bf09802bee1476bc463d972c686f90a64640d87dacce1ac8485585de69c91a5

This registry will accept connections on port 6000 on the host IPs. The registry is empty by default, so we have to fill it with the images required by Talos.

First, we pull all the images to our local Docker daemon:

$ for image in `talosctl images`; do docker pull $image; done

v0.12.0-amd64: Pulling from coreos/flannel

Digest: sha256:6d451d92c921f14bfb38196aacb6e506d4593c5b3c9d40a8b8a2506010dc3e10

...

All images are now stored in the Docker daemon store:

$ docker images

ghcr.io/talos-systems/install-cni v0.3.0-12-g90722c3 980d36ee2ee1 5 days ago 79.7MB

k8s.gcr.io/kube-proxy-amd64 v1.20.0 33c60812eab8 2 weeks ago 118MB

...

Now we need to re-tag them so that we can push them to our local registry.

We are going to replace the first component of the image name (before the first slash) with our registry endpoint 127.0.0.1:6000:

$ for image in `talosctl images`; do \
    docker tag $image `echo $image | sed -E 's#^[^/]+/#127.0.0.1:6000/#'`; \
  done

As the next step, we push images to the internal registry:

$ for image in `talosctl images`; do \
    docker push `echo $image | sed -E 's#^[^/]+/#127.0.0.1:6000/#'`; \
  done
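The renaming applied by the tag and push loops can be checked on its own, without Docker. The sketch below wraps the same sed expression in a helper; the name mirror_ref and the two sample references are illustrative assumptions, chosen in the style of the talosctl images output.

```shell
#!/bin/sh
# Hypothetical helper: rewrite an image reference so that its registry
# component (everything before the first slash) points at the internal registry.
REGISTRY="127.0.0.1:6000"

mirror_ref() {
  printf '%s\n' "$1" | sed -E "s#^[^/]+/#${REGISTRY}/#"
}

# Sample references in the style printed by `talosctl images`.
for image in \
  ghcr.io/talos-systems/installer:v0.14.0 \
  k8s.gcr.io/kube-proxy-amd64:v1.20.0
do
  echo "$image -> $(mirror_ref "$image")"
done
```

A reference that contains no slash at all does not match the expression and is returned unchanged, so such images would need separate handling.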

We can now verify that the images are pushed to the registry:

$ curl http://127.0.0.1:6000/v2/_catalog

{"repositories":["autonomy/kubelet","coredns","coreos/flannel","etcd-development/etcd","kube-apiserver-amd64","kube-controller-manager-amd64","kube-proxy-amd64","kube-scheduler-amd64","talos-systems/install-cni","talos-systems/installer"]}

Note: images in the registry don’t have the registry endpoint prefix anymore.
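Reading the catalog by eye becomes error-prone as the image list grows. The following sketch checks whether the repository of a given image appears in the catalog response; the helper names repo_name and in_catalog are hypothetical, and CATALOG is a shortened sample of the response above rather than live output.

```shell
#!/bin/sh
# Sketch: check that the repository of an image appears in the registry catalog.
# CATALOG is a shortened sample of the response shown above; in practice it
# could come from: curl -s http://127.0.0.1:6000/v2/_catalog
CATALOG='{"repositories":["autonomy/kubelet","coredns","coreos/flannel","talos-systems/installer"]}'

# Strip the registry component and the tag from an image reference.
repo_name() {
  printf '%s\n' "$1" | sed -E -e 's#^[^/]+/##' -e 's#:[^:/]+$##'
}

# Succeeds when the repository of the given image is listed in CATALOG.
in_catalog() {
  printf '%s' "$CATALOG" | grep -qF "\"$(repo_name "$1")\""
}

for image in ghcr.io/talos-systems/installer:v0.14.0 docker.io/library/busybox:latest; do
  if in_catalog "$image"; then
    echo "present: $image"
  else
    echo "missing: $image"
  fi
done
```

The match is a plain substring test on the quoted repository name, which is enough for a quick check but is not a JSON parser.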

Launching Talos in an Air-gapped Environment

For Talos to use the internal registry, we use the registry mirror feature to redirect all the image pull requests to the internal registry. This means that the registry endpoint (as the first component of the image reference) gets ignored, and all pull requests are sent directly to the specified endpoint.

We are going to use a QEMU-based Talos cluster for this guide, but the same approach works with Docker-based clusters as well. As QEMU-based clusters go through the Talos install process, they model a real air-gapped environment more closely.

The talosctl cluster create command provides conveniences for common configuration options.

The only required flag for this guide is --registry-mirror '*'=http://10.5.0.1:6000 which redirects every pull request to the internal registry.

The endpoint being used is 10.5.0.1, as this is the default bridge interface address which will be routable from the QEMU VMs (127.0.0.1 IP will be pointing to the VM itself).

$ sudo -E talosctl cluster create --provisioner=qemu --registry-mirror '*'=http://10.5.0.1:6000 --install-image=ghcr.io/talos-systems/installer:v0.14.0

validating CIDR and reserving IPs

generating PKI and tokens

creating state directory in "/home/smira/.talos/clusters/talos-default"

creating network talos-default

creating load balancer

creating dhcpd

creating master nodes

creating worker nodes

waiting for API

...

Note: --install-image should match the image which was copied into the internal registry in the previous step.

You can verify that the cluster is air-gapped by inspecting the registry logs: docker logs -f registry-airgapped.

Closing Notes

Running in an air-gapped environment might require additional configuration changes, for example using custom settings for DNS and NTP servers.

When scaling this guide to a bare-metal environment, the following Talos config snippet could be used as an equivalent of the --registry-mirror flag above:

machine:
  ...
  registries:
    mirrors:
      '*':
        endpoints:
          - http://10.5.0.1:6000/
...

Other implementations of Docker registry can be used in place of the Docker registry image used above to run the registry.

If required, auth can be configured for the internal registry (and custom TLS certificates if needed).

4 - Configuring Ceph with Rook

Preparation

Talos Linux reserves an entire disk for the OS installation, so machines with multiple available disks are needed for a reliable Ceph cluster with Rook and Talos Linux.

Rook requires that the block devices or partitions used by Ceph have no partitions or formatted filesystems before use.

Rook also requires a minimum Kubernetes version of v1.16 and Helm v3.0 for installation of charts.

It is highly recommended that the Rook Ceph overview is read and understood before deploying a Ceph cluster with Rook.

Installation

Creating a Ceph cluster with Rook requires two steps; first the Rook Operator needs to be installed which can be done with a Helm Chart.

The example below installs the Rook Operator into the rook-ceph namespace, which is the default for a Ceph cluster with Rook.

$ helm repo add rook-release https://charts.rook.io/release

"rook-release" has been added to your repositories

$ helm install --create-namespace --namespace rook-ceph rook-ceph rook-release/rook-ceph

W0327 17:52:44.277830 54987 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0327 17:52:44.612243 54987 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: rook-ceph

LAST DEPLOYED: Sun Mar 27 17:52:42 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Rook Operator has been installed. Check its status by running:

kubectl --namespace rook-ceph get pods -l "app=rook-ceph-operator"

Visit https://rook.io/docs/rook/latest for instructions on how to create and configure Rook clusters

Important Notes:

- You must customize the 'CephCluster' resource in the sample manifests for your cluster.

- Each CephCluster must be deployed to its own namespace, the samples use `rook-ceph` for the namespace.

- The sample manifests assume you also installed the rook-ceph operator in the `rook-ceph` namespace.

- The helm chart includes all the RBAC required to create a CephCluster CRD in the same namespace.

- Any disk devices you add to the cluster in the 'CephCluster' must be empty (no filesystem and no partitions).

Once that is complete, the Ceph cluster can be installed with the official Helm Chart. The Chart can be installed with default values, which will attempt to use all nodes in the Kubernetes cluster, and all unused disks on each node for Ceph storage, and make available block storage, object storage, as well as a shared filesystem. Generally more specific node/device/cluster configuration is used, and the Rook documentation explains all the available options in detail. For this example the defaults will be adequate.

$ helm install --create-namespace --namespace rook-ceph rook-ceph-cluster --set operatorNamespace=rook-ceph rook-release/rook-ceph-cluster

NAME: rook-ceph-cluster

LAST DEPLOYED: Sun Mar 27 18:12:46 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Ceph Cluster has been installed. Check its status by running:

kubectl --namespace rook-ceph get cephcluster

Visit https://rook.github.io/docs/rook/latest/ceph-cluster-crd.html for more information about the Ceph CRD.

Important Notes:

- You can only deploy a single cluster per namespace

- If you wish to delete this cluster and start fresh, you will also have to wipe the OSD disks using `sfdisk`

Now that the Ceph cluster configuration has been created, the Rook operator needs time to install the Ceph cluster and bring all the components online. The progression of the Ceph cluster state can be followed with the following command.

$ watch kubectl --namespace rook-ceph get cephcluster rook-ceph

Every 2.0s: kubectl --namespace rook-ceph get cephcluster rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL

rook-ceph /var/lib/rook 3 57s Progressing Configuring Ceph Mons

Depending on the size of the Ceph cluster and the availability of resources, it may take some time before the Ceph cluster becomes available, and with it the storage classes that can be used with Kubernetes Persistent Volumes.

$ kubectl --namespace rook-ceph get cephcluster rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL

rook-ceph /var/lib/rook 3 40m Ready Cluster created successfully HEALTH_OK

$ kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 77m

ceph-bucket rook-ceph.ceph.rook.io/bucket Delete Immediate false 77m

ceph-filesystem rook-ceph.cephfs.csi.ceph.com Delete Immediate true 77m

Talos Linux Considerations

It is important to note that a Rook Ceph cluster saves cluster information directly onto the node (by default dataDirHostPath is set to /var/lib/rook) which under Talos Linux is ephemeral.

This makes cluster management a little bit more involved, as any time a Talos Linux node is reconfigured or upgraded, the ephemeral partition is wiped.

When performing maintenance on a Talos Linux node with a Rook Ceph cluster, it is imperative that care be taken to maintain the health of the Ceph cluster, for instance when upgrading the Talos Linux version. Before upgrading, you should always check the health status of the Ceph cluster to ensure that it is healthy.

$ kubectl --namespace rook-ceph get cephclusters.ceph.rook.io rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL

rook-ceph /var/lib/rook 3 98m Ready Cluster created successfully HEALTH_OK

If it is, you can begin the upgrade process for the Talos Linux node, during which time the Ceph cluster will become unhealthy as the node is reconfigured. Before performing any other action on the Talos Linux nodes, the Ceph cluster must return to a healthy status.

$ talosctl upgrade --nodes 172.20.15.5 --image ghcr.io/talos-systems/installer:v0.14.3

NODE ACK STARTED

172.20.15.5 Upgrade request received 2022-03-27 20:29:55.292432887 +0200 CEST m=+10.050399758

$ kubectl --namespace rook-ceph get cephclusters.ceph.rook.io

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL

rook-ceph /var/lib/rook 3 99m Progressing Configuring Ceph Mgr(s) HEALTH_WARN

$ kubectl --namespace rook-ceph wait --timeout=1800s --for=jsonpath='{.status.ceph.health}=HEALTH_OK' cephcluster rook-ceph

cephcluster.ceph.rook.io/rook-ceph condition met

The above steps need to be performed for each Talos Linux node undergoing maintenance, one at a time.
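The per-node sequence can be written down as a plan before anything is run. The sketch below only prints the commands for each node and executes nothing; the second node address is a placeholder, the short first wait is an assumed stand-in for the manual health check, and the resource type is spelled out explicitly in the wait commands.

```shell
#!/bin/sh
# Sketch: print, for each node, the health gate, upgrade and wait commands
# from this section. Nothing is executed here; the node addresses and the
# installer image are placeholders to be replaced.
INSTALLER_IMAGE="ghcr.io/talos-systems/installer:v0.14.3"

# Print the command sequence for one node.
node_maintenance_plan() {
  node="$1"
  # Gate: only proceed when the cluster is healthy (assumed 60s timeout).
  echo "kubectl --namespace rook-ceph wait --timeout=60s --for=jsonpath='{.status.ceph.health}=HEALTH_OK' cephcluster rook-ceph"
  echo "talosctl upgrade --nodes ${node} --image ${INSTALLER_IMAGE}"
  # Wait for the cluster to recover before moving on to the next node.
  echo "kubectl --namespace rook-ceph wait --timeout=1800s --for=jsonpath='{.status.ceph.health}=HEALTH_OK' cephcluster rook-ceph"
}

for node in 172.20.15.5 172.20.15.6; do
  echo "# --- ${node} ---"
  node_maintenance_plan "$node"
done
```

Because the upgrade command returns as soon as the request is acknowledged, the final wait could succeed before the node has actually gone down; checking the reported health manually after each node remains advisable.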

Cleaning Up

Rook Ceph Cluster Removal

Removing a Rook Ceph cluster requires a few steps, starting with signalling to Rook that the Ceph cluster is really being destroyed. Then all Persistent Volumes (and Claims) backed by the Ceph cluster must be deleted, followed by the Storage Classes and the Ceph storage types.

$ kubectl --namespace rook-ceph patch cephcluster rook-ceph --type merge -p '{"spec":{"cleanupPolicy":{"confirmation":"yes-really-destroy-data"}}}'

cephcluster.ceph.rook.io/rook-ceph patched

$ kubectl delete storageclasses ceph-block ceph-bucket ceph-filesystem

storageclass.storage.k8s.io "ceph-block" deleted

storageclass.storage.k8s.io "ceph-bucket" deleted

storageclass.storage.k8s.io "ceph-filesystem" deleted

$ kubectl --namespace rook-ceph delete cephblockpools ceph-blockpool

cephblockpool.ceph.rook.io "ceph-blockpool" deleted

$ kubectl --namespace rook-ceph delete cephobjectstore ceph-objectstore

cephobjectstore.ceph.rook.io "ceph-objectstore" deleted

$ kubectl --namespace rook-ceph delete cephfilesystem ceph-filesystem

cephfilesystem.ceph.rook.io "ceph-filesystem" deleted

Once that is complete, the Ceph cluster itself can be removed, along with the Rook Ceph cluster Helm chart installation.

$ kubectl --namespace rook-ceph delete cephcluster rook-ceph

cephcluster.ceph.rook.io "rook-ceph" deleted

$ helm --namespace rook-ceph uninstall rook-ceph-cluster

release "rook-ceph-cluster" uninstalled

If needed, the Rook Operator can also be removed along with all the Custom Resource Definitions that it created.

$ helm --namespace rook-ceph uninstall rook-ceph

W0328 12:41:14.998307 147203 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

These resources were kept due to the resource policy:

[CustomResourceDefinition] cephblockpools.ceph.rook.io

[CustomResourceDefinition] cephbucketnotifications.ceph.rook.io

[CustomResourceDefinition] cephbuckettopics.ceph.rook.io

[CustomResourceDefinition] cephclients.ceph.rook.io

[CustomResourceDefinition] cephclusters.ceph.rook.io

[CustomResourceDefinition] cephfilesystemmirrors.ceph.rook.io

[CustomResourceDefinition] cephfilesystems.ceph.rook.io

[CustomResourceDefinition] cephfilesystemsubvolumegroups.ceph.rook.io

[CustomResourceDefinition] cephnfses.ceph.rook.io

[CustomResourceDefinition] cephobjectrealms.ceph.rook.io

[CustomResourceDefinition] cephobjectstores.ceph.rook.io

[CustomResourceDefinition] cephobjectstoreusers.ceph.rook.io

[CustomResourceDefinition] cephobjectzonegroups.ceph.rook.io

[CustomResourceDefinition] cephobjectzones.ceph.rook.io

[CustomResourceDefinition] cephrbdmirrors.ceph.rook.io

[CustomResourceDefinition] objectbucketclaims.objectbucket.io

[CustomResourceDefinition] objectbuckets.objectbucket.io

release "rook-ceph" uninstalled

$ kubectl delete crds cephblockpools.ceph.rook.io cephbucketnotifications.ceph.rook.io cephbuckettopics.ceph.rook.io \

cephclients.ceph.rook.io cephclusters.ceph.rook.io cephfilesystemmirrors.ceph.rook.io \

cephfilesystems.ceph.rook.io cephfilesystemsubvolumegroups.ceph.rook.io \

cephnfses.ceph.rook.io cephobjectrealms.ceph.rook.io cephobjectstores.ceph.rook.io \

cephobjectstoreusers.ceph.rook.io cephobjectzonegroups.ceph.rook.io cephobjectzones.ceph.rook.io \

cephrbdmirrors.ceph.rook.io objectbucketclaims.objectbucket.io objectbuckets.objectbucket.io

customresourcedefinition.apiextensions.k8s.io "cephblockpools.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephbucketnotifications.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephbuckettopics.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephclients.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephclusters.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephfilesystemmirrors.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephfilesystems.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephfilesystemsubvolumegroups.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephnfses.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephobjectrealms.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephobjectstores.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephobjectstoreusers.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephobjectzonegroups.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephobjectzones.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "cephrbdmirrors.ceph.rook.io" deleted

customresourcedefinition.apiextensions.k8s.io "objectbucketclaims.objectbucket.io" deleted

customresourcedefinition.apiextensions.k8s.io "objectbuckets.objectbucket.io" deleted

Talos Linux Rook Metadata Removal

If the Rook Operator is cleanly removed following the above process, the node metadata and disks should be clean and ready to be re-used.

In the case of an unclean cluster removal, there may still be a few instances of metadata stored on the system disk, as well as the partition information on the storage disks.

First the node metadata needs to be removed. Make sure to update nodeName with the actual name of a storage node that needs cleaning, and path with the Rook configuration dataDirHostPath set when installing the chart.

The following will need to be repeated for each node used in the Rook Ceph cluster.

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: disk-clean
spec:
  restartPolicy: Never
  nodeName: <storage-node-name>
  volumes:
  - name: rook-data-dir
    hostPath:
      path: <dataDirHostPath>
  containers:
  - name: disk-clean
    image: busybox
    securityContext:
      privileged: true
    volumeMounts:
    - name: rook-data-dir
      mountPath: /node/rook-data
    command: ["/bin/sh", "-c", "rm -rf /node/rook-data/*"]
EOF

pod/disk-clean created

$ kubectl wait --timeout=900s --for=jsonpath='{.status.phase}=Succeeded' pod disk-clean

pod/disk-clean condition met

$ kubectl delete pod disk-clean

pod "disk-clean" deleted

Lastly, the disks themselves need the partition and filesystem data wiped before they can be reused.

Again, the following has to be repeated for each node and disk used in the Rook Ceph cluster, updating nodeName and of= in the command as needed.

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: disk-wipe
spec:
  restartPolicy: Never
  nodeName: <storage-node-name>
  containers:
  - name: disk-wipe
    image: busybox
    securityContext:
      privileged: true
    command: ["/bin/sh", "-c", "dd if=/dev/zero bs=1M count=100 oflag=direct of=<device>"]
EOF

pod/disk-wipe created

$ kubectl wait --timeout=900s --for=jsonpath='{.status.phase}=Succeeded' pod disk-wipe

pod/disk-wipe condition met

$ kubectl delete pod disk-wipe

pod "disk-wipe" deleted
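When several nodes and disks need wiping, the manifest can be rendered once per node/device pair instead of being edited by hand. This is a sketch under stated assumptions: the node names and devices are placeholders, and the numeric suffix on the pod name is a hypothetical addition so that the generated pods do not collide.

```shell
#!/bin/sh
# Sketch: render the disk-wipe Pod manifest for one node/device pair.
# The pod name suffix is a hypothetical addition so manifests do not collide.
wipe_pod_manifest() {
  node="$1"; device="$2"; suffix="$3"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: disk-wipe-${suffix}
spec:
  restartPolicy: Never
  nodeName: ${node}
  containers:
  - name: disk-wipe
    image: busybox
    securityContext:
      privileged: true
    command: ["/bin/sh", "-c", "dd if=/dev/zero bs=1M count=100 oflag=direct of=${device}"]
EOF
}

# Placeholder node names and devices; print one manifest per pair.
n=0
while read -r node device; do
  n=$((n + 1))
  echo "---"
  wipe_pod_manifest "$node" "$device" "$n"
done <<PAIRS
worker-1 /dev/sdb
worker-2 /dev/sdb
PAIRS
```

The script only prints YAML. After reviewing the node and device values, the output could be piped to kubectl apply -f -, and each generated pod would then need the same wait and delete steps as above.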

5 - Configuring Certificate Authorities

Appending the Certificate Authority

Add the PEM-encoded certificate to each machine's configuration:

machine:
  ...
  files:
    - content: |
        -----BEGIN CERTIFICATE-----
        ...
        -----END CERTIFICATE-----
      permissions: 0644
      path: /etc/ssl/certs/ca-certificates
      op: append

6 - Configuring Containerd

The base containerd configuration expects to merge in any additional configs present in /var/cri/conf.d/*.toml.

An example of exposing metrics

Into each machine config, add the following:

machine:
  ...
  files:
    - content: |
        [metrics]
          address = "0.0.0.0:11234"
      path: /var/cri/conf.d/metrics.toml
      op: create

Create the cluster as usual and verify that metrics are now served on this port:

$ curl 127.0.0.1:11234/v1/metrics

# HELP container_blkio_io_service_bytes_recursive_bytes The blkio io service bytes recursive

# TYPE container_blkio_io_service_bytes_recursive_bytes gauge

container_blkio_io_service_bytes_recursive_bytes{container_id="0677d73196f5f4be1d408aab1c4125cf9e6c458a4bea39e590ac779709ffbe14",device="/dev/dm-0",major="253",minor="0",namespace="k8s.io",op="Async"} 0

container_blkio_io_service_bytes_recursive_bytes{container_id="0677d73196f5f4be1d408aab1c4125cf9e6c458a4bea39e590ac779709ffbe14",device="/dev/dm-0",major="253",minor="0",namespace="k8s.io",op="Discard"} 0

...

...

7 - Configuring Corporate Proxies

Appending the Certificate Authority of MITM Proxies

Add the PEM-encoded certificate to each machine's configuration:

machine:
  ...
  files:
    - content: |
        -----BEGIN CERTIFICATE-----
        ...
        -----END CERTIFICATE-----
      permissions: 0644
      path: /etc/ssl/certs/ca-certificates
      op: append

Configuring a Machine to Use the Proxy

To make use of a proxy:

machine:
  env:
    http_proxy: <http proxy>
    https_proxy: <https proxy>
    no_proxy: <no proxy>

Additionally, configure the DNS nameservers and NTP servers:

machine:
  env:
  ...
  time:
    servers:
      - <server 1>
      - <server ...>
      - <server n>
  ...
  network:
    nameservers:
      - <ip 1>
      - <ip ...>
      - <ip n>

8 - Configuring Network Connectivity


The simplest way to deploy Talos is by ensuring that all the remote components of the system (talosctl, the control plane nodes, and worker nodes) have layer 2 connectivity.

This is not always possible, however, so this page lays out the minimal network access that is required to configure and operate a Talos cluster.

Note: These are the ports required for Talos specifically, and should be configured in addition to the ports required by Kubernetes. See the Kubernetes docs for information on the ports used by Kubernetes itself.

Control plane node(s)

| Protocol | Direction | Port Range | Purpose | Used By |

|---|---|---|---|---|

| TCP | Inbound | 50000* | apid | talosctl |

| TCP | Inbound | 50001* | trustd | Control plane nodes, worker nodes |

Ports marked with a * are not currently configurable, but that may change in the future. Follow along here.

Worker node(s)

| Protocol | Direction | Port Range | Purpose | Used By |

|---|---|---|---|---|

| TCP | Inbound | 50001* | trustd | Control plane nodes |

Ports marked with a * are not currently configurable, but that may change in the future. Follow along here.

9 - Configuring Pull Through Cache

In this guide we will create a set of local caching Docker registry proxies to minimize local cluster startup time.

When running Talos locally, pulling images from Docker registries might take a significant amount of time. We spin up local caching pass-through registries to cache images and configure a local Talos cluster to use those proxies. A similar approach might be used to run Talos in production in air-gapped environments. It can also be used to verify that all the images are available in local registries.


Requirements

The following are requirements for creating the set of caching proxies:

Launch the Caching Docker Registry Proxies

Talos pulls from docker.io, k8s.gcr.io, quay.io, gcr.io, and ghcr.io by default.

If your configuration is different, you might need to modify the commands below:

docker run -d -p 5000:5000 \

-e REGISTRY_PROXY_REMOTEURL=https://registry-1.docker.io \

--restart always \

--name registry-docker.io registry:2

docker run -d -p 5001:5000 \

-e REGISTRY_PROXY_REMOTEURL=https://k8s.gcr.io \

--restart always \

--name registry-k8s.gcr.io registry:2

docker run -d -p 5002:5000 \

-e REGISTRY_PROXY_REMOTEURL=https://quay.io \

--restart always \

--name registry-quay.io registry:2.5

docker run -d -p 5003:5000 \

-e REGISTRY_PROXY_REMOTEURL=https://gcr.io \

--restart always \

--name registry-gcr.io registry:2

docker run -d -p 5004:5000 \

-e REGISTRY_PROXY_REMOTEURL=https://ghcr.io \

--restart always \

--name registry-ghcr.io registry:2

Note: Proxies are started as docker containers, and they’re automatically configured to start with the Docker daemon. Please note that the quay.io proxy doesn’t support the recent Docker image schema, so we run an older registry image version (2.5).

As a registry container can only handle a single upstream Docker registry, we launch a container per upstream, each on its own host port (5000, 5001, 5002, 5003 and 5004).

Using Caching Registries with QEMU Local Cluster

With a QEMU local cluster, a bridge interface is created on the host. As registry containers expose their ports on the host, we can use the bridge IP to direct proxy requests.

sudo talosctl cluster create --provisioner qemu \

--registry-mirror docker.io=http://10.5.0.1:5000 \

--registry-mirror k8s.gcr.io=http://10.5.0.1:5001 \

--registry-mirror quay.io=http://10.5.0.1:5002 \

--registry-mirror gcr.io=http://10.5.0.1:5003 \

--registry-mirror ghcr.io=http://10.5.0.1:5004

The Talos local cluster should now start pulling via caching registries.

This can be verified via registry logs, e.g. docker logs -f registry-docker.io.

The first time the cluster boots, images are pulled and cached, so the next cluster boot should be much faster.

Note: 10.5.0.1 is the bridge IP with the default network (10.5.0.0/24); if using a custom --cidr, the value should be adjusted accordingly.

Using Caching Registries with docker Local Cluster

With a docker local cluster we can use the docker bridge IP; the default value for that IP is 172.17.0.1.

On Linux, the docker bridge address can be inspected with ip addr show docker0.

talosctl cluster create --provisioner docker \

--registry-mirror docker.io=http://172.17.0.1:5000 \

--registry-mirror k8s.gcr.io=http://172.17.0.1:5001 \

--registry-mirror quay.io=http://172.17.0.1:5002 \

--registry-mirror gcr.io=http://172.17.0.1:5003 \

--registry-mirror ghcr.io=http://172.17.0.1:5004
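The port assignments in the docker run commands and in the --registry-mirror flags have to stay in step. As a minimal sketch, the pairing can be derived from a single list of upstreams; the names UPSTREAMS, MIRROR_HOST and proxy_plan are illustrative assumptions, and the script only prints the commands and flags without running anything.

```shell
#!/bin/sh
# Sketch: derive one host port per upstream registry, starting at 5000,
# and print the matching `docker run` command and --registry-mirror flag.
# MIRROR_HOST is the address the cluster nodes use to reach the host.
MIRROR_HOST="10.5.0.1"

# Each line: <registry name> <upstream URL> <registry image tag>
UPSTREAMS="docker.io https://registry-1.docker.io 2
k8s.gcr.io https://k8s.gcr.io 2
quay.io https://quay.io 2.5
gcr.io https://gcr.io 2
ghcr.io https://ghcr.io 2"

proxy_plan() {
  port=5000
  printf '%s\n' "$UPSTREAMS" | while read -r name url tag; do
    echo "docker run -d -p ${port}:5000 -e REGISTRY_PROXY_REMOTEURL=${url} --restart always --name registry-${name} registry:${tag}"
    echo "--registry-mirror ${name}=http://${MIRROR_HOST}:${port}"
    port=$((port + 1))
  done
}

proxy_plan
```

For the Docker-based local cluster, MIRROR_HOST would be set to the docker bridge IP (172.17.0.1 by default) instead.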

Cleaning Up

To clean up, run:

docker rm -f registry-docker.io

docker rm -f registry-k8s.gcr.io

docker rm -f registry-quay.io

docker rm -f registry-gcr.io

docker rm -f registry-ghcr.io

Note: Removing docker registry containers also removes the image cache. So if you plan to use caching registries, keep the containers running.

10 - Configuring the Cluster Endpoint

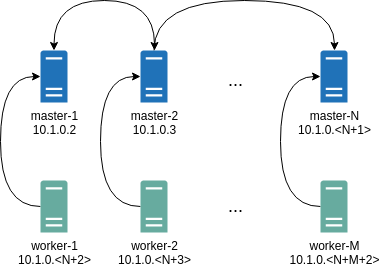

In this section, we will step through the configuration of a Talos based Kubernetes cluster. There are three major components we will configure:

- apid and talosctl

- the master nodes

- the worker nodes

Talos enforces a high level of security by using mutual TLS for authentication and authorization.

We recommend that the configuration of Talos be performed by a cluster owner. A cluster owner should be a person of authority within an organization, perhaps a director, manager, or senior member of a team. They are responsible for storing the root CA, and distributing the PKI for authorized cluster administrators.

Recommended settings

Talos runs great out of the box, but if you tweak some minor settings it will make your life a lot easier in the future. This is not a requirement, but rather a document to explain some key settings.

Endpoint

To configure the talosctl endpoint, it is recommended you use a resolvable DNS name.

This way, if you decide to upgrade to a multi-controlplane cluster you only have to add the IP address to the hostname configuration.

The configuration can either be done on a load balancer, or simply through DNS.

For example:

This is in the config files for the cluster, e.g. controlplane.yaml and worker.yaml. For more details, please see: v1alpha1 endpoint configuration

.....
cluster:
  controlPlane:
    endpoint: https://endpoint.example.local:6443
.....

If you have a DNS name as the endpoint, you can upgrade your Talos cluster to multiple controlplanes in the future (if you don’t have a multi-controlplane setup from the start). Using a DNS name generates the corresponding certificates (Kubernetes and Talos) for the correct hostname.

11 - Configuring Wireguard Network

Configuring Wireguard Network

Quick Start

The quickest way to try out Wireguard is to use the talosctl cluster create command:

talosctl cluster create --wireguard-cidr 10.1.0.0/24

It will automatically generate Wireguard network configuration for each node with the following network topology:

- All controlplane nodes are used as Wireguard servers which listen on port 51111.

- All controlplanes and workers connect to all controlplanes.

- PersistentKeepalive is set to 5 seconds to establish the controlplane-to-worker connections.

After the cluster is deployed it should be possible to verify Wireguard network connectivity.

It is possible to deploy a container with hostNetwork enabled, then run kubectl exec -it <pod> -- /bin/bash and either do:

ping 10.1.0.2

Or install wireguard-tools package and run:

wg show

wg show should output something like this:

interface: wg0

public key: OMhgEvNIaEN7zeCLijRh4c+0Hwh3erjknzdyvVlrkGM=

private key: (hidden)

listening port: 47946

peer: 1EsxUygZo8/URWs18tqB5FW2cLVlaTA+lUisKIf8nh4=

endpoint: 10.5.0.2:51111

allowed ips: 10.1.0.0/24

latest handshake: 1 minute, 55 seconds ago

transfer: 3.17 KiB received, 3.55 KiB sent

persistent keepalive: every 5 seconds

It is also possible to use generated configuration as a reference by pulling generated config files using:

talosctl read -n 10.5.0.2 /system/state/config.yaml > controlplane.yaml

talosctl read -n 10.5.0.3 /system/state/config.yaml > worker.yaml

Manual Configuration

All Wireguard configuration can be done by changing Talos machine config files. As an example we will use this official Wireguard quick start tutorial.

Key Generation

This part is exactly the same:

wg genkey | tee privatekey | wg pubkey > publickey

Setting up Device

Inline comments show relations between configs and wg quickstart tutorial commands:

...
network:
  interfaces:
    ...
    # ip link add dev wg0 type wireguard
    - interface: wg0
      mtu: 1500
      # ip address add dev wg0 192.168.2.1/24
      addresses:
        - 192.168.2.1/24
      # wg set wg0 listen-port 51820 private-key /path/to/private-key peer ABCDEF... allowed-ips 192.168.88.0/24 endpoint 209.202.254.14:8172
      wireguard:
        privateKey: <privatekey file contents>
        listenPort: 51820
        peers:
          - allowedIPs:
              - 192.168.88.0/24
            endpoint: 209.202.254.14:8172
            publicKey: ABCDEF...
...

When networkd gets this configuration, it will create the device, configure it, and bring it up (equivalent to ip link set up dev wg0).

All supported config parameters are described in the Machine Config Reference.
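A typo in a peer endpoint is easy to miss, so it can help to sanity-check peer settings before pasting them into the machine config. The sketch below is a hypothetical helper, not Talos code: `split_endpoint` and `wireguard_peer` are names invented here, and only the keys `publicKey`, `endpoint` and `allowedIPs` come from the example above.

```python
import ipaddress
from typing import Dict, List, Tuple


def split_endpoint(endpoint: str) -> Tuple[str, int]:
    """Split 'host:port' and reject values that lack a valid port."""
    host, sep, port = endpoint.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected host:port, got {endpoint!r}")
    number = int(port)
    if not 1 <= number <= 65535:
        raise ValueError(f"port out of range in {endpoint!r}")
    return host, number


def wireguard_peer(public_key: str, endpoint: str,
                   allowed_ips: List[str]) -> Dict[str, object]:
    """Build one entry for the peers list after validating its fields."""
    split_endpoint(endpoint)
    for cidr in allowed_ips:
        # Raises ValueError when the entry is not a valid CIDR.
        ipaddress.ip_network(cidr, strict=False)
    return {
        "publicKey": public_key,
        "endpoint": endpoint,
        "allowedIPs": list(allowed_ips),
    }
```

A value such as `209.202.254.14.8172` (a dot where the colon should be) is rejected with a `ValueError` instead of silently ending up in the configuration.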

12 - Customizing the Kernel

The installer image contains ONBUILD instructions that handle the following:

- the decompression and unpacking of the initramfs.xz

- the unsquashing of the rootfs

- the copying of new rootfs files

- the squashing of the new rootfs

- and the packing and compression of the new initramfs.xz

When used as a base image, the installer will perform the above steps automatically with the requirement that a customization stage be defined in the Dockerfile.

Build and push your own kernel:

git clone https://github.com/talos-systems/pkgs.git

cd pkgs

make kernel-menuconfig USERNAME=_your_github_user_name_

docker login ghcr.io --username _your_github_user_name_

make kernel USERNAME=_your_github_user_name_ PUSH=true

Using a multi-stage Dockerfile we can define the customization stage and build FROM the installer image:

FROM scratch AS customization

COPY --from=<custom kernel image> /lib/modules /lib/modules

FROM ghcr.io/talos-systems/installer:latest

COPY --from=<custom kernel image> /boot/vmlinuz /usr/install/${TARGETARCH}/vmlinuz

When building the image, the customization stage will automatically be copied into the rootfs.

The customization stage is not limited to a single COPY instruction.

In fact, you can do whatever you would like in this stage, but keep in mind that everything in / will be copied into the rootfs.

To build the image, run:

DOCKER_BUILDKIT=0 docker build --build-arg RM="/lib/modules" -t installer:kernel .

Note: buildkit has a bug #816; to disable buildkit, use DOCKER_BUILDKIT=0

Now that we have a custom installer we can build Talos for the specific platform we wish to deploy to.

13 - Customizing the Root Filesystem

The installer image contains ONBUILD instructions that handle the following:

- the decompression and unpacking of the initramfs.xz

- the unsquashing of the rootfs

- the copying of new rootfs files

- the squashing of the new rootfs

- and the packing and compression of the new initramfs.xz

When used as a base image, the installer will perform the above steps automatically with the requirement that a customization stage be defined in the Dockerfile.

For example, say we have an image that contains the contents of a library we wish to add to the Talos rootfs.

We need to define a stage with the name customization:

FROM scratch AS customization

COPY --from=<name|index> <src> <dest>

Using a multi-stage Dockerfile we can define the customization stage and build FROM the installer image:

FROM scratch AS customization

COPY --from=<name|index> <src> <dest>

FROM ghcr.io/talos-systems/installer:latest

When building the image, the customization stage will automatically be copied into the rootfs.

The customization stage is not limited to a single COPY instruction.

In fact, you can do whatever you would like in this stage, but keep in mind that everything in / will be copied into the rootfs.

Note: <dest> is the path relative to the rootfs that you wish to place the contents of <src>.

To build the image, run:

docker build --squash -t <organization>/installer:latest .

In the case that you need to perform some cleanup before adding additional files to the rootfs, you can specify the RM build-time variable:

docker build --squash --build-arg RM="[<path> ...]" -t <organization>/installer:latest .

This will perform an rm -rf on the specified paths relative to the rootfs.

Note: RM must be a whitespace delimited list.

The resulting image can be used to:

- generate an image for any of the supported providers

- perform bare-metal installs

- perform upgrades

We will step through common customizations in the remainder of this section.

14 - Deploying Cilium CNI

From v1.9 onwards, Cilium no longer provides a one-liner install manifest that can be used to install Cilium on a node via kubectl apply -f or by passing it in as an extra URL in the urls part of the Talos machine configuration.

Installing Cilium the new way via the cilium CLI is broken, so we’ll be using helm to install Cilium. For more information: Install with CLI fails, works with Helm

Refer to Installing with Helm for more information.

First we’ll need to add the helm repo for Cilium.

helm repo add cilium https://helm.cilium.io/

helm repo update

This documentation will outline installing Cilium CNI v1.11.2 on Talos in four different ways. Adhering to Talos principles we’ll deploy Cilium with IPAM mode set to Kubernetes. Each method can either install Cilium using kube proxy (default) or without: Kubernetes Without kube-proxy

Machine config preparation

When generating the machine config for a node set the CNI to none. For example using a config patch:

talosctl gen config \

my-cluster https://mycluster.local:6443 \

--config-patch '[{"op":"add", "path": "/cluster/network/cni", "value": {"name": "none"}}]'

Or if you want to deploy Cilium in strict mode without kube-proxy, you also need to disable kube proxy:

talosctl gen config \

my-cluster https://mycluster.local:6443 \

--config-patch '[{"op": "add", "path": "/cluster/proxy", "value": {"disabled": true}}, {"op":"add", "path": "/cluster/network/cni", "value": {"name": "none"}}]'
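Hand-writing the JSON patch inside shell quotes is easy to get wrong, so one option is to generate it. The sketch below only assembles the same command lines shown above; `cni_patch` and `gen_config_command` are hypothetical helper names, and the cluster name and endpoint are the placeholder values from this guide.

```python
import json
import shlex
from typing import Dict, List


def cni_patch(disable_kube_proxy: bool = False) -> List[Dict[str, object]]:
    """Return the JSON patch that sets the CNI to none, optionally disabling kube-proxy."""
    ops: List[Dict[str, object]] = []
    if disable_kube_proxy:
        ops.append({"op": "add", "path": "/cluster/proxy",
                    "value": {"disabled": True}})
    ops.append({"op": "add", "path": "/cluster/network/cni",
                "value": {"name": "none"}})
    return ops


def gen_config_command(cluster: str, endpoint: str,
                       patch: List[Dict[str, object]]) -> str:
    """Render a shell-safe talosctl gen config command line."""
    args = ["talosctl", "gen", "config", cluster, endpoint,
            "--config-patch", json.dumps(patch)]
    return shlex.join(args)
```

Printing `gen_config_command("my-cluster", "https://mycluster.local:6443", cni_patch(True))` yields a single line that can be pasted into a shell, with the patch correctly quoted.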

Method 1: Helm install

After applying the machine config and bootstrapping, Talos will appear to hang on phase 18/19 with the message: retrying error: node not ready. This happens because nodes in Kubernetes are only marked as ready once the CNI is up. As there is no CNI defined, the boot process stays pending and the node reboots to retry after 10 minutes. This is expected behavior.

During this window you can install Cilium manually by running the following:

helm install cilium cilium/cilium \

--version 1.11.2 \

--namespace kube-system \

--set ipam.mode=kubernetes

Or if you want to deploy Cilium in strict mode without kube-proxy, also set some extra parameters:

export KUBERNETES_API_SERVER_ADDRESS=<>

export KUBERNETES_API_SERVER_PORT=6443

helm install cilium cilium/cilium \

--version 1.11.2 \

--namespace kube-system \

--set ipam.mode=kubernetes \

--set kubeProxyReplacement=strict \

--set k8sServiceHost="${KUBERNETES_API_SERVER_ADDRESS}" \

--set k8sServicePort="${KUBERNETES_API_SERVER_PORT}"

After Cilium is installed the boot process should continue and complete successfully.

Method 2: Helm manifests install

Instead of directly installing Cilium, you can first generate the manifest and then apply it:

helm template cilium cilium/cilium \

--version 1.11.2 \

--namespace kube-system \

--set ipam.mode=kubernetes > cilium.yaml

kubectl apply -f cilium.yaml

Without kube-proxy:

export KUBERNETES_API_SERVER_ADDRESS=<>

export KUBERNETES_API_SERVER_PORT=6443

helm template cilium cilium/cilium \

--version 1.11.2 \

--namespace kube-system \

--set ipam.mode=kubernetes \

--set kubeProxyReplacement=strict \

--set k8sServiceHost="${KUBERNETES_API_SERVER_ADDRESS}" \

--set k8sServicePort="${KUBERNETES_API_SERVER_PORT}" > cilium.yaml

kubectl apply -f cilium.yaml

Method 3: Helm manifests hosted install

After generating cilium.yaml using helm template, instead of applying this manifest directly during the Talos boot window (before the reboot timeout), you can also host this file somewhere and patch the machine config to apply this manifest automatically during bootstrap.

To do this, patch your machine configuration to include this config instead of the above:

talosctl gen config \

my-cluster https://mycluster.local:6443 \

--config-patch '[{"op":"add", "path": "/cluster/network/cni", "value": {"name": "custom", "urls": ["https://server.yourdomain.tld/some/path/cilium.yaml"]}}]'

Resulting in a config that looks like this:

name: custom # Name of CNI to use.
# URLs containing manifests to apply for the CNI.
urls:
  - https://server.yourdomain.tld/some/path/cilium.yaml

However, beware of the fact that the helm generated Cilium manifest contains sensitive key material. As such you should definitely not host this somewhere publicly accessible.

Method 4: Helm manifests inline install

A more secure option would be to include the helm template output manifest inside the machine configuration.

The machine config should be generated with CNI set to none:

talosctl gen config \

my-cluster https://mycluster.local:6443 \

--config-patch '[{"op":"add", "path": "/cluster/network/cni", "value": {"name": "none"}}]'

If deploying Cilium with kube-proxy disabled, you can also include the following:

talosctl gen config \

my-cluster https://mycluster.local:6443 \

--config-patch '[{"op": "add", "path": "/cluster/proxy", "value": {"disabled": true}}, {"op":"add", "path": "/cluster/network/cni", "value": {"name": "none"}}]'

To include the manifest, patch this into your machine configuration:

inlineManifests:
  - name: cilium
    contents: |
      ---
      # Source: cilium/templates/cilium-agent/serviceaccount.yaml
      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: "cilium"
        namespace: kube-system
      ---
      # Source: cilium/templates/cilium-operator/serviceaccount.yaml
      apiVersion: v1
      kind: ServiceAccount
      -> Your cilium.yaml file will be pretty long....

This will install the Cilium manifests at just the right time during bootstrap.

Beware though:

- Changing the namespace when templating with Helm does not generate a manifest containing the yaml to create that namespace. As the inline manifest is processed from top to bottom make sure to manually put the namespace yaml at the start of the inline manifest.

- Only add the Cilium inline manifest to the control plane nodes machine configuration.

- Make sure all control plane nodes have an identical configuration.

- If you delete any of the generated resources they will be restored whenever a control plane node reboots.

- As a safety measure Talos only creates missing resources from inline manifests, it never deletes or updates anything.

- If you need to update a manifest, make sure to first edit all control plane machine configurations and then run talosctl upgrade-k8s, as it will take care of updating inline manifests.
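Because cilium.yaml tends to be long, indenting it by hand into the machine configuration is error-prone. The following sketch renders an inlineManifests entry from the text of a manifest and can optionally place a Namespace document first, as the first caveat in the list recommends. The function name `inline_manifest`, the six-space content indentation and the Namespace template are assumptions made for this illustration.

```python
from typing import Optional

# Minimal Namespace document, prepended only when a namespace is requested.
NAMESPACE_TEMPLATE = "apiVersion: v1\nkind: Namespace\nmetadata:\n  name: {name}\n"


def inline_manifest(name: str, manifest: str,
                    namespace: Optional[str] = None) -> str:
    """Render an inlineManifests entry whose contents are the given manifest."""
    docs = manifest.strip("\n")
    if not docs.startswith("---"):
        docs = "---\n" + docs
    if namespace is not None:
        # Inline manifests are processed top to bottom, so the Namespace goes first.
        docs = "---\n" + NAMESPACE_TEMPLATE.format(name=namespace) + docs
    # Indent every non-empty line so it sits inside the YAML block scalar.
    body = "\n".join(("      " + line) if line else "" for line in docs.split("\n"))
    return f"inlineManifests:\n  - name: {name}\n    contents: |\n{body}\n"
```

A typical use would be to read the file produced by helm template, call `inline_manifest("cilium", text)`, and merge the result into the control plane machine configuration.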

Known issues

- Currently there is an interaction between a Kubespan enabled Talos cluster and Cilium that results in the cluster going down during bootstrap after applying the Cilium manifests. For more details: Kubespan and Cilium compatiblity: etcd is failing

- When running Cilium with a kube-proxy eBPF replacement (strict mode) there is a conflicting kernel module that results in locked tx queues. This can be fixed by blacklisting aoe_init with extraKernelArgs. For more details: Cilium on talos “aoe: packet could not be sent on *. consider increasing tx_queue_len”

- There are some gotchas when using Talos and Cilium on the Google cloud platform when using internal load balancers. For more details: GCP ILB support / support scope local routes to be configured

- Some kernel values changed by kube-proxy are not set to good defaults when running the cilium kernel-proxy alternative. For more details: Kernel default values (sysctl)

Other things to know

Talos has full kernel module support for eBPF, See:

Talos also includes the modules:

- CONFIG_NETFILTER_XT_TARGET_TPROXY=m

- CONFIG_NETFILTER_XT_TARGET_CT=m

- CONFIG_NETFILTER_XT_MATCH_MARK=m

- CONFIG_NETFILTER_XT_MATCH_SOCKET=m

This allows you to set --set enableXTSocketFallback=false on the helm install/template command, preventing Cilium from disabling the ip_early_demux kernel feature. This will win back some performance.

15 - Deploying Metrics Server

Metrics Server enables use of the Horizontal Pod Autoscaler and Vertical Pod Autoscaler. It does this by gathering metrics data from the kubelets in a cluster. By default, the certificates in use by the kubelets will not be recognized by metrics-server. This can be solved by either configuring metrics-server to do no validation of the TLS certificates, or by modifying the kubelet configuration to rotate its certificates and use ones that will be recognized by metrics-server.

Node Configuration

To enable kubelet certificate rotation, all nodes should have the following Machine Config snippet:

machine:
  kubelet:
    extraArgs:
      rotate-server-certificates: true

Install During Bootstrap

We will want to ensure that new certificates for the kubelets are approved automatically. This can easily be done with the Kubelet Serving Certificate Approver, which will automatically approve the Certificate Signing Requests generated by the kubelets.

We can have Kubelet Serving Certificate Approver and metrics-server installed on the cluster automatically during bootstrap by adding the following snippet to the Cluster Config of the node that will be handling the bootstrap process:

cluster:
  extraManifests:
    - https://raw.githubusercontent.com/alex1989hu/kubelet-serving-cert-approver/main/deploy/standalone-install.yaml
    - https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Install After Bootstrap

If you choose not to use extraManifests to install Kubelet Serving Certificate Approver and metrics-server during bootstrap, you can install them once the cluster is online using kubectl:

kubectl apply -f https://raw.githubusercontent.com/alex1989hu/kubelet-serving-cert-approver/main/deploy/standalone-install.yaml

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

16 - Disaster Recovery

The etcd database backs the Kubernetes control plane state, so if the etcd service is unavailable, the Kubernetes control plane goes down, and the cluster is not recoverable until etcd is recovered with its contents.

The etcd consistency model builds around the consensus protocol Raft, so for highly-available control plane clusters, the loss of one control plane node doesn’t impact cluster health.

In general, etcd stays up as long as a sufficient number of nodes to maintain quorum are up.

For a three control plane node Talos cluster, this means that the cluster tolerates a failure of any single node, but losing more than one node at the same time leads to complete loss of service.

Because of that, it is important to take routine backups of etcd state to have a snapshot to recover the cluster from in case of catastrophic failure.
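The quorum arithmetic behind these statements is simple majority voting, as used by Raft. A minimal sketch (the function names are chosen for this example only):

```python
def quorum(members: int) -> int:
    """Smallest number of etcd members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority is still available."""
    return members - quorum(members)
```

With three control plane nodes, `quorum(3)` is 2 and `tolerated_failures(3)` is 1, which matches the single-node tolerance described above. A four-node control plane still tolerates only one failure, and a single-node control plane tolerates none.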

Backup

Snapshotting etcd Database

Create a consistent snapshot of the etcd database with the talosctl etcd snapshot command:

$ talosctl -n <IP> etcd snapshot db.snapshot

etcd snapshot saved to "db.snapshot" (2015264 bytes)

snapshot info: hash c25fd181, revision 4193, total keys 1287, total size 3035136

Note: the filename db.snapshot is arbitrary.

This database snapshot can be taken on any healthy control plane node (with IP address <IP> in the example above), as all etcd instances contain exactly the same data.

It is recommended to configure etcd snapshots to be created on some schedule to allow point-in-time recovery using the latest snapshot.
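One way to do this is a small script run from cron or a similar scheduler. The sketch below wraps the talosctl etcd snapshot command shown above; the file naming scheme, the retention policy and the function names are assumptions made for this example, not Talos features.

```python
import subprocess
from datetime import datetime, timezone
from pathlib import Path
from typing import List


def snapshot_name(now: datetime) -> str:
    """Timestamped file name; such names sort chronologically."""
    return now.strftime("etcd-%Y%m%dT%H%M%SZ.snapshot")


def take_snapshot(node: str, directory: Path) -> Path:
    """Run talosctl against one healthy control plane node and return the file path."""
    target = directory / snapshot_name(datetime.now(timezone.utc))
    subprocess.run(
        ["talosctl", "-n", node, "etcd", "snapshot", str(target)],
        check=True,  # raise if talosctl reports a failure
    )
    return target


def prune(directory: Path, keep: int) -> List[Path]:
    """Delete all but the newest `keep` snapshots and return the removed paths."""
    snapshots = sorted(directory.glob("etcd-*.snapshot"))
    stale = snapshots[:-keep] if keep > 0 else snapshots
    for path in stale:
        path.unlink()
    return stale
```

A scheduled job could call `take_snapshot("<IP>", Path("/backups"))` followed by `prune(Path("/backups"), keep=7)`, where the directory and the retention count are placeholders to adapt.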

Disaster Database Snapshot

If the etcd cluster is not healthy, the talosctl etcd snapshot command might fail.

In that case, copy the database snapshot directly from the control plane node:

talosctl -n <IP> cp /var/lib/etcd/member/snap/db .

This snapshot might not be fully consistent (if the etcd process is running), but it allows for disaster recovery when the latest regular snapshot is not available.

Machine Configuration

Machine configuration might be required to recover the node after hardware failure. Back up the Talos node machine configuration with the command:

talosctl -n <IP> get mc v1alpha1 -o yaml | yq eval '.spec' -

Recovery

Before starting a disaster recovery procedure, make sure that the etcd cluster can’t be recovered:

- get the etcd cluster member list on all healthy control plane nodes with the talosctl -n <IP> etcd members command and compare across all members.

- query etcd health across control plane nodes with talosctl -n <IP> service etcd.

If the quorum can be restored, restoring quorum might be a better strategy than performing full disaster recovery procedure.

Latest Etcd Snapshot

Get hold of the latest etcd database snapshot.

If a snapshot is not fresh enough, create a database snapshot (see above), even if the etcd cluster is unhealthy.

Init Node

Make sure that there are no control plane nodes with machine type init:

$ talosctl -n <IP1>,<IP2>,... get machinetype

NODE NAMESPACE TYPE ID VERSION TYPE

172.20.0.2 config MachineType machine-type 2 controlplane

172.20.0.4 config MachineType machine-type 2 controlplane

172.20.0.3 config MachineType machine-type 2 controlplane

Nodes with init type are incompatible with the etcd recovery procedure.

An init node can be converted to the controlplane type with the talosctl edit mc --on-reboot command, followed by a node reboot with the talosctl reboot command.

Preparing Control Plane Nodes

If some control plane nodes experienced hardware failure, replace them with new nodes. Use the machine configuration backup to re-create the nodes with the same secret material and control plane settings to allow workers to join the recovered control plane.

If a control plane node is healthy but etcd isn’t, wipe the node’s EPHEMERAL partition to remove the etcd data directory (make sure a database snapshot is taken before doing this):

talosctl -n <IP> reset --graceful=false --reboot --system-labels-to-wipe=EPHEMERAL

At this point, all control plane nodes should boot up, and the etcd service should be in the Preparing state.

The Kubernetes control plane endpoint should be pointed to the new control plane nodes if there were any changes to the node addresses.

Recovering from the Backup

Make sure all etcd service instances are in the Preparing state:

$ talosctl -n <IP> service etcd

NODE 172.20.0.2

ID etcd

STATE Preparing

HEALTH ?

EVENTS [Preparing]: Running pre state (17s ago)

[Waiting]: Waiting for service "cri" to be "up", time sync (18s ago)

[Waiting]: Waiting for service "cri" to be "up", service "networkd" to be "up", time sync (20s ago)

Execute the bootstrap command against any control plane node passing the path to the etcd database snapshot:

$ talosctl -n <IP> bootstrap --recover-from=./db.snapshot

recovering from snapshot "./db.snapshot": hash c25fd181, revision 4193, total keys 1287, total size 3035136

Note: if the database snapshot was copied out directly from the etcd data directory using talosctl cp, add the flag --recover-skip-hash-check to skip the integrity check on restore.

Talos node should print matching information in the kernel log:

recovering etcd from snapshot: hash c25fd181, revision 4193, total keys 1287, total size 3035136

{"level":"info","msg":"restoring snapshot","path":"/var/lib/etcd.snapshot","wal-dir":"/var/lib/etcd/member/wal","data-dir":"/var/lib/etcd","snap-dir":"/var/li}

{"level":"info","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":3360}

{"level":"info","msg":"added member","cluster-id":"a3390e43eb5274e2","local-member-id":"0","added-peer-id":"eb4f6f534361855e","added-peer-peer-urls":["https:/}

{"level":"info","msg":"restored snapshot","path":"/var/lib/etcd.snapshot","wal-dir":"/var/lib/etcd/member/wal","data-dir":"/var/lib/etcd","snap-dir":"/var/lib/etcd/member/snap"}

Now the etcd service should become healthy on the bootstrap node, the Kubernetes control plane components should start, and the control plane endpoint should become available.

The remaining control plane nodes join the etcd cluster once the control plane endpoint is up.

Single Control Plane Node Cluster

This guide applies to single control plane clusters as well.

In fact, it is much more important to take regular snapshots of the etcd database in the single control plane node case, as loss of the control plane node might render the whole cluster irrecoverable without a backup.

17 - Discovery

Registries

Peers are aggregated from a number of optional registries.

By default, Talos will use the kubernetes and service registries.

Either one can be disabled.

To disable a registry, set disabled to true (this option is the same for all registries). For example, to disable the service registry:

cluster:
  discovery:
    enabled: true
    registries:
      service:
        disabled: true

Disabling all registries effectively disables member discovery altogether.

As of v0.14, Talos supports the kubernetes and service registries.

The Kubernetes registry uses Kubernetes Node resource data and additional Talos annotations:

$ kubectl describe node <nodename>

Annotations: cluster.talos.dev/node-id: Utoh3O0ZneV0kT2IUBrh7TgdouRcUW2yzaaMl4VXnCd

networking.talos.dev/assigned-prefixes: 10.244.0.0/32,10.244.0.1/24

networking.talos.dev/self-ips: 172.20.0.2,fd83:b1f7:fcb5:2802:8c13:71ff:feaf:7c94

...

The Service registry uses an external Discovery Service to exchange encrypted information about cluster members.

Resource Definitions

Talos v0.14 introduces seven new resources that can be used to introspect the new discovery and KubeSpan features.

Discovery

Identities

The node’s unique identity (base62 encoded random 32 bytes) can be obtained with:

Note: Using base62 allows the ID to be URL encoded without having to use the ambiguous URL-encoding version of base64.

$ talosctl get identities -o yaml

...

spec:

nodeId: Utoh3O0ZneV0kT2IUBrh7TgdouRcUW2yzaaMl4VXnCd

Node identity is used as the unique Affiliate identifier.

The node identity resource is preserved in the STATE partition in the node-identity.yaml file.

Node identity is preserved across reboots and upgrades, but it is regenerated if the node is reset (wiped).

Affiliates

An affiliate is a proposed member attributed to the fact that the node has the same cluster ID and secret.

$ talosctl get affiliates

ID VERSION HOSTNAME MACHINE TYPE ADDRESSES

2VfX3nu67ZtZPl57IdJrU87BMjVWkSBJiL9ulP9TCnF 2 talos-default-master-2 controlplane ["172.20.0.3","fd83:b1f7:fcb5:2802:986b:7eff:fec5:889d"]

6EVq8RHIne03LeZiJ60WsJcoQOtttw1ejvTS6SOBzhUA 2 talos-default-worker-1 worker ["172.20.0.5","fd83:b1f7:fcb5:2802:cc80:3dff:fece:d89d"]

NVtfu1bT1QjhNq5xJFUZl8f8I8LOCnnpGrZfPpdN9WlB 2 talos-default-worker-2 worker ["172.20.0.6","fd83:b1f7:fcb5:2802:2805:fbff:fe80:5ed2"]

Utoh3O0ZneV0kT2IUBrh7TgdouRcUW2yzaaMl4VXnCd 4 talos-default-master-1 controlplane ["172.20.0.2","fd83:b1f7:fcb5:2802:8c13:71ff:feaf:7c94"]

b3DebkPaCRLTLLWaeRF1ejGaR0lK3m79jRJcPn0mfA6C 2 talos-default-master-3 controlplane ["172.20.0.4","fd83:b1f7:fcb5:2802:248f:1fff:fe5c:c3f"]

The Affiliate whose ID matches the node identity is populated from the node data; the other Affiliates are pulled from the registries.

Enabled discovery registries run in parallel and discovered data is merged to build the list presented above.

Details about data coming from each registry can be queried from the cluster-raw namespace:

$ talosctl get affiliates --namespace=cluster-raw

ID VERSION HOSTNAME MACHINE TYPE ADDRESSES

k8s/2VfX3nu67ZtZPl57IdJrU87BMjVWkSBJiL9ulP9TCnF 3 talos-default-master-2 controlplane ["172.20.0.3","fd83:b1f7:fcb5:2802:986b:7eff:fec5:889d"]

k8s/6EVq8RHIne03LeZiJ60WsJcoQOtttw1ejvTS6SOBzhUA 2 talos-default-worker-1 worker ["172.20.0.5","fd83:b1f7:fcb5:2802:cc80:3dff:fece:d89d"]

k8s/NVtfu1bT1QjhNq5xJFUZl8f8I8LOCnnpGrZfPpdN9WlB 2 talos-default-worker-2 worker ["172.20.0.6","fd83:b1f7:fcb5:2802:2805:fbff:fe80:5ed2"]

k8s/b3DebkPaCRLTLLWaeRF1ejGaR0lK3m79jRJcPn0mfA6C 3 talos-default-master-3 controlplane ["172.20.0.4","fd83:b1f7:fcb5:2802:248f:1fff:fe5c:c3f"]

service/2VfX3nu67ZtZPl57IdJrU87BMjVWkSBJiL9ulP9TCnF 23 talos-default-master-2 controlplane ["172.20.0.3","fd83:b1f7:fcb5:2802:986b:7eff:fec5:889d"]

service/6EVq8RHIne03LeZiJ60WsJcoQOtttw1ejvTS6SOBzhUA 26 talos-default-worker-1 worker ["172.20.0.5","fd83:b1f7:fcb5:2802:cc80:3dff:fece:d89d"]

service/NVtfu1bT1QjhNq5xJFUZl8f8I8LOCnnpGrZfPpdN9WlB 20 talos-default-worker-2 worker ["172.20.0.6","fd83:b1f7:fcb5:2802:2805:fbff:fe80:5ed2"]

service/b3DebkPaCRLTLLWaeRF1ejGaR0lK3m79jRJcPn0mfA6C 14 talos-default-master-3 controlplane ["172.20.0.4","fd83:b1f7:fcb5:2802:248f:1fff:fe5c:c3f"]

Each Affiliate ID is prefixed with k8s/ for data coming from the Kubernetes registry and with service/ for data coming from the discovery service.
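When comparing registries, it can be handy to group the raw IDs by node. The sketch below works on a plain list of raw ID strings such as those in the ID column above; the function names are hypothetical and how the IDs are collected is left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def group_by_registry(raw_ids: Iterable[str]) -> Dict[str, Set[str]]:
    """Map each node ID to the set of registry prefixes that reported it."""
    groups: Dict[str, Set[str]] = defaultdict(set)
    for raw in raw_ids:
        # "k8s/<id>" or "service/<id>": split on the first slash only.
        registry, _, node_id = raw.partition("/")
        groups[node_id].add(registry)
    return dict(groups)


def missing_from(groups: Dict[str, Set[str]], registry: str) -> List[str]:
    """Node IDs that the given registry did not report, sorted for stable output."""
    return sorted(node for node, seen in groups.items() if registry not in seen)
```

For example, `missing_from(group_by_registry(ids), "service")` lists the nodes known only through the Kubernetes registry, which can point at a node that cannot reach the discovery service.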

Members

A member is an affiliate that has been approved to join the cluster. The members of the cluster can be obtained with:

$ talosctl get members

ID VERSION HOSTNAME MACHINE TYPE OS ADDRESSES

talos-default-master-1 2 talos-default-master-1 controlplane Talos (v0.14.0) ["172.20.0.2","fd83:b1f7:fcb5:2802:8c13:71ff:feaf:7c94"]

talos-default-master-2 1 talos-default-master-2 controlplane Talos (v0.14.0) ["172.20.0.3","fd83:b1f7:fcb5:2802:986b:7eff:fec5:889d"]

talos-default-master-3 1 talos-default-master-3 controlplane Talos (v0.14.0) ["172.20.0.4","fd83:b1f7:fcb5:2802:248f:1fff:fe5c:c3f"]

talos-default-worker-1 1 talos-default-worker-1 worker Talos (v0.14.0) ["172.20.0.5","fd83:b1f7:fcb5:2802:cc80:3dff:fece:d89d"]

talos-default-worker-2 1 talos-default-worker-2 worker Talos (v0.14.0) ["172.20.0.6","fd83:b1f7:fcb5:2802:2805:fbff:fe80:5ed2"]

18 - Disk Encryption

It is possible to enable encryption for system disks at the OS level.

As of this writing, only STATE and EPHEMERAL partitions can be encrypted.

STATE contains the most sensitive node data: secrets and certs.

EPHEMERAL partition may contain some sensitive workload data.

Data is encrypted using LUKS2, which is provided by the Linux kernel modules and cryptsetup utility.

The operating system will run additional setup steps when encryption is enabled.

If the disk encryption is enabled for the STATE partition, the system will:

- Save STATE encryption config as JSON in the META partition.

- Before mounting the STATE partition, load encryption configs either from the machine config or from the META partition. Note that the machine config is always preferred over the META one.

- Before mounting the STATE partition, format and encrypt it. This occurs only if the STATE partition is empty and has no filesystem.

If the disk encryption is enabled for the EPHEMERAL partition, the system will:

- Get the encryption config from the machine config.

- Before mounting the EPHEMERAL partition, encrypt and format it. This occurs only if the EPHEMERAL partition is empty and has no filesystem.

Configuration

Right now this encryption is disabled by default. To enable disk encryption you should modify the machine configuration with the following options:

machine:
  ...
  systemDiskEncryption:
    ephemeral:
      keys:
        - nodeID: {}
          slot: 0
    state:
      keys:
        - nodeID: {}
          slot: 0

Encryption Keys

Note: What the LUKS2 docs call “keys” are, in reality, a passphrase. When this passphrase is added, LUKS2 runs argon2 to create an actual key from that passphrase.

LUKS2 supports up to 32 encryption keys and it is possible to specify all of them in the machine configuration. Talos always tries to sync the keys list defined in the machine config with the actual keys defined for the LUKS2 partition. So if you update the keys list, keep at least one key unchanged so that it can be used for key management.

When you define a key you should specify the key kind and the slot:

machine:
  ...
  state:
    keys:
      - nodeID: {} # key kind
        slot: 1
  ephemeral:
    keys:
      - static:
          passphrase: supersecret
        slot: 0

Note that key order plays no role in which key slot is used. Every key must always have a slot defined.
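These rules can be checked before a configuration is applied. The sketch below validates a keys list represented as Python dictionaries. It assumes slots are numbered 0 to 31 (derived from the 32-key limit) and that each entry carries exactly one key kind, `nodeID` or `static`; the function name is hypothetical.

```python
from typing import Dict, List

MAX_SLOTS = 32  # LUKS2 supports up to 32 keys; 0..31 numbering is an assumption
KEY_KINDS = ("nodeID", "static")


def validate_keys(keys: List[Dict[str, object]]) -> None:
    """Raise ValueError when a keys list breaks the slot or key kind rules."""
    seen = set()
    for index, key in enumerate(keys):
        if "slot" not in key:
            raise ValueError(f"key {index} has no slot defined")
        slot = key["slot"]
        if not isinstance(slot, int) or not 0 <= slot < MAX_SLOTS:
            raise ValueError(f"key {index} has an invalid slot: {slot!r}")
        if slot in seen:
            raise ValueError(f"slot {slot} is used more than once")
        seen.add(slot)
        kinds = [kind for kind in KEY_KINDS if kind in key]
        if len(kinds) != 1:
            raise ValueError(f"key {index} must have exactly one key kind")
```

Calling `validate_keys([{"nodeID": {}, "slot": 1}])` passes silently, while a list with a missing or duplicated slot raises a `ValueError` that names the problem.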

Encryption Key Kinds

Talos supports two kinds of keys:

- nodeID which is generated using the node UUID and the partition label (note that if the node UUID is not really random it will fail the entropy check).

- static which you define right in the configuration.

Note: Use static keys only if your STATE partition is encrypted and only for the EPHEMERAL partition. For the STATE partition, a static key would be stored in the META partition, which is not encrypted.

Key Rotation

It is necessary to run talosctl apply-config a couple of times to rotate keys, since there is a need to always maintain a single working key while changing the other keys around it.

So, for example, first add a new key:

machine:
  ...
  ephemeral:
    keys:
      - static:
          passphrase: oldkey
        slot: 0
      - static:
          passphrase: newkey
        slot: 1
  ...

Run:

talosctl apply-config -n <node> -f config.yaml

Then remove the old key:

machine:
  ...
  ephemeral:
    keys:
      - static:
          passphrase: newkey
        slot: 1
  ...

Run:

talosctl apply-config -n <node> -f config.yaml
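The two-step flow can be expressed as a small planning helper that refuses a change which would leave no unchanged key. This is a sketch of the reasoning only, with hypothetical function names; it does not talk to a node.

```python
from typing import Dict, List

Key = Dict[str, object]


def rotation_is_safe(current: List[Key], desired: List[Key]) -> bool:
    """True when at least one key (same slot, same definition) survives the change."""
    return any(key in current for key in desired)


def rotation_steps(old: Key, new: Key) -> List[List[Key]]:
    """Return the key lists to apply in order: add the new key, then drop the old one."""
    if old["slot"] == new["slot"]:
        raise ValueError("the new key needs a different slot than the old key")
    return [[old, new], [new]]
```

Each list returned by `rotation_steps` corresponds to one talosctl apply-config run. Replacing the old key with the new one in a single step would make `rotation_is_safe` return `False`, which is the situation the procedure above avoids.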

Going from Unencrypted to Encrypted and Vice Versa

Ephemeral Partition

There is no in-place encryption support for the partitions right now, so to avoid losing any data only empty partitions can be encrypted.

As such, migration from unencrypted to encrypted needs some additional handling, especially around explicitly wiping partitions.

- apply-config should be called with the --on-reboot flag.

- The partition should be wiped after apply-config, but before the reboot.

Edit your machine config and add the encryption configuration:

vim config.yaml

Apply the configuration with the --on-reboot flag:

talosctl apply-config -f config.yaml -n <node ip> --on-reboot

Wipe the partition you’re going to encrypt:

talosctl reset --system-labels-to-wipe EPHEMERAL -n <node ip> --reboot=true

That’s it! After you run the last command, the partition will be wiped and the node will reboot. During the next boot the system will encrypt the partition.

State Partition

Calling wipe against the STATE partition will make the node lose the config, so the previous flow is not going to work.

The flow should be to first wipe the STATE partition:

talosctl reset --system-labels-to-wipe STATE -n <node ip> --reboot=true

The node will enter maintenance mode. Then run apply-config with the --insecure flag:

talosctl apply-config --insecure -n <node ip> -f config.yaml

After installation is complete the node should encrypt the STATE partition.

19 - Editing Machine Configuration

Talos node state is fully defined by machine configuration. Initial configuration is delivered to the node at bootstrap time, but configuration can be updated while the node is running.

Note: Be sure that config is persisted so that configuration updates are not overwritten on reboots. Configuration persistence has been enabled by default since Talos 0.5 (persist: true in machine configuration).

There are three talosctl commands which facilitate machine configuration updates:

- talosctl apply-config to apply configuration from the file

- talosctl edit machineconfig to launch an editor with existing node configuration, make changes and apply configuration back

- talosctl patch machineconfig to apply automated machine configuration via JSON patch

Each of these commands can operate in one of three modes:

- apply change with a reboot (default): update configuration, reboot Talos node to apply configuration change

- apply change immediately (--immediate flag): change is applied immediately without a reboot; only the .cluster sub-tree of the machine configuration can be updated in Talos 0.9

- apply change on next reboot (--on-reboot): change is staged to be applied after a reboot, but node is not rebooted

Note: applying change on next reboot (--on-reboot) doesn’t modify current node configuration, so the next call to talosctl edit machineconfig --on-reboot will not see the changes.

talosctl apply-config

This command is mostly used to submit initial machine configuration to the node (generated by talosctl gen config).

It can be used to apply new configuration from the file to the running node as well, but most of the time it’s not convenient, as it doesn’t operate on the current node machine configuration.

Example:

talosctl -n <IP> apply-config -f config.yaml

Command apply-config can also be invoked as apply machineconfig:

talosctl -n <IP> apply machineconfig -f config.yaml

Applying machine configuration immediately (without a reboot):

talosctl -n <IP> apply machineconfig -f config.yaml --immediate

talosctl edit machineconfig

Command talosctl edit loads current machine configuration from the node and launches configured editor to modify the config.

If config hasn’t been changed in the editor (or if updated config is empty), update is not applied.

Note: Talos uses environment variables TALOS_EDITOR, EDITOR to pick up the editor preference. If environment variables are missing, the vi editor is used by default.

Example:

talosctl -n <IP> edit machineconfig

Configuration can be edited for multiple nodes if multiple IP addresses are specified:

talosctl -n <IP1>,<IP2>,... edit machineconfig

Applying machine configuration change immediately (without a reboot):

talosctl -n <IP> edit machineconfig --immediate

talosctl patch machineconfig

Command talosctl patch works similarly to the talosctl edit command: it loads the current machine configuration, but instead of launching the configured editor it applies a JSON patch to the configuration and writes the result back to the node.

Example, updating kubelet version (with a reboot):

$ talosctl -n <IP> patch machineconfig -p '[{"op": "replace", "path": "/machine/kubelet/image", "value": "ghcr.io/talos-systems/kubelet:v1.20.5"}]'

patched mc at the node <IP>

Updating kube-apiserver version in immediate mode (without a reboot):

$ talosctl -n <IP> patch machineconfig --immediate -p '[{"op": "replace", "path": "/cluster/apiServer/image", "value": "k8s.gcr.io/kube-apiserver:v1.20.5"}]'

patched mc at the node <IP>

Patch might be applied to multiple nodes when multiple IPs are specified:

talosctl -n <IP1>,<IP2>,... patch machineconfig --immediate -p '[{...}]'
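Writing the JSON patch by hand inside single quotes is easy to get wrong. As a minimal sketch, a shell function can assemble a single "replace" operation in the same shape as the examples above. The name json_replace_patch is hypothetical, and the function does not escape quotes or backslashes, so it only suits simple values such as image references.

```shell
# Sketch: build a one-operation JSON patch of the form used above.
# json_replace_patch is a hypothetical helper; it performs no JSON escaping.
json_replace_patch() {
  path="$1"    # JSON pointer into the machine configuration
  value="$2"   # new string value
  printf '[{"op": "replace", "path": "%s", "value": "%s"}]\n' "$path" "$value"
}
```

Usage: talosctl -n <IP> patch machineconfig -p "$(json_replace_patch /machine/kubelet/image ghcr.io/talos-systems/kubelet:v1.20.5)"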

Recovering from Node Boot Failures

If a Talos node fails to boot because of wrong configuration (for example, control plane endpoint is incorrect), configuration can be updated to fix the issue.

If the boot sequence is still running, Talos might refuse applying config in default mode.

In that case --on-reboot mode can be used coupled with talosctl reboot command to trigger a reboot and apply configuration update.

20 - KubeSpan

KubeSpan is a feature of Talos that automates the setup and maintenance of a full mesh WireGuard network for your cluster, giving you the ability to operate hybrid Kubernetes clusters that can span the edge, datacenter, and cloud. Management of keys and discovery of peers can be completely automated for a zero-touch experience that makes it simple and easy to create hybrid clusters.

Enabling

Creating a New Cluster

To generate configuration files for a new cluster, we can use the --with-kubespan flag in talosctl gen config.

This will enable peer discovery and KubeSpan.

...
    # Provides machine specific network configuration options.
    network:
        # Configures KubeSpan feature.
        kubespan:
            enabled: true # Enable the KubeSpan feature.
...
    # Configures cluster member discovery.
    discovery:
        enabled: true # Enable the cluster membership discovery feature.
        # Configure registries used for cluster member discovery.
        registries:
            # Kubernetes registry uses Kubernetes API server to discover cluster members and stores additional information
            kubernetes: {}
            # Service registry is using an external service to push and pull information about cluster members.
            service: {}
...
# Provides cluster specific configuration options.
cluster:
    id: yui150Ogam0pdQoNZS2lZR-ihi8EWxNM17bZPktJKKE= # Globally unique identifier for this cluster.
    secret: dAmFcyNmDXusqnTSkPJrsgLJ38W8oEEXGZKM0x6Orpc= # Shared secret of cluster.

The default discovery service is an external service hosted for free by Sidero Labs. The default value is https://discovery.talos.dev/. Contact Sidero Labs if you need to run this service privately.

Upgrading an Existing Cluster

In order to enable KubeSpan for an existing cluster, upgrade to the latest v0.14. Once your cluster is upgraded, the configuration of each node must contain the globally unique identifier, the shared secret for the cluster, and have KubeSpan and discovery enabled.

Note: Discovery can be used without KubeSpan, but KubeSpan requires at least one discovery registry.

Talos v0.11 or Less

If you are migrating from Talos v0.11 or less, we need to generate a cluster ID and secret.

To generate an id:

$ openssl rand -base64 32

EUsCYz+oHNuBppS51P9aKSIOyYvIPmbZK944PWgiyMQ=

To generate a secret:

$ openssl rand -base64 32

AbdsWjY9i797kGglghKvtGdxCsdllX9CemLq+WGVeaw=

Now, update the configuration of each node in the cluster with the generated id and secret.

You should end up with the addition of something like this (your id and secret should be different):

cluster:
  id: EUsCYz+oHNuBppS51P9aKSIOyYvIPmbZK944PWgiyMQ=
  secret: AbdsWjY9i797kGglghKvtGdxCsdllX9CemLq+WGVeaw=

Note: This can be applied in immediate mode (no reboot required) by passing --immediate to either the edit machineconfig or apply-config subcommands.
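To avoid copying the two values by hand, the id and secret can be generated and printed as a ready-to-paste fragment in one step. This is a minimal sketch that reuses the openssl command shown above; the function name gen_cluster_identity is hypothetical.

```shell
# Sketch: generate a cluster id and secret and print them as a YAML fragment.
# gen_cluster_identity is a hypothetical helper; it relies on openssl as above.
gen_cluster_identity() {
  # Two independent 32-byte random values, base64-encoded.
  id="$(openssl rand -base64 32)" || return 1
  secret="$(openssl rand -base64 32)" || return 1
  printf 'cluster:\n  id: %s\n  secret: %s\n' "$id" "$secret"
}
```

Usage: run gen_cluster_identity once and apply the same output to every node in the cluster, since all nodes must share one id and one secret.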

Talos v0.12

Enable KubeSpan and discovery:

machine:
  network:
    kubespan:
      enabled: true
cluster:
  discovery:
    enabled: true

Resource Definitions

KubeSpanIdentities

A node’s WireGuard identities can be obtained with:

$ talosctl get kubespanidentities -o yaml

...
spec:
    address: fd83:b1f7:fcb5:2802:8c13:71ff:feaf:7c94/128
    subnet: fd83:b1f7:fcb5:2802::/64
    privateKey: gNoasoKOJzl+/B+uXhvsBVxv81OcVLrlcmQ5jQwZO08=
    publicKey: NzW8oeIH5rJyY5lefD9WRoHWWRr/Q6DwsDjMX+xKjT4=

Talos automatically configures a unique IPv6 address for each node in the cluster-specific IPv6 ULA prefix.

A WireGuard private key is generated for the node. The private key never leaves the node, while the public key is published through the cluster discovery.

KubeSpanIdentity is persisted across reboots and upgrades in the STATE partition, in the file kubespan-identity.yaml.

KubeSpanPeerSpecs

A node’s WireGuard peers can be obtained with:

$ talosctl get kubespanpeerspecs

ID                                             VERSION   LABEL                    ENDPOINTS
06D9QQOydzKrOL7oeLiqHy9OWE8KtmJzZII2A5/FLFI=   2         talos-default-master-2   ["172.20.0.3:51820"]
THtfKtfNnzJs1nMQKs5IXqK0DFXmM//0WMY+NnaZrhU=   2         talos-default-master-3   ["172.20.0.4:51820"]
nVHu7l13uZyk0AaI1WuzL2/48iG8af4WRv+LWmAax1M=   2         talos-default-worker-2   ["172.20.0.6:51820"]
zXP0QeqRo+CBgDH1uOBiQ8tA+AKEQP9hWkqmkE/oDlc=   2         talos-default-worker-1   ["172.20.0.5:51820"]

The peer ID is the WireGuard public key.

KubeSpanPeerSpecs are built from the cluster discovery data.

KubeSpanPeerStatuses

The status of a node’s WireGuard peers can be obtained with:

$ talosctl get kubespanpeerstatuses

ID                                             VERSION   LABEL                    ENDPOINT           STATE   RX         TX
06D9QQOydzKrOL7oeLiqHy9OWE8KtmJzZII2A5/FLFI=   63        talos-default-master-2   172.20.0.3:51820   up      15043220   17869488
THtfKtfNnzJs1nMQKs5IXqK0DFXmM//0WMY+NnaZrhU=   62        talos-default-master-3   172.20.0.4:51820   up      14573208   18157680
nVHu7l13uZyk0AaI1WuzL2/48iG8af4WRv+LWmAax1M=   60        talos-default-worker-2   172.20.0.6:51820   up      130072     46888
zXP0QeqRo+CBgDH1uOBiQ8tA+AKEQP9hWkqmkE/oDlc=   60        talos-default-worker-1   172.20.0.5:51820   up      130044     46556

KubeSpan peer status includes the following information:

- the actual endpoint used for peer communication

- link state:

  - unknown: the endpoint was just changed, link state is not known yet

  - up: there is a recent handshake from the peer

  - down: there is no handshake from the peer

- number of bytes sent/received over the WireGuard link with the peer
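When a cluster has many peers, it can help to filter the status table down to the links that are not up. The following is a sketch only: peers_not_up is a hypothetical helper, and it assumes the whitespace-separated table layout shown above, with LABEL and STATE columns named in the header row.

```shell
# Sketch: print "<label> <state>" for every peer whose link state is not "up".
# peers_not_up is a hypothetical helper; it reads the table from stdin and
# finds the LABEL and STATE columns from the header row.
peers_not_up() {
  awk '
    NR == 1 {
      for (i = 1; i <= NF; i++) {
        if ($i == "LABEL") label = i
        if ($i == "STATE") state = i
      }
      next
    }
    NF && state && $state != "up" { print $label, $state }
  '
}
```

Usage: talosctl get kubespanpeerstatuses | peers_not_up. No output means every listed peer reported the up state.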